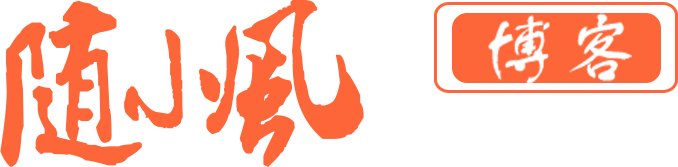

0. Kubernetes architecture diagram (adapted from another author)

1. Environment preparation

1.1 Host OS environment

[root@localhost ~]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

[root@localhost ~]# uname -r

3.10.0-693.el7.x86_64

1.2 Set the hostnames (run each command on the corresponding host)

hostnamectl set-hostname k8s-master

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

1.3 System plan

| Hostname | IP address | Role |
|---|---|---|
| k8s-master | 192.168.85.12 | master, etcd, registry |
| k8s-node01 | 192.168.85.13 | node, etcd |
| k8s-node02 | 192.168.85.14 | node, etcd |
| k8s-node03 | 192.168.85.15 | ansible |

1.4 Configure hosts-file name resolution

cat >> /etc/hosts <<EOF

192.168.85.12 k8s-master

192.168.85.13 k8s-node01

192.168.85.14 k8s-node02

EOF
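Re-running the `cat >> /etc/hosts` heredoc above appends the same entries a second time. A minimal idempotent alternative, sketched as a hypothetical bash helper `add_host_entry` (not part of the original guide), only appends a mapping when that exact line is missing:

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to a hosts-style file unless that exact line already exists.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host_entry /etc/hosts 192.168.85.12 k8s-master` can be run any number of times and leaves a single line. It compares whole lines only, so an existing entry written with different spacing (for example a tab) would not be recognized.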

1.5 Configure passwordless SSH and install Ansible for file distribution

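This only needs to be done on k8s-node03. Most later steps are run on this machine, and the generated files are then distributed to the corresponding nodes.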

ssh-keygen

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.85.12

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.85.13

ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.85.14

Install Ansible:

yum install -y epel-release

yum install ansible -y

Define the host groups in the Ansible inventory (by default /etc/ansible/hosts):

[k8s-master]

192.168.85.12

[k8s-node]

192.168.85.13

192.168.85.14

[k8s-all]

192.168.85.12

192.168.85.13

192.168.85.14

Verify:

[root@k8s-node03 ~]# ansible k8s-all -m ping

192.168.85.12 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.85.13 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.85.14 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

1.6 Disable the firewall and SELinux

ansible k8s-all -m shell -a 'systemctl stop firewalld'

ansible k8s-all -m shell -a 'systemctl disable firewalld'

ansible k8s-all -m replace -a 'path=/etc/sysconfig/selinux regexp="SELINUX=enforcing" replace=SELINUX=disabled'

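ansible k8s-all -m replace -a 'path=/etc/selinux/config regexp="SELINUX=enforcing" replace=SELINUX=disabled'

The SELINUX=disabled setting only takes effect after a reboot. To stop enforcement for the current boot as well, switch SELinux to permissive mode:

ansible k8s-all -m shell -a 'setenforce 0'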

1.7 Kernel parameter tuning

vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

ansible k8s-all -m copy -a "src=/etc/sysctl.d/k8s.conf dest=/etc/sysctl.d/k8s.conf"

ansible k8s-all -m shell -a 'modprobe br_netfilter'

ansible k8s-all -m shell -a 'sysctl -p /etc/sysctl.d/k8s.conf'
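Before distributing k8s.conf it can help to sanity-check it. A hypothetical bash helper `verify_k8s_sysctl_conf` (an illustration, not from the original guide) that fails unless each of the three keys above is set to 1 in the given file:

```bash
#!/usr/bin/env bash
# verify_k8s_sysctl_conf FILE  (hypothetical helper)
# Returns 0 only if every required key is set to 1 in FILE; reports offenders on stderr.
verify_k8s_sysctl_conf() {
  local file=$1 key rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    # Split each line on "=", strip all whitespace from both sides, compare exactly.
    if ! awk -F'=' -v k="$key" '
        { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
        $1 == k && $2 == "1" { found = 1 }
        END { exit !found }' "$file"; then
      echo "not set to 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `verify_k8s_sysctl_conf /etc/sysctl.d/k8s.conf && echo ok`. This only checks the file; the live values can be read afterwards with, for example, `sysctl net.bridge.bridge-nf-call-iptables`, and the net.bridge.* keys exist only once br_netfilter is loaded, which is why modprobe runs before sysctl -p above.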

1.8 Time synchronization

ansible k8s-all -m yum -a "name=ntpdate state=latest"

ansible k8s-all -m cron -a "name='k8s cluster crontab' minute=*/30 hour=* day=* month=* weekday=* job='ntpdate time7.aliyun.com >/dev/null 2>&1'"

ansible k8s-all -m shell -a "ntpdate time7.aliyun.com"

1.9 Disable swap

ansible k8s-all -m shell -a " sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab"

ansible k8s-all -m shell -a 'swapoff -a'
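To confirm the sed edit really commented out the swap entry (otherwise swap comes back after a reboot), here is a hypothetical helper `fstab_has_active_swap` (not from the original guide) that succeeds only if an uncommented entry of type swap remains:

```bash
#!/usr/bin/env bash
# fstab_has_active_swap FILE  (hypothetical helper)
# Exit status 0 if FILE still has an uncommented entry whose type field (3rd column) is "swap".
fstab_has_active_swap() {
  awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$1"
}
```

For example, `fstab_has_active_swap /etc/fstab && echo 'swap is still enabled in fstab'`. After `swapoff -a`, `free -m` should also report a Swap total of 0.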

1.10 Create the cluster directories

ansible k8s-all -m file -a 'path=/etc/kubernetes/ssl state=directory'

ansible k8s-all -m file -a 'path=/etc/kubernetes/config state=directory'

[root@k8s-master ~]# mkdir /opt/k8s/{certs,cfg,unit} -p

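1.11 Install Docker

yum-config-manager is provided by the yum-utils package, so install that first:

ansible k8s-all -m shell -a 'yum install -y yum-utils'

ansible k8s-all -m shell -a 'yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo'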

ansible k8s-all -m shell -a 'yum install docker-ce -y'

ansible k8s-all -m shell -a 'systemctl start docker && systemctl enable docker'

2. Create the CA certificate and key

2.1 Install and configure CFSSL

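Certificates can be generated on any node; here they are generated on k8s-node03. They only need to be created once: when a new node is added to the cluster later, just copy the certificates under /etc/kubernetes/ssl to the new node.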

mkdir k8s/cfssl -p && cd k8s/cfssl/

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

cp cfssl_linux-amd64 /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

cp cfssljson_linux-amd64 /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

cp cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2.2 Create the root certificate (CA)

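The CA certificate is shared by all nodes in the cluster. Only one CA certificate is needed, and every certificate created later is signed by it. First create the CA signing configuration: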

cd /opt/k8s/certs/

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

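Notes:

1. signing: the certificate can be used to sign other certificates (the generated ca.pem contains CA=TRUE);

2. server auth: a client can use this certificate to verify certificates presented by servers;

3. client auth: a server can use this certificate to verify certificates presented by clients.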

2.3 Create the CA certificate signing request (CSR) file

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

2.4 Generate the CA certificate, private key, and CSR

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@k8s-node03 certs]# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

2.5 Distribute the certificate files

Copy the generated CA certificate, private key, and CSR to the /etc/kubernetes/ssl directory on all nodes:

ansible k8s-all -m copy -a 'src=ca.csr dest=/etc/kubernetes/ssl/'

ansible k8s-all -m copy -a 'src=ca-key.pem dest=/etc/kubernetes/ssl/'

ansible k8s-all -m copy -a 'src=ca.pem dest=/etc/kubernetes/ssl/'

3. Deploy the etcd cluster

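etcd is the most important component of a Kubernetes cluster: it stores all cluster state, so if etcd goes down, the cluster goes down. For high availability, etcd is deployed here on three nodes (k8s-master, k8s-node01, and k8s-node02). etcd uses the Raft algorithm to elect a leader, and because Raft needs a majority of votes to make decisions, an odd number of members is recommended, typically 3, 5, or 7.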

3.1 Download the etcd binaries

cd k8s/

wget https://github.com/etcd-io/etcd/releases/download/v3.3.12/etcd-v3.3.12-linux-amd64.tar.gz

tar -xf etcd-v3.3.12-linux-amd64.tar.gz

cd etcd-v3.3.12-linux-amd64

Copy etcd and etcdctl to all three etcd members:

ansible k8s-all -m copy -a 'src=/root/k8s/etcd-v3.3.12-linux-amd64/etcd dest=/usr/local/bin/ mode=0755'

ansible k8s-all -m copy -a 'src=/root/k8s/etcd-v3.3.12-linux-amd64/etcdctl dest=/usr/local/bin/ mode=0755'

3.2 Create the etcd certificate signing request file

cat > /opt/k8s/certs/etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.85.12",

"192.168.85.13",

"192.168.85.14"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

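Note: hosts lists each etcd node's IP plus the local 127.0.0.1 address. In production, reserve a few extra IPs in this list so that adding nodes later, or migrating a member after a failure, does not require regenerating the certificate.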
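Following that advice, the hosts list can be generated rather than edited by hand. A hypothetical `gen_etcd_csr` helper (assumed, not from the original guide) that prints the same CSR as above with whatever addresses are passed as arguments:

```bash
#!/usr/bin/env bash
# gen_etcd_csr HOST...  (hypothetical helper)
# Prints an etcd CSR in the same shape as etcd-csr.json above, with "hosts" built from the arguments.
gen_etcd_csr() {
  local hosts="" h
  for h in "$@"; do
    # Prefix ", " before every entry except the first.
    hosts+="${hosts:+, }\"${h}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "ShangHai", "L": "ShangHai", "O": "k8s", "OU": "System"}]
}
EOF
}
```

For example, `gen_etcd_csr 127.0.0.1 192.168.85.12 192.168.85.13 192.168.85.14 192.168.85.16 > /opt/k8s/certs/etcd-csr.json`, where 192.168.85.16 stands in for a hypothetical spare address reserved for a future member.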

3.3 Generate the certificate and private key

cd /opt/k8s/certs/

cfssl gencert -ca=/opt/k8s/certs/ca.pem -ca-key=/opt/k8s/certs/ca-key.pem -config=/opt/k8s/certs/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

Check:

[root@k8s-node03 certs]# ll etcd*

-rw-r--r-- 1 root root 1066 Nov 4 20:49 etcd.csr

-rw-r--r-- 1 root root 301 Nov 4 20:44 etcd-csr.json

-rw------- 1 root root 1679 Nov 4 20:49 etcd-key.pem

-rw-r--r-- 1 root root 1440 Nov 4 20:49 etcd.pem

3.4 Distribute the etcd certificates

Copy the generated etcd certificate and key into the certificate directory on all three etcd nodes:

ansible k8s-all -m copy -a 'src=/opt/k8s/certs/etcd.pem dest=/etc/kubernetes/ssl/'

ansible k8s-all -m copy -a 'src=/opt/k8s/certs/etcd-key.pem dest=/etc/kubernetes/ssl/'

3.5 Prepare a dedicated etcd user

Create a dedicated user and data directory for etcd.

3.5.1 Create the etcd user and group

ansible k8s-all -m group -a 'name=etcd'

ansible k8s-all -m user -a 'name=etcd group=etcd comment="etcd user" shell=/sbin/nologin home=/var/lib/etcd createhome=no'

3.5.2 Create the etcd data directory and set its ownership

ansible k8s-all -m file -a 'path=/var/lib/etcd state=directory owner=etcd group=etcd'

3.6 Create the etcd configuration file

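The etcd configuration references the certificates. If etcd runs as the etcd user, that user must be able to read them, otherwise etcd reports that it cannot load the certificates; mode 644 is sufficient.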

cat > /etc/kubernetes/config/etcd<<EOF

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.85.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.85.12:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.85.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.85.12:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.85.12:2380,etcd02=https://192.168.85.13:2380,etcd03=https://192.168.85.14:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

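Parameter reference:

ETCD_NAME: member name

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: initial cluster state; new for a new cluster, existing to join an existing cluster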

Copy it to all etcd nodes:

ansible k8s-all -m copy -a 'src=/etc/kubernetes/config/etcd dest=/etc/kubernetes/config/etcd'

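Then edit this file on the other two machines: set ETCD_NAME to etcd02 and etcd03 respectively, and change the listen and advertise addresses to each machine's own IP.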
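Editing the file by hand on each machine is error-prone. A minimal sketch of a hypothetical `gen_etcd_env` function (not from the original guide) that renders the same file for any member name and IP, keeping ETCD_INITIAL_CLUSTER identical on all members:

```bash
#!/usr/bin/env bash
# gen_etcd_env NAME IP INITIAL_CLUSTER  (hypothetical helper)
# Prints the etcd environment file for one member; only NAME and IP differ between members.
gen_etcd_env() {
  local name=$1 ip=$2 cluster=$3
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, `gen_etcd_env etcd02 192.168.85.13 "$CLUSTER" > etcd-192.168.85.13` renders the file for k8s-node01, which can then be copied to /etc/kubernetes/config/etcd on that host. CLUSTER is a hypothetical variable holding the ETCD_INITIAL_CLUSTER value shown above.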

3.7 Manage etcd with systemd

Write the unit file under /opt/k8s/unit, from where it is distributed below:

vim /opt/k8s/unit/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/kubernetes/config/etcd

ExecStart=/usr/local/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=${ETCD_INITIAL_CLUSTER_STATE} \

--cert-file=/etc/kubernetes/ssl/etcd.pem \

--key-file=/etc/kubernetes/ssl/etcd-key.pem \

--peer-cert-file=/etc/kubernetes/ssl/etcd.pem \

--peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \

--trusted-ca-file=/etc/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

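Copy the unit file to every etcd node, then enable and start etcd on all three members. Start them together: a member of a new cluster may appear to hang until enough other members are up to form a quorum.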

ansible k8s-all -m copy -a 'src=/opt/k8s/unit/etcd.service dest=/usr/lib/systemd/system/etcd.service'

ansible k8s-all -m shell -a 'systemctl daemon-reload'

ansible k8s-all -m shell -a 'systemctl enable etcd'

ansible k8s-all -m shell -a 'systemctl start etcd'

3.8 Verify the etcd cluster status

[root@k8s-master ~]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem --endpoints="https://192.168.85.12:2379,\

> https://192.168.85.13:2379,\

> https://192.168.85.14:2379" cluster-health

member 19612d11bb42f3f is healthy: got healthy result from https://192.168.85.14:2379

member 23a452a6e5bfaa4 is healthy: got healthy result from https://192.168.85.13:2379

member 2ce221743acad866 is healthy: got healthy result from https://192.168.85.12:2379

cluster is healthy

List the members:

etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem --endpoints="https://192.168.85.12:2379,\

https://192.168.85.13:2379,\

https://192.168.85.14:2379" member list

19612d11bb42f3f: name=etcd03 peerURLs=https://192.168.85.14:2380 clientURLs=https://192.168.85.14:2379 isLeader=true

23a452a6e5bfaa4: name=etcd02 peerURLs=https://192.168.85.13:2380 clientURLs=https://192.168.85.13:2379 isLeader=false

2ce221743acad866: name=etcd01 peerURLs=https://192.168.85.12:2380 clientURLs=https://192.168.85.12:2379 isLeader=false

The cluster reports healthy, and isLeader=true marks the node that is currently the leader.
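The same three-endpoint list is repeated in every etcdctl call in this guide. A hypothetical bash helper `etcd_endpoints` (not part of etcd) that builds it from a list of IPs, assuming every member serves clients on port 2379 as configured above:

```bash
#!/usr/bin/env bash
# etcd_endpoints IP...  (hypothetical helper)
# Prints a comma-separated list of https://IP:2379 client URLs.
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    # "${out:+,}" expands to "," only once out is non-empty.
    out+="${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}
```

Usage: `etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem --endpoints="$(etcd_endpoints 192.168.85.12 192.168.85.13 192.168.85.14)" cluster-health`.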

4. Deploy the kubectl command-line tool

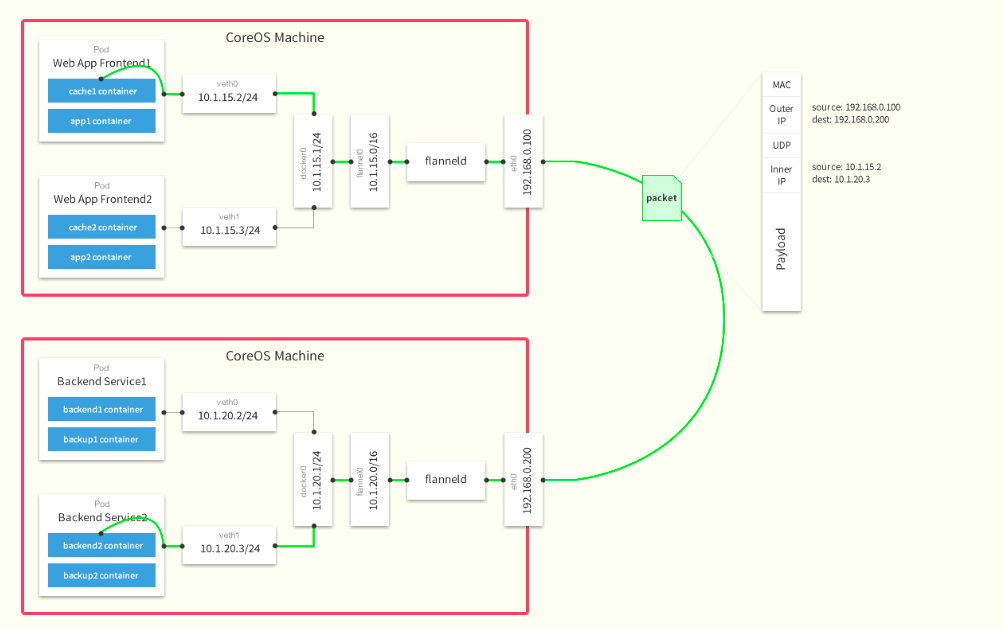

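kubectl only needs to be set up once. The generated kubeconfig file is generic: copy it to any machine that needs to run kubectl and rename it to ~/.kube/config.

Master components: kube-apiserver, etcd, kube-controller-manager, kube-scheduler, kubectl.

Node components: kubelet, kube-proxy, docker, coredns, calico (this guide uses flannel rather than calico as the network plugin).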

4.1 Download the Kubernetes binary release

[root@k8s-master ~]# cd k8s/

[root@k8s-master k8s]# wget https://storage.googleapis.com/kubernetes-release/release/v1.14.1/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master k8s]# tar -xf kubernetes-server-linux-amd64.tar.gz

4.2 Distribute the binaries each role needs

master:

[root@k8s-master k8s]# scp kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubeadm} 192.168.85.12:/usr/local/bin/

node:

scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.85.13:/usr/local/bin/

scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.85.14:/usr/local/bin/

4.3 Create the admin certificate and private key

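kubectl is used for day-to-day management of the cluster. To manage Kubernetes it has to communicate with the cluster components over TLS, so it needs a certificate.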

4.3.1 Create the certificate signing request

cat > /opt/k8s/certs/admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

4.3.2 Generate the certificate and private key

cd /opt/k8s/certs/

cfssl gencert -ca=/opt/k8s/certs/ca.pem \

-ca-key=/opt/k8s/certs/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

4.3.3 Check the generated certificates

[root@k8s-node03 certs]# ll admin*

-rw-r--r-- 1 root root 1013 Nov 6 15:37 admin.csr

-rw-r--r-- 1 root root 231 Nov 6 15:33 admin-csr.json

-rw------- 1 root root 1675 Nov 6 15:37 admin-key.pem

-rw-r--r-- 1 root root 1407 Nov 6 15:37 admin.pem

4.3.4 Distribute the certificates to all master nodes (this lab has only one master)

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin-key.pem dest=/etc/kubernetes/ssl/'

ansible k8s-master -m copy -a 'src=/opt/k8s/certs/admin.pem dest=/etc/kubernetes/ssl/'

4.3.5 Generate the kubeconfig file

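kubeconfig is kubectl's configuration file. It contains everything needed to access kube-apiserver: the apiserver address, the CA certificate, and the client's own certificate. To run kubectl against the cluster from another node, copy this file to that node.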

# Set the cluster parameters

[root@k8s-master ~]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.85.12:6443

Cluster "kubernetes" set.

# Set the client credentials

[root@k8s-master ~]# kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

User "admin" set.

# Set the context

kubectl config set-context admin@kubernetes \

--cluster=kubernetes \

--user=admin

Context "admin@kubernetes" created.

# Set the default context

[root@k8s-master ~]# kubectl config use-context admin@kubernetes

Switched to context "admin@kubernetes".

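The commands above write the ~/.kube/config file in the current user's home directory. When you operate the cluster, kube-apiserver authenticates the credentials in this file; because the admin certificate has O=system:masters, the admin user has full privileges over the cluster (cluster administrator).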

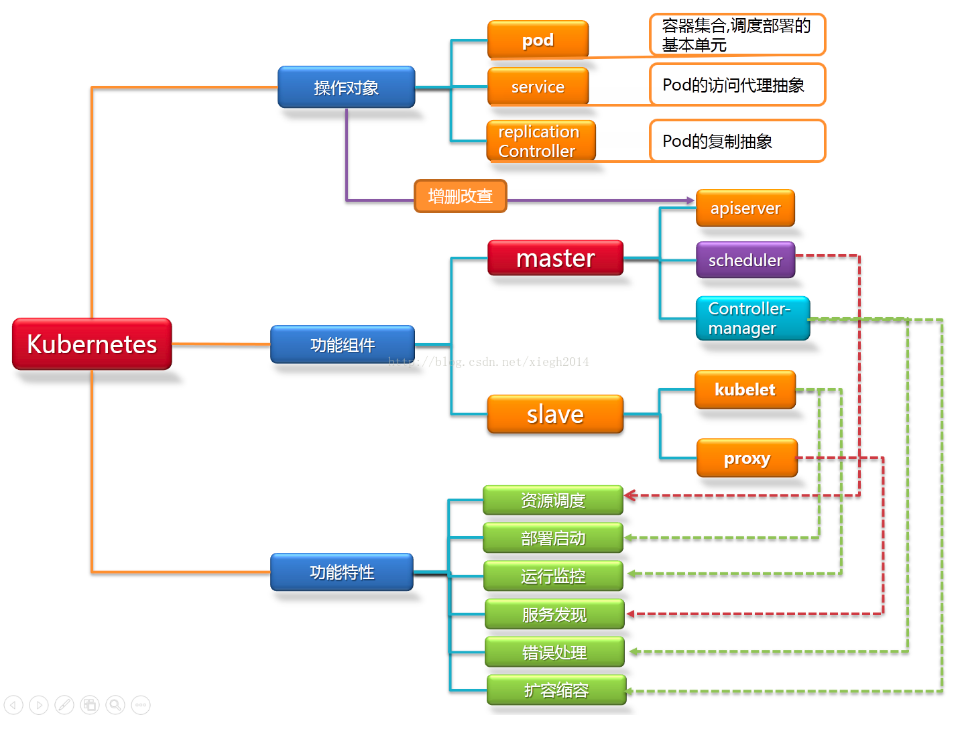

5. Deploy the flannel network

5.1 Create the flannel certificate and private key

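flanneld reads and writes subnet allocations in the etcd cluster, and etcd is accessed over TLS with client certificates, so flannel needs its own certificate and private key. Alternatively, you can skip this step and reuse one of the certificates created above.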

Create the certificate signing request:

cd /opt/k8s/certs

cat >flanneld-csr.json<<EOF

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the certificate and private key:

cfssl gencert -ca=/opt/k8s/certs/ca.pem \

-ca-key=/opt/k8s/certs/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

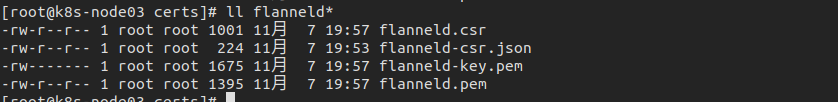

Check the generated certificates.

5.2 Distribute the certificate and private key to all nodes

ansible k8s-all -m copy -a 'src=/opt/k8s/certs/flanneld.pem dest=/etc/kubernetes/ssl/'

ansible k8s-all -m copy -a 'src=/opt/k8s/certs/flanneld-key.pem dest=/etc/kubernetes/ssl/'

5.3 Download and distribute the flanneld binaries

Download flannel:

cd /opt/k8s/

mkdir flannel

wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

tar -xzvf flannel-v0.11.0-linux-amd64.tar.gz -C flannel

Distribute the binaries to all cluster nodes:

ansible k8s-all -m copy -a 'src=/opt/k8s/flannel/flanneld dest=/usr/local/bin/ mode=0755'

ansible k8s-all -m copy -a 'src=/opt/k8s/flannel/mk-docker-opts.sh dest=/usr/local/bin/ mode=0755'

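Notes:

mk-docker-opts.sh writes the Pod subnet assigned to flanneld, as environment variables, into the file given by its -d option (/run/flannel/subnet.env in the unit file below); docker later uses these variables at startup to configure the docker0 bridge;

flanneld communicates with other nodes through the interface that holds the system default route. On nodes with several network interfaces (for example an internal and a public network), use the -iface option to choose the interface, for example -iface=eth1;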

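5.4 Configure flannel

Create the file in /root/k8s, which is where the distribution command below expects it:

cd /root/k8s

cat > flanneld <<EOF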

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379 -etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/flanneld.pem -etcd-keyfile=/etc/kubernetes/ssl/flanneld-key.pem"

EOF

Distribute it to all servers:

ansible k8s-all -m copy -a 'src=/root/k8s/flanneld dest=/etc/kubernetes/config'

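5.5 Create the flanneld systemd unit file

Quote the heredoc delimiter ('EOF') so that $FLANNEL_OPTIONS is written into the unit file literally instead of being expanded (to an empty string) by the current shell:

cat > flanneld.service <<'EOF'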

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/etc/kubernetes/config/flanneld

ExecStart=/usr/local/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/usr/local/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

Distribute it to all servers:

ansible k8s-all -m copy -a 'src=/root/k8s/flanneld.service dest=/usr/lib/systemd/system/'

5.6 Write the cluster Pod network configuration into etcd

etcdctl \

--endpoints="https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379" \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/flanneld.pem \

--key-file=/etc/kubernetes/ssl/flanneld-key.pem \

set /coreos.com/network/config '{"Network":"10.30.0.0/16","SubnetLen": 24, "Backend": {"Type": "vxlan"}}'

Output:

{"Network":"10.30.0.0/16","SubnetLen": 24, "Backend": {"Type": "vxlan"}}

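Note: the Pod network "Network" written here (10.30.0.0/16) is split into one /24 subnet per node (SubnetLen), so it must be larger than /24. If kube-controller-manager is started with --cluster-cidr, that value must match this network; kube-proxy's --cluster-cidr should also be set to this Pod network.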
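The relation between Network and SubnetLen is simple arithmetic: a /16 network split into /24 subnets yields 2^(24-16) = 256 per-node subnets. A hypothetical helper `subnet_capacity` (not part of flannel) that computes this upper bound; flannel may hold some subnets back (see its SubnetMin/SubnetMax network settings), so treat the result as a ceiling:

```bash
#!/usr/bin/env bash
# subnet_capacity NETWORK_PREFIX SUBNET_LEN  (hypothetical helper)
# Prints how many SUBNET_LEN-sized subnets fit into a network with the given prefix length.
subnet_capacity() {
  local prefix=$1 sublen=$2
  if (( sublen <= prefix || sublen > 32 )); then
    echo "SubnetLen must be longer than the network prefix and at most 32" >&2
    return 1
  fi
  echo $(( 1 << (sublen - prefix) ))
}
```

`subnet_capacity 16 24` prints 256, so the 10.30.0.0/16 network above leaves room for at most 256 nodes with a /24 each.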

5.7 Check the Pod network configuration stored in etcd

etcdctl --endpoints="https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379" \

--ca-file=/etc/kubernetes/ssl/ca.pem \

--cert-file=/etc/kubernetes/ssl/flanneld.pem \

--key-file=/etc/kubernetes/ssl/flanneld-key.pem \

get /coreos.com/network/config

{"Network":"10.30.0.0/16","SubnetLen": 24, "Backend": {"Type": "vxlan"}}

5.8 Start and check the flanneld service

Start:

ansible k8s-all -m shell -a 'systemctl daemon-reload && systemctl enable flanneld && systemctl start flanneld'

Check that it started:

ansible k8s-all -m shell -a 'systemctl status flanneld|grep Active'

192.168.85.14 | CHANGED | rc=0 >>

Active: active (running) since Fri 2019-11-08 15:59:06 CST; 1min 8s ago

192.168.85.13 | CHANGED | rc=0 >>

Active: active (running) since Fri 2019-11-08 15:59:06 CST; 1min 8s ago

192.168.85.12 | CHANGED | rc=0 >>

Active: active (running) since Fri 2019-11-08 15:59:07 CST; 1min 7s ago

flanneld started successfully.

5.9 List the allocated Pod subnets (/24)

etcdctl --endpoints="https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379" --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/flanneld.pem --key-file=/etc/kubernetes/ssl/flanneld-key.pem ls /coreos.com/network/subnets

Output:

/coreos.com/network/subnets/10.30.86.0-24

/coreos.com/network/subnets/10.30.77.0-24

/coreos.com/network/subnets/10.30.75.0-24

5.10 Show the node IP and flannel interface details for one Pod subnet

etcdctl --endpoints="https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379" --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/flanneld.pem --key-file=/etc/kubernetes/ssl/flanneld-key.pem get /coreos.com/network/subnets/10.30.75.0-24

Output:

{"PublicIP":"192.168.85.12","BackendType":"vxlan","BackendData":{"VtepMAC":"52:ef:fe:03:d1:52"}}

5.11 Verify that all nodes can reach each other over the Pod network

mkdir -p /opt/k8s/script && vim /opt/k8s/script/ping_flanneld.sh

#!/bin/bash

NODE_IPS=("192.168.85.12" "192.168.85.13" "192.168.85.14")

for node_ip in "${NODE_IPS[@]}"; do

echo ">>> ${node_ip}"

# After flannel is deployed, check that each node has a flannel interface (it may be named flannel0, flannel.0, flannel.1, etc.)

ssh ${node_ip} "/usr/sbin/ip addr show flannel.1 | grep -w inet"

# From each node, ping every flannel interface IP to make sure all of them are reachable

ssh ${node_ip} "ping -c 1 10.30.86.0"

ssh ${node_ip} "ping -c 1 10.30.77.0"

ssh ${node_ip} "ping -c 1 10.30.75.0"

done

chmod +x /opt/k8s/script/ping_flanneld.sh && bash /opt/k8s/script/ping_flanneld.sh

5.12 Configure Docker to use the flannel-assigned subnet (on every node)

cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

Start the services (on every node):

systemctl daemon-reload

systemctl start flanneld

systemctl enable flanneld

systemctl restart docker

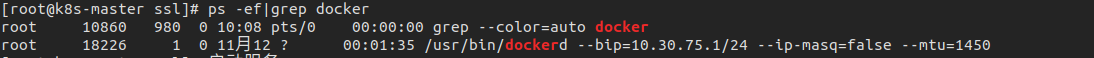

Verify:
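One way to check the result is to compare the --bip value that mk-docker-opts.sh wrote with the address docker0 actually received (`ip -4 addr show docker0`). A hypothetical helper `docker_bip_from_env` (not part of flannel), assuming the env file contains a line of the form DOCKER_NETWORK_OPTIONS=" --bip=... ..."; the exact contents may differ between flannel versions:

```bash
#!/usr/bin/env bash
# docker_bip_from_env FILE  (hypothetical helper)
# Prints the value of the --bip option found in DOCKER_NETWORK_OPTIONS inside FILE.
docker_bip_from_env() {
  local file=$1
  (
    # Source in a subshell so the file's variables do not leak into the caller.
    . "$file"
    # Intentionally unquoted: split the options string into separate words.
    for opt in $DOCKER_NETWORK_OPTIONS; do
      case $opt in
        --bip=*) echo "${opt#--bip=}"; exit 0 ;;
      esac
    done
    exit 1   # no --bip option present
  )
}
```

On each node, `docker_bip_from_env /run/flannel/subnet.env` should print an address inside that node's flannel /24 (such as 10.30.75.1/24), and docker0 should carry the same address.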

6 Deploy the master node

6.1 Download the v1.14 binaries

wget https://storage.googleapis.com/kubernetes-release/release/v1.14.1/kubernetes-server-linux-amd64.tar.gz

tar -xf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin

cp {apiextensions-apiserver,cloud-controller-manager,kube-apiserver,kube-controller-manager,kube-proxy,kube-scheduler,kubeadm,kubectl,kubelet,mounter} /usr/local/bin/

cd /usr/local/bin/

chmod +x *

6.2 Deploy kube-apiserver

6.2.1 Create the token file used by kube-apiserver clients

Create a TLS bootstrapping token:

# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

7ba37c56dd0566aeb2e70db45794875a

vim /etc/kubernetes/config/token.csv

7ba37c56dd0566aeb2e70db45794875a,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
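Each token.csv line has the form token,user,uid,"group", and the same token must later be used as BOOTSTRAP_TOKEN when building bootstrap.kubeconfig in section 7.2. A sketch that wraps the command above into two hypothetical helpers (not from the original guide), so the token is generated once and reused:

```bash
#!/usr/bin/env bash
# gen_bootstrap_token  (hypothetical helper)
# 16 random bytes printed as 32 hex characters, the same recipe as above;
# tr also removes the trailing newline that od prints.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# token_csv_line TOKEN  (hypothetical helper)
# Prints one token.csv line for the kubelet-bootstrap user, matching the example above.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}
```

For example, `TOKEN=$(gen_bootstrap_token)`, then `token_csv_line "$TOKEN" > /etc/kubernetes/config/token.csv`, and reuse $TOKEN as BOOTSTRAP_TOKEN later.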

6.2.2 Create the kubernetes certificate signing request

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.85.12",

"10.30.75.1",

"10.0.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

For a cluster with multiple masters, add every master address (and any load-balancer VIP) to hosts.

6.2.3 Generate the kubernetes certificate and private key

cfssl gencert -ca=/opt/k8s/certs/ca.pem \

-ca-key=/opt/k8s/certs/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

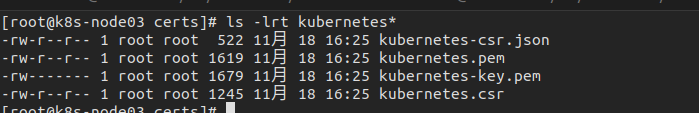

Check the result.

Copy them to the master server:

scp kubernetes* 192.168.85.12:/etc/kubernetes/ssl

6.2.4 Create the kube-apiserver configuration file

cat /etc/kubernetes/config/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.85.12:2379,https://192.168.85.13:2379,https://192.168.85.14:2379 \

--bind-address=192.168.85.12 \

--secure-port=6443 \

--advertise-address=192.168.85.12 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/config/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem"


6.2.5 kube-apiserver systemd unit file

[root@k8s-master ssl]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-apiserver

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Start the service:

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

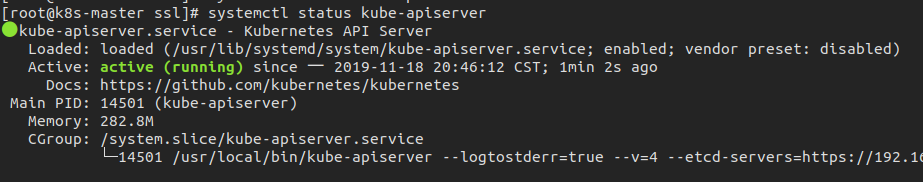

Check the service status.

6.3 Deploy kube-scheduler

6.3.1 Create the kube-scheduler configuration file

vim /etc/kubernetes/config/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"

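Notes on common kube-scheduler flags (the configuration above uses only --master, --leader-elect, and the logging flags; --master points to the apiserver's local insecure port 127.0.0.1:8080):

--address: serve HTTP /metrics requests on 127.0.0.1:10251; this port serves plain HTTP, not HTTPS;

--kubeconfig: path to a kubeconfig file that kube-scheduler uses to connect to and authenticate with kube-apiserver (an alternative to --master);

--leader-elect=true: cluster mode with leader election enabled; the instance elected leader does the work while the others stay on standby.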

6.3.2 Create the kube-scheduler systemd unit file

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-scheduler

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Start the service:

systemctl daemon-reload

systemctl enable kube-scheduler.service

systemctl restart kube-scheduler.service

Verify that the service is running.

6.4 Deploy kube-controller-manager

6.4.1 Create the kube-controller-manager configuration file

vim /etc/kubernetes/config/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem"

6.4.2 Create the kube-controller-manager systemd unit file

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/config/kube-controller-manager

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Start the service:

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

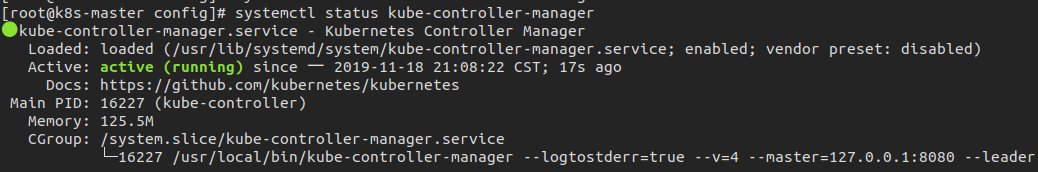

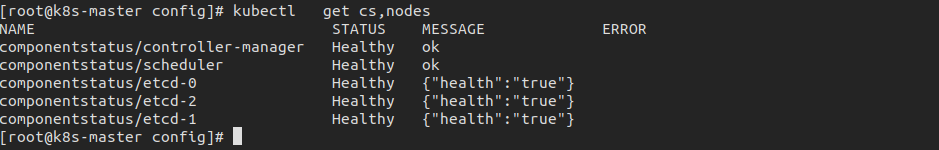

Verify the service.

6.4.3 Check the master component status

kubectl get componentstatuses

7 Deploy the worker nodes

Kubernetes worker nodes run the following components:

docker (already deployed above)

kubelet

kube-proxy

7.1 Deploy kubelet

kubelet runs on each worker node. It maintains the running Pods and provides the Kubernetes runtime environment. Its main responsibilities are:

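1. Watch the Pods assigned to its node

2. Mount the volumes the Pods need

3. Download the Pods' secrets

4. Run the Pods' containers through docker/rkt

5. Periodically run the liveness probes defined for the containers

6. Report Pod status to the other cluster components

7. Report the node's status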

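1. The following steps deploy worker-node components. On the master, the files are only prepared and then distributed to each worker node.

2. If the master should also join the cluster as a node, docker, kubelet, and kube-proxy must be deployed on the master as well.

Copy the kubelet and kube-proxy binaries to the worker nodes: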

scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.85.13:/usr/local/bin/

scp kubernetes/server/bin/{kube-proxy,kubelet} 192.168.85.14:/usr/local/bin/

(This was already done in section 4.2.)

7.2 Create the kubelet bootstrap kubeconfig file

cat environment.sh

# Create the kubelet bootstrapping kubeconfig

BOOTSTRAP_TOKEN=7ba37c56dd0566aeb2e70db45794875a

KUBE_APISERVER="https://192.168.85.12:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client credentials

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

# Set the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Run the script to generate bootstrap.kubeconfig:

[root@k8s-master node]# bash environment.sh

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

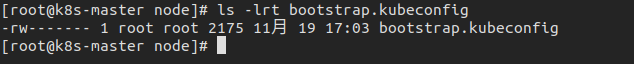

Check that the file was created.

7.3 Create the kubelet.kubeconfig file

vim envkubelet.kubeconfig.sh

# Create a kubeconfig for kubelet (authenticates with the bootstrap token)

BOOTSTRAP_TOKEN=7ba37c56dd0566aeb2e70db45794875a

KUBE_APISERVER="https://192.168.85.12:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet.kubeconfig

# Set the client credentials

kubectl config set-credentials kubelet \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=kubelet.kubeconfig

# Set the context

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet \

--kubeconfig=kubelet.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kubelet.kubeconfig

Run the script to generate kubelet.kubeconfig:

[root@k8s-master node]# bash envkubelet.kubeconfig.sh

Cluster "kubernetes" set.

User "kubelet" set.

Context "default" created.

Switched to context "default".

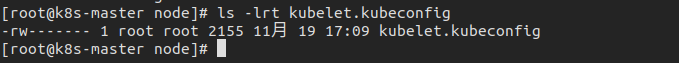

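Check the generated kubelet.kubeconfig. Note that this file is not among those copied to the nodes below: once a node's CSR is approved (section 7.6), kubelet writes its own certificate-based kubeconfig to its --kubeconfig path.

7.4 Create the kube-proxy kubeconfig file

7.4.1 Create the kube-proxy certificate

Create the kube-proxy certificate signing request: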

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the certificate and private key:

cfssl gencert -ca=/opt/k8s/certs/ca.pem \

-ca-key=/opt/k8s/certs/ca-key.pem \

-config=/opt/k8s/certs/ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

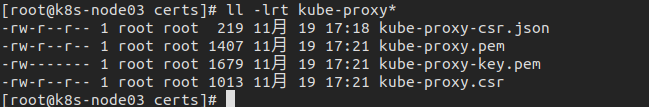

Check the result.

Copy them to the server where the kube-proxy kubeconfig file will be created (the master):

[root@k8s-node03 certs]# scp kube-proxy* 192.168.85.12:/etc/kubernetes/ssl

7.4.2 Create the kube-proxy kubeconfig file

cat env_proxy.sh

# Create the kube-proxy kubeconfig file

BOOTSTRAP_TOKEN=7ba37c56dd0566aeb2e70db45794875a

KUBE_APISERVER="https://192.168.85.12:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Run the script to generate kube-proxy.kubeconfig:

[root@k8s-master node]# bash env_proxy.sh

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" modified.

Switched to context "default".

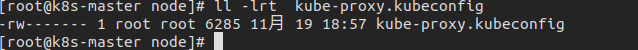

Check the result.

Copy bootstrap.kubeconfig and kube-proxy.kubeconfig to all worker nodes:

scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.85.13:/etc/kubernetes/config

scp -rp bootstrap.kubeconfig kube-proxy.kubeconfig 192.168.85.14:/etc/kubernetes/config

7.5 Create the kubelet configuration files and copy them to all worker nodes

7.5.1 Create the kubelet configuration template

cat /etc/kubernetes/config/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 192.168.85.13

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDomain: cluster.local.

failSwapOn: false

authentication:

  anonymous:

    enabled: true

Set address to each node's own IP (192.168.85.13 on k8s-node01, 192.168.85.14 on k8s-node02).

7.5.2 Create the kubelet options file (set --hostname-override to each node's own IP)

cat /etc/kubernetes/config/kubelet

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=192.168.85.13 \

--kubeconfig=/etc/kubernetes/config/kubelet.kubeconfig \

--bootstrap-kubeconfig=/etc/kubernetes/config/bootstrap.kubeconfig \

--config=/etc/kubernetes/config/kubelet.config \

--cert-dir=/etc/kubernetes/ssl \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

7.5.3 Create the kubelet systemd unit file

cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/config/kubelet

ExecStart=/usr/local/bin/kubelet $KUBELET_OPTS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

7.5.4 Bind the kubelet-bootstrap user to the system cluster role

Run on the master:

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

Output:

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

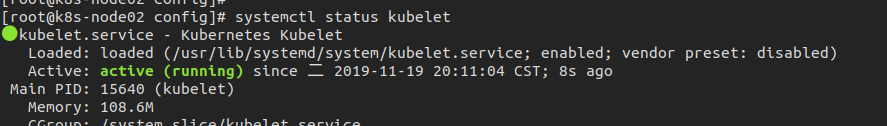

7.5.5 Start the kubelet service

Run on each worker node:

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

Check the result.

7.6 Approve the kubelet CSRs

Run on the master:

[root@k8s-master bin]# kubectl get csr

[root@k8s-master bin]# kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve

certificatesigningrequest.certificates.k8s.io/node-csr-l0kHoPMfyv3HWpyFH6GJWIAY8SkYv5BAOZRKfgS-Pjw approved

certificatesigningrequest.certificates.k8s.io/node-csr-nOvG_-P05HDjAn0CpK3pfEKcCMznSQVtbkPTjQBbhkg approved

[root@k8s-master bin]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-l0kHoPMfyv3HWpyFH6GJWIAY8SkYv5BAOZRKfgS-Pjw 2m45s kubelet-bootstrap Approved,Issued

node-csr-nOvG_-P05HDjAn0CpK3pfEKcCMznSQVtbkPTjQBbhkg 2m50s kubelet-bootstrap Approved,Issued

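The CSRs are done once their condition changes to Approved,Issued.

Notes:

Requesting User: the user who submitted the CSR; kube-apiserver authenticates and authorizes it;

Subject: the information of the certificate being requested;

The certificate's CN is system:node:<node name> (system:node:192.168.85.13 here, because of --hostname-override) and its Organization is system:nodes; the Node authorization mode of kube-apiserver grants the corresponding permissions to this certificate.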

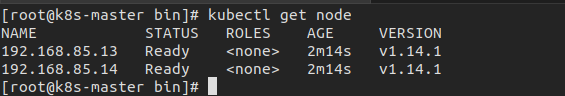
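The approval pipeline in 7.6 can be made a little stricter. Below is a sketch that assumes CONDITION is the last column of `kubectl get csr` output, as in the output shown here. The hypothetical pending_csr_names function skips the header line and prints only the names of Pending CSRs.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read `kubectl get csr` output on stdin and print the
# NAME of every CSR whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve`. The `-r` flag of GNU xargs skips running `kubectl certificate approve` with no arguments when nothing is pending.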

7.7 Check the cluster status

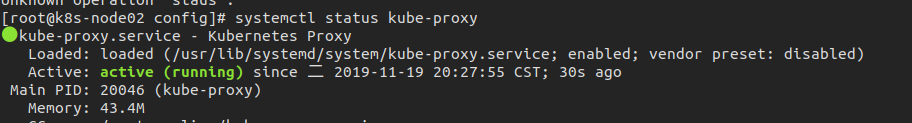

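7.8 Deploy the kube-proxy component on the nodes

kube-proxy runs on every node. It watches the apiserver for changes to Services and Endpoints, and creates forwarding rules that load-balance traffic across each Service's backends.

7.8.1 Create the kube-proxy options file (on all nodes; set --hostname-override to each node's own IP)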

cat /etc/kubernetes/config/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.85.13 \
--cluster-cidr=10.0.0.0/24 \
--kubeconfig=/etc/kubernetes/config/kube-proxy.kubeconfig"

7.8.2 Create the kube-proxy systemd unit file

cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/config/kube-proxy

ExecStart=/usr/local/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

7.8.3 Start the kube-proxy service

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

Check that it started:

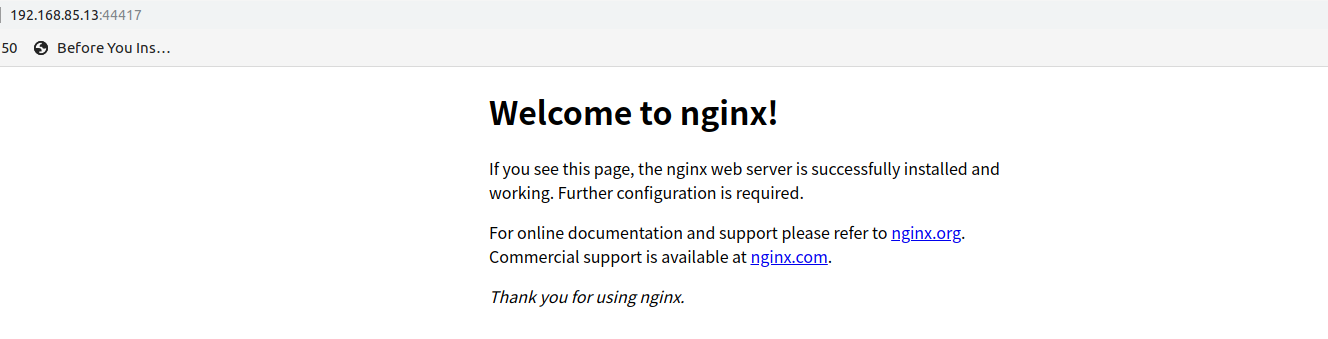

8 Create a deployment with kubectl run

8.1 Create an instance

kubectl run nginx --image=nginx:1.10.0

Check the created pods:

kubectl get pods -o wide

8.2 Expose the port with kubectl expose

kubectl expose deployment nginx --port 80 --type LoadBalancer

[root@k8s-master bin]# kubectl get services -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 23h <none>

nginx LoadBalancer 10.0.0.48 <pending> 80:44417/TCP 6s run=nginx
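No cloud load balancer is available here, so EXTERNAL-IP stays <pending>. The Service still gets a NodePort (44417 above) that is reachable on every node's IP. Below is a sketch of a hypothetical node_port_of helper that extracts that port from `kubectl get services` output. It assumes the default column order shown above and reads only the first port mapping.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get services` output on stdin and print
# the NodePort of the named Service. Assumes PORT(S) is column 5 and has the
# form "port:nodePort/proto"; only the first mapping is used.
node_port_of() {
  local svc="$1"
  awk -v svc="$svc" '$1 == svc && $5 ~ /:/ { split($5, p, "[/:,]"); print p[2] }'
}
```

For example, `curl http://192.168.85.13:$(kubectl get services | node_port_of nginx)`. kubectl can also return the port directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.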

Check the nginx service:

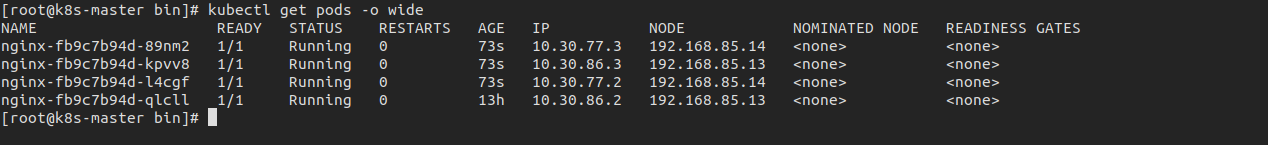

8.3 Scale the application with kubectl scale

[root@k8s-master bin]# kubectl scale deployments/nginx --replicas=4

deployment.extensions/nginx scaled

Check the scaling result:

8.4 Update the application image with a rolling update

[root@k8s-master bin]# kubectl set image deployments/nginx nginx=qihao/nginx

deployment.extensions/nginx image updated

Confirm the update:

kubectl rollout status deployments/nginx

Roll back to the previous version:

kubectl rollout undo deployments/nginx

9 Deploying the Kubernetes Dashboard

9.1 Modify the configuration files

After extracting the downloaded kubernetes-server-linux-amd64.tar.gz, extract the kubernetes-src.tar.gz file it contains.

cd /root/k8s/kubernetes

mkdir -p tmp

tar -xzvf kubernetes-src.tar.gz -C tmp/

The dashboard manifests are in cluster/addons/dashboard:

cd /root/k8s/kubernetes/tmp/cluster/addons/dashboard

In dashboard-controller.yaml, replace the default k8s.gcr.io image with the Aliyun mirror:

spec:
  priorityClassName: system-cluster-critical
  containers:
  - name: kubernetes-dashboard
    # image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
    image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

Modify the Service definition and set its type to NodePort:

cat dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

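9.2 Start the dashboard from the YAML files

The following binding gives anonymous (unauthenticated) requests full cluster-admin rights, so it is only suitable for a test cluster:

kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous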

[root@k8s-master dashboard]# ll *yaml

-rw-rw-r-- 1 root root 264 Apr 6 2019 dashboard-configmap.yaml

-rw-rw-r-- 1 root root 1925 Nov 26 17:35 dashboard-controller.yaml

-rw-rw-r-- 1 root root 1353 Apr 6 2019 dashboard-rbac.yaml

-rw-rw-r-- 1 root root 551 Apr 6 2019 dashboard-secret.yaml

-rw-rw-r-- 1 root root 340 Nov 26 16:45 dashboard-service.yaml

Then run:

kubectl apply -f .

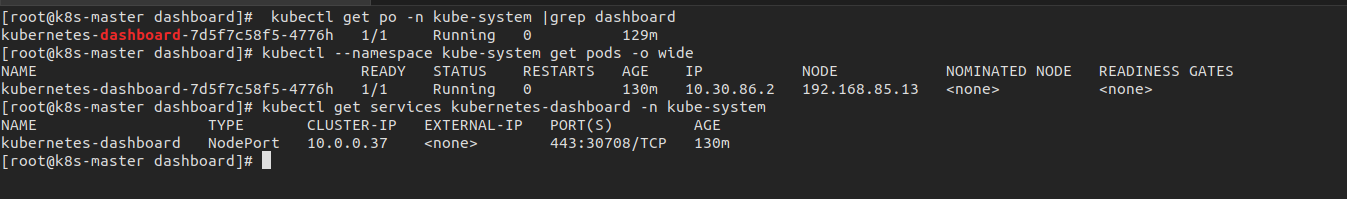

9.3 Verify the dashboard service and check the pod status

9.4 Create a cluster administrator account

9.4.1 Create a service account

[root@k8s-master dashboard]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

9.4.2 Create the cluster role binding

[root@k8s-master dashboard]# kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-cluster-admin created

9.4.3 Get the user's login token

[root@k8s-master dashboard]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-xm9cj

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 44a1c5c7-10f3-11ea-a564-5254006ea49d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1363 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4teG05Y2oiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDRhMWM1YzctMTBmMy0xMWVhLWE1NjQtNTI1NDAwNmVhNDlkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.QpJR5e92fCoZzSC7lEB4L0Vex3cZ2kRohpypW7XDC44rctGOPJpOej033cbrGqMf3e3JB-VcqggIt34pGaOOKcyEaPW1zt-PTG7HXcwoeBtLvVEgxbaizkYIp7YQW7807umFjC5jN7zaMc9f0wnG3-i7Rja7VYK9wCbWDNJ0FB1mBR5PPZcNIG7W10h455Vmc7NoxaY7akIrv97fspjOIFAPlHLee_659wQ1aKfkEWaFt34SingoHcMXGM41NaYFL8KdQE-9p0B_OTkN04SIenZtxgBMf21tZdDMraCB6R27nN5MECW6WEy84F30HYUvojChKIE_71EGfuU5Jhd9cA
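Scripting the login requires only the token value, not the whole describe output. Below is a sketch of a hypothetical extract_token filter for the `describe secret` output shown above.

```bash
#!/usr/bin/env bash
# Hypothetical filter: read `kubectl describe secret` output on stdin and
# print the second field of the line whose first field is "token:".
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Usage: `kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep dashboard-admin | awk '{print $1}') | extract_token`. Secret data is base64-encoded, so the same value can also be read with `kubectl -n kube-system get secret <secret-name> -o jsonpath='{.data.token}' | base64 -d`.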

9.5 Log in to the dashboard to verify the service

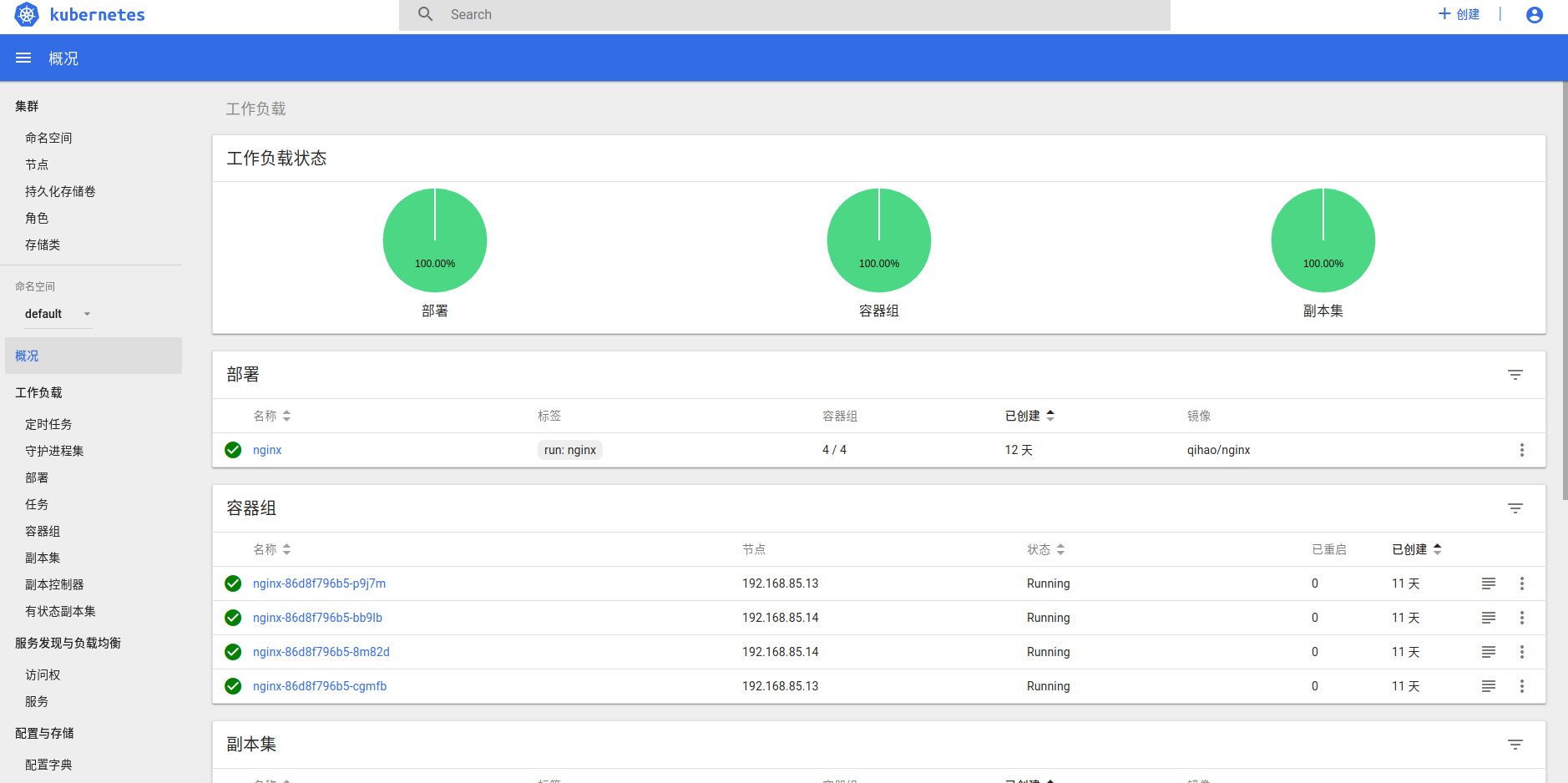

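There are two ways to log in; here we use the token. Paste the token obtained above into the login page, and the login succeeds as shown in the figure below.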

9.6 Uninstall the dashboard add-on

kubectl delete -f dashboard-configmap.yaml

kubectl delete -f dashboard-controller.yaml

kubectl delete -f dashboard-rbac.yaml

kubectl delete -f dashboard-secret.yaml

kubectl delete -f dashboard-service.yaml
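The five delete commands can be wrapped in one loop. Below is a sketch of a hypothetical uninstall_dashboard function. The `--ignore-not-found` flag of `kubectl delete` makes it safe to re-run when some objects are already gone.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete the objects defined by the five dashboard
# manifests. The manifest directory defaults to the current directory.
uninstall_dashboard() {
  local dir="${1:-.}" f
  for f in dashboard-configmap.yaml dashboard-controller.yaml \
           dashboard-rbac.yaml dashboard-secret.yaml dashboard-service.yaml; do
    # --ignore-not-found: do not fail if an object was already removed
    kubectl delete -f "${dir}/${f}" --ignore-not-found
  done
}
```

Run it from cluster/addons/dashboard, or pass that directory as the first argument. The bindings and service account created in 9.2 and 9.4 are not defined in these files. Remove them separately, e.g. `kubectl delete clusterrolebinding system:anonymous dashboard-cluster-admin` and `kubectl -n kube-system delete serviceaccount dashboard-admin`.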
