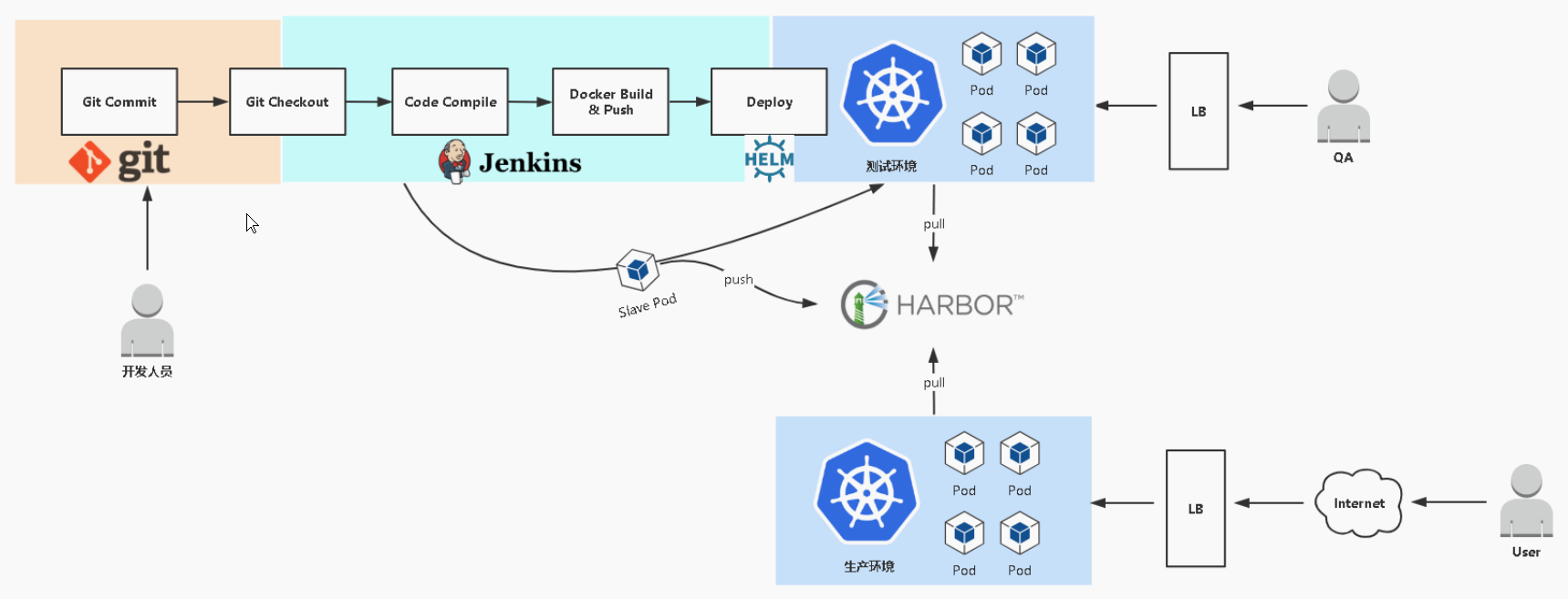

1 Release workflow design

2 Prepare the base environment

2.1 Source code repository: GitLab

Deploy GitLab:

docker run -d \
  --name gitlab \
  -p 8443:443 \
  -p 9999:80 \
  -p 9998:22 \
  -v $PWD/config:/etc/gitlab \
  -v $PWD/logs:/var/log/gitlab \
  -v $PWD/data:/var/opt/gitlab \
  -v /etc/localtime:/etc/localtime \
  lizhenliang/gitlab-ce-zh:latest

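Access URL: http://IP:9999

On the first visit you are asked to set the administrator password. After that, log in as the default administrator user root with the password you just set.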

### 1.2 Create a project and commit test code

https://github.com/lizhenliang/simple-microservice

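Branch overview:

- dev1: delivered code

- dev2: Dockerfiles for building the images

- dev3: Kubernetes resource manifests

- dev4: adds microservice tracing and monitoring

- master: final release

Clone the dev3 branch and push it to the private repository: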

git clone -b dev3 https://github.com/lizhenliang/simple-microservice

git clone http://192.168.10.20:8088/root/ms.git

cp -rf simple-microservice/* ms

cd ms

git add .

git config --global user.email "you@example.com"

git config --global user.name "Your Name"

git commit -m 'all'

git push origin master

2.2 Image registry: Harbor

Install Docker and docker-compose

# wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# yum install docker-ce -y

# systemctl start docker

# systemctl enable docker

curl -L https://github.com/docker/compose/releases/download/1.25.0/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose
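The download URL above is assembled from the backtick substitutions `uname -s` and `uname -m`. As a rough sketch of what the shell expands it to on the current machine (`compose_url` is a hypothetical helper name used only for illustration, not something Docker Compose ships):

```shell
# Sketch: print the docker-compose download URL for a given release.
# compose_url is a hypothetical helper, not part of Docker Compose.
compose_url() {
    version="$1"
    # uname -s prints the kernel name (e.g. Linux); uname -m prints the machine type (e.g. x86_64)
    printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
        "$version" "$(uname -s)" "$(uname -m)"
}

# Show the URL that the curl command in this article would request
compose_url 1.25.0
```

Printing the URL first makes it easy to check that a binary for your platform actually exists on the release page before downloading.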

Extract the offline installer and deploy

# tar zxvf harbor-offline-installer-v1.9.1.tgz

# cd harbor

# vi harbor.yml

hostname: 192.168.10.20

port: 8081

# ./prepare

# ./install.sh --with-chartmuseum

# docker-compose ps

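The --with-chartmuseum flag enables chart storage in Harbor.

Configure Docker to trust the registry

Because Harbor is not configured with HTTPS here, Docker must also be told to treat it as an insecure (trusted) registry.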

# cat /etc/docker/daemon.json

{
  "registry-mirrors": ["http://f1361db2.m.daocloud.io"],
  "insecure-registries": ["192.168.10.20:8081"]
}

# systemctl restart docker
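A malformed daemon.json stops Docker from starting, so it can help to generate the file into a scratch location and review it before copying it to /etc/docker/daemon.json. The following is only a sketch under that assumption; `render_daemon_json` is a hypothetical helper, and the mirror URL and registry address are the ones used in this article.

```shell
# Sketch: render daemon.json content for a registry that is served over plain HTTP.
# render_daemon_json is a hypothetical helper; adjust the addresses to your environment.
render_daemon_json() {
    registry="$1"
    cat <<EOF
{
  "registry-mirrors": ["http://f1361db2.m.daocloud.io"],
  "insecure-registries": ["${registry}"]
}
EOF
}

# Write to a scratch file first; copy it to /etc/docker/daemon.json after reviewing it.
out="$(mktemp)"
render_daemon_json "192.168.10.20:8081" > "$out"
cat "$out"
```

The value in insecure-registries has to match the host:port used in image names, for example 192.168.10.20:8081/library/jenkins-slave-jdk:1.8.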

2.3 Application package manager: Helm

Install the Helm CLI

# wget https://get.helm.sh/helm-v3.0.0-linux-amd64.tar.gz

# tar zxvf helm-v3.0.0-linux-amd64.tar.gz

# mv linux-amd64/helm /usr/bin/

Configure chart repository mirrors hosted in China

# helm repo add stable http://mirror.azure.cn/kubernetes/charts

# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

# helm repo list

Install the push plugin

# helm plugin install https://github.com/chartmuseum/helm-push

If the download fails, you can instead extract the package bundled with the course materials:

# tar zxvf helm-push_0.7.1_linux_amd64.tar.gz

# mkdir -p /root/.local/share/helm/plugins/helm-push

# chmod +x bin/*

# mv bin plugin.yaml /root/.local/share/helm/plugins/helm-push

Add the repo

# helm repo add --username admin --password Harbor12345 myrepo http://192.168.31.70/chartrepo/library

Push and install a chart

# helm push mysql-1.4.0.tgz --username=admin --password=Harbor12345 http://192.168.31.70/chartrepo/library

# helm install web --version 1.4.0 myrepo/demo

Microservice database: MySQL

# yum install mariadb-server -y

# mysqladmin -uroot password '123456'

Or run it with Docker:

docker run -d --name db -p 3306:3306 -v /opt/mysql:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7 --character-set-server=utf8

Finally, import the microservice databases.

2.4 Kubernetes PV dynamic provisioning

First prepare an NFS server to provide storage for Kubernetes.

# yum install nfs-utils

# vi /etc/exports

/ifs/kubernetes *(rw,no_root_squash)

# mkdir -p /ifs/kubernetes

# systemctl start nfs

# systemctl enable nfs

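Also install the nfs-utils package on every Node; it is needed to mount the NFS share.

Kubernetes has no built-in dynamic provisioner for NFS, so you also need to install the nfs-client-provisioner plugin shown in the figure above: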

# cd nfs-client

# vi deployment.yaml # change the NFS server address and shared directory to your own

# kubectl apply -f .

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-df88f57df-bv8h7 1/1 Running 0 49m

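2.5 Continuous integration: Jenkins

The default plugin update site is hosted overseas and often cannot be reached reliably, so point Jenkins at a mirror in China: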

cd jenkins_home/updates

sed -i 's/http:\/\/updates.jenkins-ci.org\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && \
sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json
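To see what the two substitutions do without touching a real Jenkins home, the sketch below applies them to a tiny sample file. The sample JSON is invented for illustration (the real default.json is far larger), and `|` is used as the sed delimiter so the slashes in the URLs need no escaping.

```shell
# Sketch: apply the same two substitutions to a small, made-up sample file.
workdir="$(mktemp -d)"
cat > "$workdir/default.json" <<'EOF'
{"connectionCheckUrl":"http://www.google.com/","url":"http://updates.jenkins-ci.org/download/plugins/git/4.0.0/git.hpi"}
EOF

# Write to a new file and move it into place instead of using sed -i,
# which behaves differently between GNU and BSD sed.
sed -e 's|http://updates.jenkins-ci.org/download|https://mirrors.tuna.tsinghua.edu.cn/jenkins|g' \
    -e 's|http://www.google.com|https://www.baidu.com|g' \
    "$workdir/default.json" > "$workdir/default.json.new"
mv "$workdir/default.json.new" "$workdir/default.json"

cat "$workdir/default.json"
```

The first rule redirects plugin downloads to the mirror; the second replaces the connectivity-check URL that Jenkins probes before installing plugins.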

Generate the kubeconfig file

# vim admin-csr.json

{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2. Create the kubeconfig file

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --server=https://192.168.31.61:6443 \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --kubeconfig=config

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=config

# Set the default context
kubectl config use-context default --kubeconfig=config

# Set the client authentication parameters
kubectl config set-credentials cluster-admin \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --client-key=admin-key.pem \
  --client-certificate=admin.pem \
  --kubeconfig=config
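The kubectl config commands read ca.pem, admin.pem and admin-key.pem from the current directory and embed them into the config file. A small preflight check can catch a missing certificate before a half-written kubeconfig is produced. This is only a sketch; `check_kubeconfig_inputs` is a hypothetical helper, not a kubectl feature.

```shell
# Sketch: confirm the certificate files used by the kubectl config commands exist.
# check_kubeconfig_inputs is a hypothetical helper, not part of kubectl.
check_kubeconfig_inputs() {
    dir="$1"
    missing=0
    for f in ca.pem admin.pem admin-key.pem; do
        # -s is true only when the file exists and is not empty
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Check the current directory before running the kubectl config commands
check_kubeconfig_inputs . || echo "generate the certificates with cfssl first"
```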

3 Build the microservice release platform

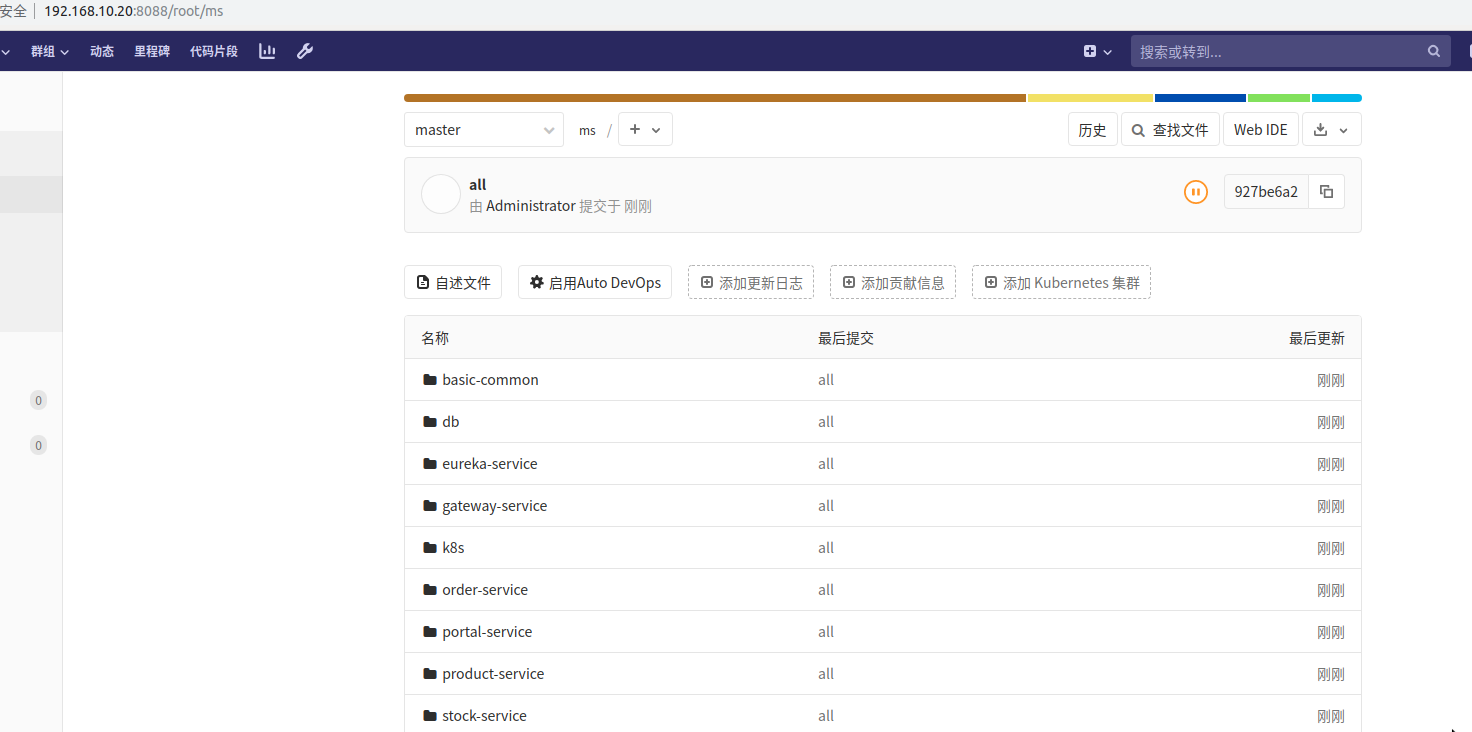

3.1 Push the code to GitLab

# At the time, this code was kept on the k8s master node

git config --global user.name "root"

git config --global user.email "root@example.com"

cd simple-microservice-dev3

find ./ -name target | xargs rm -fr # remove output from previous builds

git init

git remote add origin http://192.168.10.20:8088/root/ms.git

git add .

git commit -m 'all'

git push origin master

As the screenshot shows, the push succeeded.
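The cleanup step before `git init` pipes `find` output into `xargs`, which splits on whitespace and can misbehave on paths containing spaces. A possible alternative, shown here on an invented directory layout rather than the real repository, lets `find` run `rm` itself:

```shell
# Sketch: remove Maven target directories without relying on xargs word splitting.
# The directory layout below is made up for the demonstration.
demo="$(mktemp -d)"
mkdir -p "$demo/gateway-service/target/classes" \
         "$demo/order service/target" \
         "$demo/order service/src"

# -type d limits matches to directories; -prune stops find from descending into
# a directory that is about to be deleted; -exec ... {} + passes paths safely.
find "$demo" -type d -name target -prune -exec rm -rf {} +

# List what is left: the src directory survives, the target directories are gone
find "$demo" -type d | sort
```

Adding a .gitignore entry for target/ would keep build output out of the repository in the first place.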

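3.2 Install the MySQL service

This lab reuses the database set up earlier.

https://devopstack.cn/k8s/1878.html

3.3 Eureka (service registry)

This lab also reuses the instance set up earlier.

https://devopstack.cn/k8s/1878.html

3.4 Install Jenkins plugins

Go to Manage Jenkins -> System Configuration -> Manage Plugins, search for Git Parameter, Git, Pipeline, kubernetes and Config File Provider in turn, then select each one and click install.

Git Parameter: parameterized builds based on Git branches and tags

Extended Choice Parameter: multi-select checkboxes for parameterized builds

Git: checks out the code

Pipeline: pipeline support

kubernetes: connects to Kubernetes and creates Slave agents dynamically

Config File Provider: stores the kubeconfig file that kubectl uses to connect to the k8s cluster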

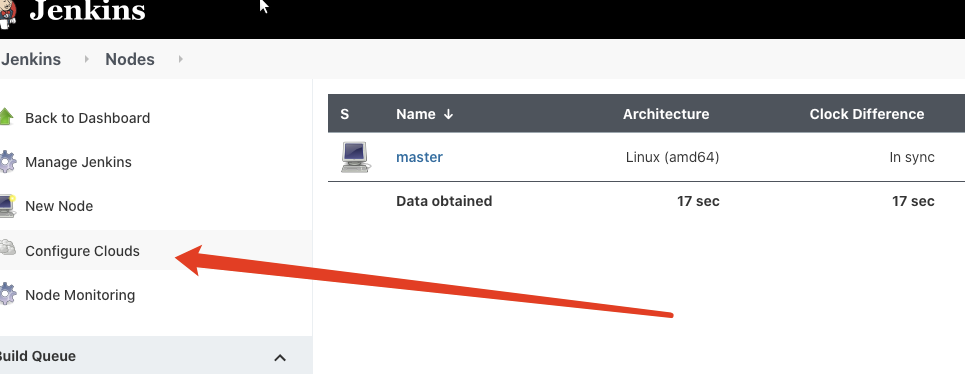

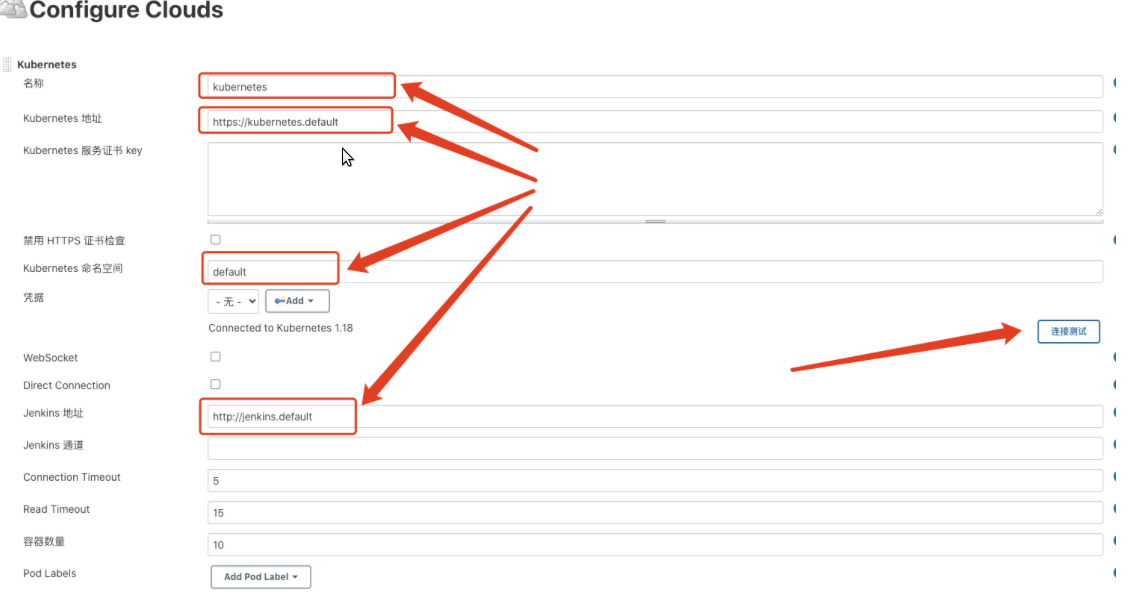

3.5 Configure Kubernetes in Jenkins

3.6 Build the jenkins-slave image

[root@gitlab jenkins-slave]# cd jenkins-slave

[root@gitlab jenkins-slave]# ll

total 83292
-rw-r--r-- 1 root root      435 Dec 26  2019 Dockerfile
-rwxr-xr-x 1 root root 37818368 Nov 13  2019 helm
-rw-r--r-- 1 root root     1980 Nov 24  2019 jenkins-slave
-rwxr-xr-x 1 root root 46677376 Dec 26  2019 kubectl
-rw-r--r-- 1 root root    10409 Nov 24  2019 settings.xml
-rw-r--r-- 1 root root   770802 Nov 24  2019 slave.jar

[root@gitlab jenkins-slave]# cat Dockerfile

FROM centos:7
LABEL maintainer lizhenliang

RUN yum install -y java-1.8.0-openjdk maven curl git libtool-ltdl-devel && \
    yum clean all && \
    rm -rf /var/cache/yum/* && \
    mkdir -p /usr/share/jenkins

COPY slave.jar /usr/share/jenkins/slave.jar
COPY jenkins-slave /usr/bin/jenkins-slave
COPY settings.xml /etc/maven/settings.xml
RUN chmod +x /usr/bin/jenkins-slave
COPY helm kubectl /usr/bin/

ENTRYPOINT ["jenkins-slave"]

docker build -t 192.168.10.20:8081/library/jenkins-slave-jdk:1.8 .

docker push 192.168.10.20:8081/library/jenkins-slave-jdk:1.8

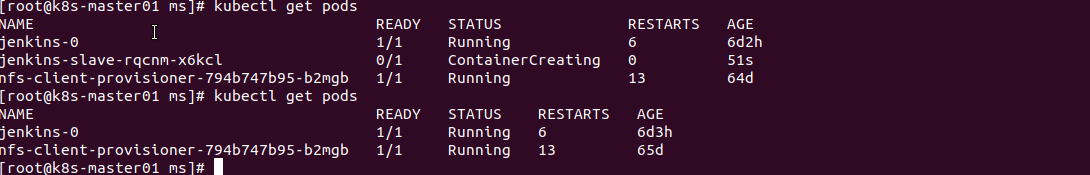

3.7 jenkins-slave build example

The jenkins-slave agents are created dynamically as Kubernetes pods:

pipeline {
    agent {
        kubernetes {
            label 'jenkins-slave'
            yaml """
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: jnlp
    image: "192.168.10.20:8081/library/jenkins-slave-jdk:1.8"
"""
        }
    }
    stages {
        stage('Build') {
            steps {
                echo "hello"
            }
        }
        stage('test') {
            steps {
                echo "hello"
            }
        }
        stage('deploy') {
            steps {
                echo "hello"
            }
        }
    }
}

4 Releasing Spring Cloud microservices with a pipeline and Helm

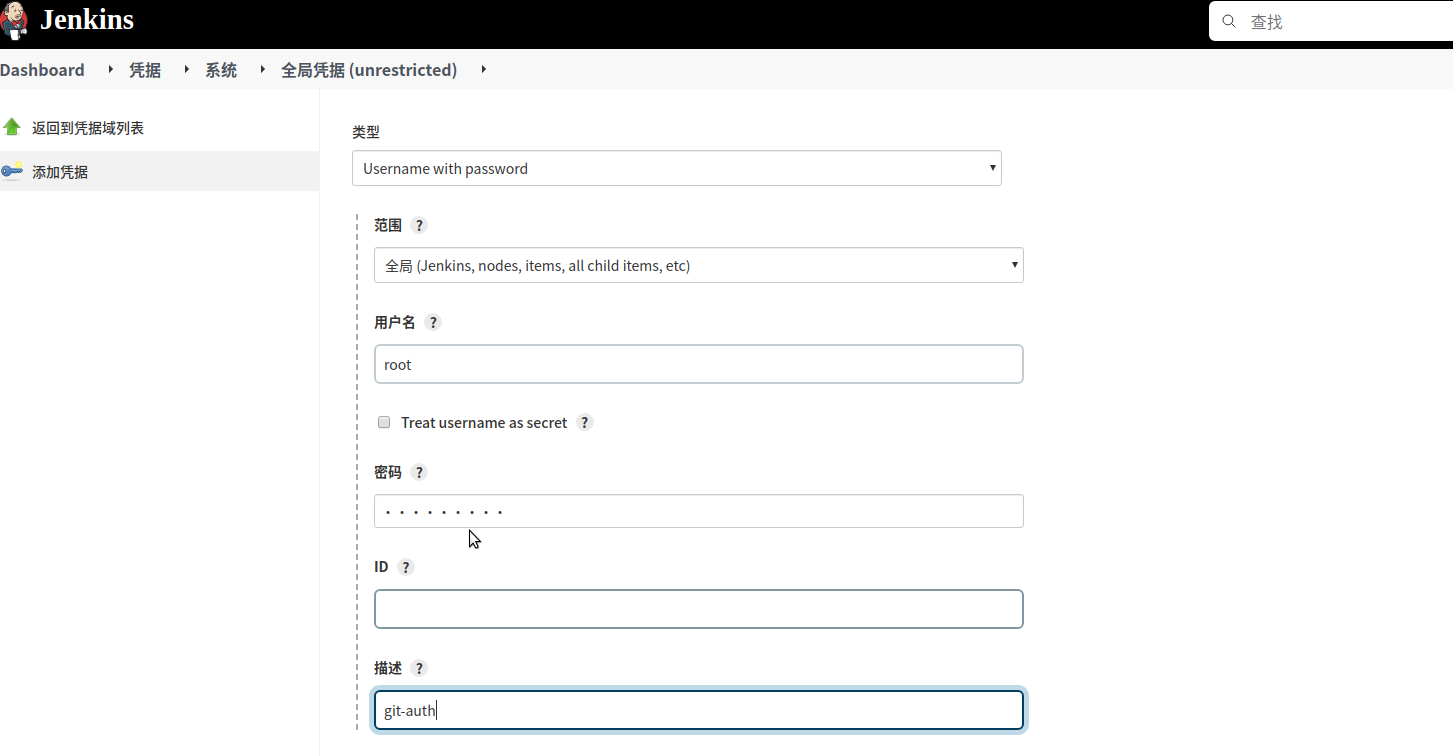

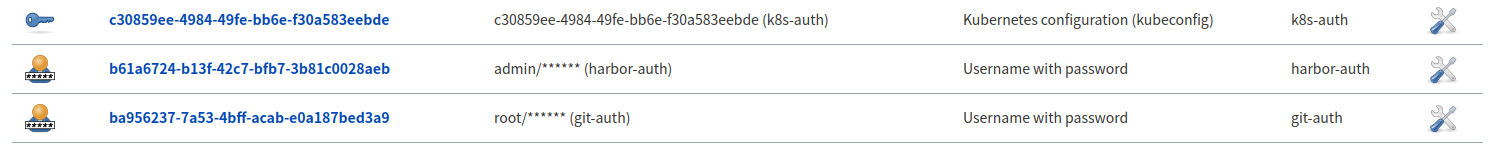

4.1 Create the GitLab credential

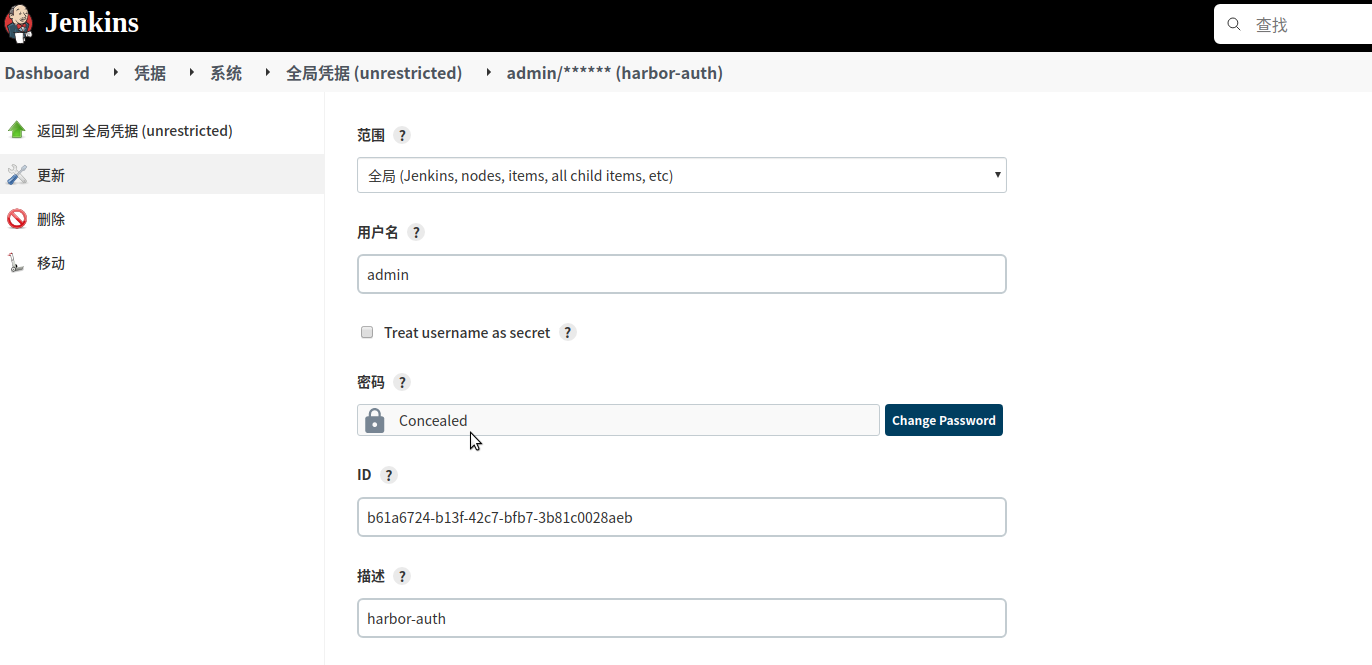

4.2 Create the Harbor credential

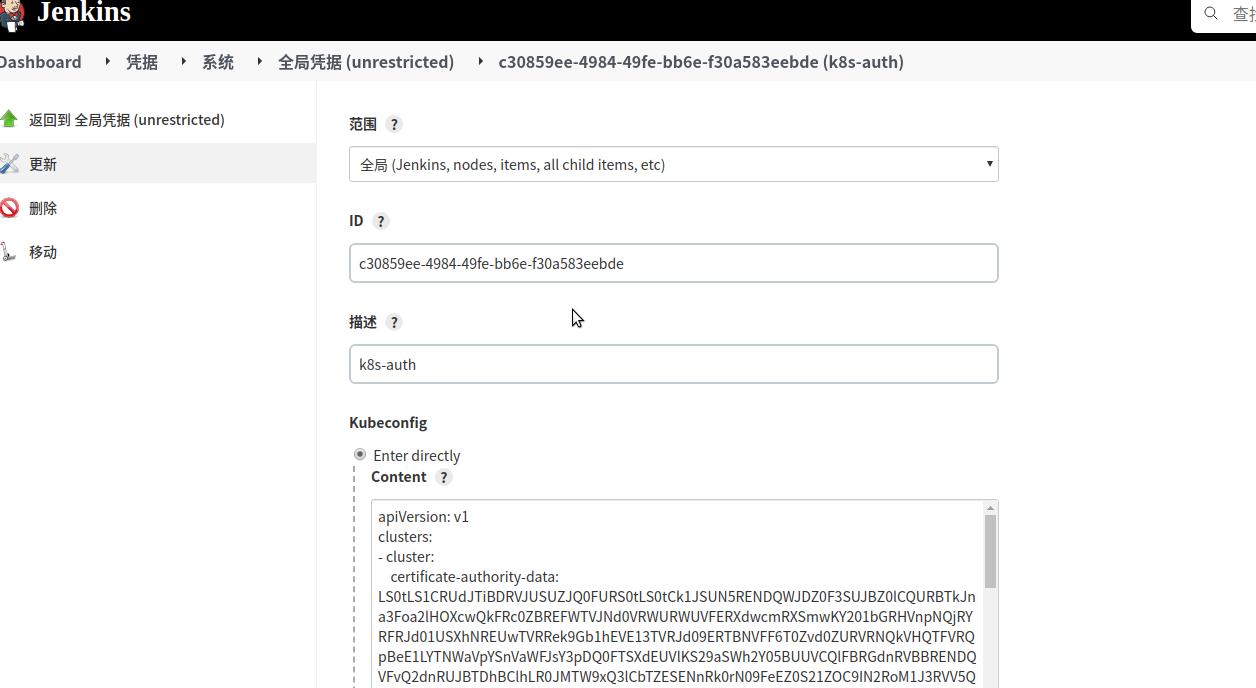

4.3 Kubernetes kubeconfig credential

[root@k8s-master01 order-service-biz]# cat ~/.kube/config

As the screenshot below shows, the required credentials have now been created.

4.4 Write the chart templates and add them to the repo

[root@k8s-master01 ms]# tree

.

├── Chart.yaml

├── templates

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── ingress.yaml

│ ├── NOTES.txt

│ └── service.yaml

└── values.yaml

[root@k8s-master01 ms]# cat Chart.yaml

apiVersion: v2

appVersion: 0.1.0

description: microservice

name: ms

type: application

version: 0.1.0

[root@k8s-master01 ms]# cat values.yaml

env:
  JAVA_OPTS: -Xmx1g
image:
  pullPolicy: IfNotPresent
  repository: lizhenliang/java-demo
  tag: latest
imagePullSecrets: []
ingress:
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 100m
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
  enabled: false
  host: example.ctnrs.com
  tls: []
nodeSelector: {}
replicaCount: 3
resources:
  limits:
    cpu: 1000m
    memory: 1Gi
  requests:
    cpu: 100m
    memory: 128Mi
service:
  port: 80
  targetPort: 80
  type: ClusterIP
tolerations: []

cat templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      {{- include "demo.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      labels:
        {{- include "demo.selectorLabels" . | nindent 8 }}
    spec:
    {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
    {{- end }}
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          env:
          {{- range $k, $v := .Values.env }}
            - name: {{ $k }}
              value: {{ $v | quote }}
          {{- end }}
          ports:
            - name: http
              containerPort: {{ .Values.service.targetPort }}
              protocol: TCP
          livenessProbe:
            tcpSocket:
              port: http
            initialDelaySeconds: 60
            periodSeconds: 10
          readinessProbe:
            tcpSocket:
              port: http
            initialDelaySeconds: 60
            periodSeconds: 10
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}

cat templates/ingress.yaml

{{- if .Values.ingress.enabled -}}
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
  {{- with .Values.ingress.annotations }}
  annotations:
    {{- toYaml . | nindent 4 }}
  {{- end }}
spec:
{{- if .Values.ingress.tls }}
  tls:
    - hosts:
        - {{ .Values.ingress.host }}
      secretName: {{ .Values.ingress.tls.secretName }}
{{- end }}
  rules:
    - host: {{ .Values.ingress.host }}
      http:
        paths:
        - path: /
          backend:
            serviceName: {{ include "demo.fullname" . }}
            servicePort: {{ .Values.service.port }}
{{- end }}

cat templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ include "demo.fullname" . }}
  labels:
    {{- include "demo.labels" . | nindent 4 }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    {{- include "demo.selectorLabels" . | nindent 4 }}

cat templates/_helpers.tpl

{{- define "demo.fullname" -}}

{{- .Chart.Name -}}-{{ .Release.Name }}

{{- end -}}

{{/*

Common labels

*/}}

{{- define "demo.labels" -}}

app: {{ template "demo.fullname" . }}

chart: "{{ .Chart.Name }}-{{ .Chart.Version }}"

release: "{{ .Release.Name }}"

{{- end -}}

{{/*

Selector labels

*/}}

{{- define "demo.selectorLabels" -}}

app: {{ template "demo.fullname" . }}

release: "{{ .Release.Name }}"

{{- end -}}

cat templates/NOTES.txt

URL:

{{- if .Values.ingress.enabled }}

http{{ if .Values.ingress.tls }}s{{ end }}://{{ .Values.ingress.host }}

{{- end }}

{{- if contains "NodePort" .Values.service.type }}

export NODE_PORT=$(kubectl get --namespace {{ .Release.Namespace }} -o jsonpath="{.spec.ports[0].nodePort}" services {{ include "demo.fullname" . }})

export NODE_IP=$(kubectl get nodes --namespace {{ .Release.Namespace }} -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

{{- end }}

Add the repo

helm repo add --ca-file harbor.devopstack.cn.cert --cert-file harbor.devopstack.cn.crt --key-file harbor.devopstack.cn.key --username admin --password Harbor12345 springcloud https://192.168.10.20:443/chartrepo/springcloud/

Push the Helm chart to the repository

[root@k8s-master01 ~]# helm cm-push ./ms springcloud --ca-file ~/harbor.devopstack.cn.cert --cert-file ~/harbor.devopstack.cn.crt --key-file ~/harbor.devopstack.cn.key --username admin --password Harbor12345
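After the push it is useful to know which chart name and version to look for in the Harbor project. Helm names packaged charts NAME-VERSION.tgz, taken from the name and version fields of Chart.yaml. The sketch below derives that name from a copy of the Chart.yaml shown earlier; `chart_package_name` is a hypothetical helper written for this illustration, not a Helm command.

```shell
# Sketch: derive the NAME-VERSION.tgz archive name from a chart's Chart.yaml.
# chart_package_name is a hypothetical helper, not part of Helm.
chart_package_name() {
    chart_yaml="$1/Chart.yaml"
    # Match the top-level keys exactly so appVersion and apiVersion are ignored
    name="$(awk -F': *' '$1 == "name" {print $2}' "$chart_yaml")"
    version="$(awk -F': *' '$1 == "version" {print $2}' "$chart_yaml")"
    printf '%s-%s.tgz\n' "$name" "$version"
}

# Recreate the article's Chart.yaml in a scratch directory for the demonstration
chart="$(mktemp -d)"
cat > "$chart/Chart.yaml" <<'EOF'
apiVersion: v2
appVersion: 0.1.0
description: microservice
name: ms
type: application
version: 0.1.0
EOF

chart_package_name "$chart"
```

Bumping the version field in Chart.yaml before each push avoids overwriting a chart version that is already stored in the repository.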

4.5 Write the pipeline script to automate microservice releases
