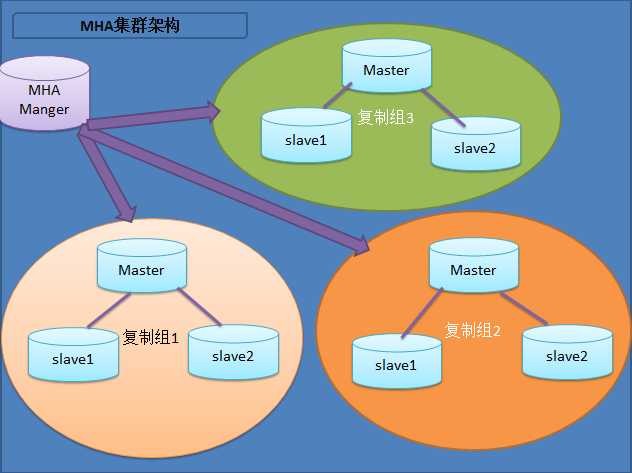

1.MHA的简单介绍

MHA(Master High Availability)在MySQL故障切换过程中,MHA能做到在0~30秒之内自动完成数据库的故障切换操作,并且在进行故障切换的过程中,MHA能在最大程度上保证数据的一致性,以达到真正意义上的高可用. 该软件由两部分组成:MHA Manager(管理节点)和MHA Node(数据节点)。MHA Manager可以单独部署在一台独立的机器上管理多个master-slave集群,也可以部署在一台slave节点上。MHA Node运行在每台MySQL服务器上,MHA Manager会定时探测集群中的master节点,当master出现故障时,它可以自动将最新数据的slave提升为新的master,然后将所有其他的slave重新指向新的master。整个故障转移过程对应用程序完全透明。

优点总结:

1、主从切换非常迅速,通常10-30s

2、最大程度上解决数据一致性的问题

3、不需要修改当前已有的MySQL架构和配置

4、不需要另外多余的服务器

5、没有性能损耗

6、没有存储引擎限制

1.1 MHA 工作原理

(1)从宕机崩溃的master保存二进制日志事件(binlog events);

(2)识别含有最新更新的slave;

(3)应用差异的中继日志(relay log)到其他的slave;

(4)应用从master保存的二进制日志事件(binlog events);

(5)提升一个slave为新的master;

(6)使其他的slave连接新的master进行复制;

1.2 MHA组成

(1)、 Manager工具:

– masterha_check_ssh : 检查MHA的SSH配置。

– masterha_check_repl : 检查MySQL复制。

– masterha_manager : 启动MHA。

– masterha_check_status : 检测当前MHA运行状态。

– masterha_master_monitor : 监测master是否宕机。

– masterha_master_switch : 控制故障转移(自动或手动)。

– masterha_conf_host : 添加或删除配置的server信息。

(2)、 Node工具(这些工具通常由MHAManager的脚本触发,无需人手操作)。

– save_binary_logs : 保存和复制master的二进制日志。

– apply_diff_relay_logs : 识别差异的中继日志事件并应用于其它slave。

– filter_mysqlbinlog : 去除不必要的ROLLBACK事件(MHA已不再使用这个工具)。

– purge_relay_logs : 清除中继日志(不会阻塞SQL线程)。

(3)、自定义扩展:

-secondary_check_script:通过多条网络路由检测master的可用性;

-master_ip_failover_script:更新application使用的masterip; (需要修改)

-shutdown_script:强制关闭master节点;

-report_script:发送报告;

-init_conf_load_script:加载初始配置参数;

-master_ip_online_change:更新master节点ip地址;(需要修改)

1.3 mha4mysql代码托管

https://code.google.com/archive/p/mysql-master-ha/

manager地址: https://github.com/yoshinorim/mha4mysql-manager

node地址: https://github.com/yoshinorim/mha4mysql-node

2 MHA环境准备

安装参考:https://github.com/yoshinorim/mha4mysql-manager/wiki/Installation

2.1 系统环境

[root@localhost ~]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

[root@localhost ~]# uname -r

3.10.0-693.el7.x86_64

2.2 关闭防火墙

iptables -F

iptables -L

systemctl stop firewalld.service

systemctl disable firewalld.service

2.3 setenforce设置

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

2.4 角色规划

| 角色 | IP 地址 | 主机名 | Server_id | 服务安装 |

|---|---|---|---|---|

| master节点 | 192.168.123.23 | MHA-M1 | 1 | mha node, mysql master |

| slave节点 | 192.168.123.63 | MHA-S1 | 2 | mha node ,mysql slave |

| slave节点 | 192.168.123.64 | MHA-S2 | 3 | mha node ,mysql slave |

| MHA管理节点 | 192.168.123.98 | mha-s3 | 无 | mha node,mha manager |

2.5 修改主机名

hostnamectl set-hostname MHA-M1

hostnamectl set-hostname MHA-S1

hostnamectl set-hostname MHA-S2

hostnamectl set-hostname MHA-S3

3. 安装mysql5.7配置好主从复制

3.1 安装mysql5.7

yum install epel* -y && yum clean all && yum makecache

rpm -Uvh http://repo.mysql.com/mysql57-community-release-el7.rpm

yum clean all && yum makecache

yum install gcc gcc-c++ openssl-devel mysql mysql-server mysql-devel -y

systemctl start mysqld

查看默认密码

cat /var/log/mysqld.log | grep 'password is generated'

对mysql进行安全加固

mysql_secure_installation

3.2 配置mysql

vim /etc/my.cnf

[client]

port = 3306

socket = /var/lib/mysql/mysql.sock

[mysqld]

server-id = 1

relay_log_purge=0

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

gtid_mode = on

enforce_gtid_consistency = 1

log_slave_updates = 1

#开启半同步复制 否则自动切换主从的时候会报主键错误

plugin_load = "rpl_semi_sync_master=semisync_master.so;rpl_semi_sync_slave=semisync_slave.so"

loose_rpl_semi_sync_master_enabled = 1

loose_rpl_semi_sync_slave_enabled = 1

loose_rpl_semi_sync_master_timeout = 5000

log-bin=mysql-bin

relay-log = mysql-relay-bin

replicate-wild-ignore-table=mysql.%

replicate-wild-ignore-table=test.%

replicate-wild-ignore-table=information_schema.%

symbolic-links=0

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

重启服务

systemctl restart mysqld

另外两台只需更改server-id

3.3 每台主机数据库实例上配置复制用户和监控用户

grant replication slave on *.* to 'repl_user'@'192.168.123.%' identified by 'Repl_user123456';

grant all on *.* to 'mha'@'192.168.123.%' identified by 'Repl_user123456';

flush privileges;

3.4 在主master上查看状态

show master status;

+------------------+----------+--------------+------------------+------------------------------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+------------------------------------------+

| mysql-bin.000002 | 194 | | | 85d8cfdc-c01d-11e9-9505-5254006ea49d:1-3 |

+------------------+----------+--------------+------------------+------------------------------------------+

3.5 在两个slave节点执行下面的操作

change master to master_host='192.168.123.23',master_user='repl_user',master_password='Repl_user123456',master_log_file='mysql-bin.000002',master_log_pos=194;

从库操作:

start slave;

show slave status\G;

show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.123.23

Master_User: repl_user

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000002

Read_Master_Log_Pos: 194

Relay_Log_File: mysql-relay-bin.000002

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000002

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

主从同步成功。

从节点设置只读权限:

set global read_only=1 ;

4 MHA的安装和配置

4.1 配置三个节点的 ssh 免密

在三个 mysql 节点分别执行如下操作

ssh-keygen -t rsa

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.123.63

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.123.64

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.123.23

ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.123.98

验证

[root@mha-s2 ~]# ssh root@192.168.123.23

Last login: Fri Aug 16 19:12:53 2019 from 192.168.123.1

4.2 安装 MHA 软件

4.2.1 每台主机上安装node节点

yum install perl-DBD-MySQL -y

wget https://github.com/yoshinorim/mha4mysql-node/releases/download/v0.58/mha4mysql-node-0.58-0.el7.centos.noarch.rpm

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

4.2.2 安装manager节点

yum install perl-Parallel-ForkManager perl-Log-Dispatch perl-Config-Tiny perl-DBD-MySQL -y

wget https://github.com/yoshinorim/mha4mysql-manager/releases/download/v0.58/mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

[root@mha-s3 ~]# rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

准备中... ################################# [100%]

正在升级/安装...

1:mha4mysql-manager-0.58-0.el7.cent################################# [100%]

4.3 管理节点配置MHA

mkdir /etc/mha

mkdir mkdir /data/mha/mha/app1 -p

vim /etc/mha/app1.cnf

[server default]

manager_workdir=/etc/mha/app1

manager_log=/var/log/manager.log

master_binlog_dir=/var/lib/mysql

ssh_user=root

user=mha

password=Repl_user123456

repl_user=repl_user

repl_password=Repl_user123456

secondary_check_script=masterha_secondary_check -s 192.168.123.63 -s 192.168.123.64 -s 192.168.123.23

ping_interval=3

#master_ip_failover_script=/etc/mha/app1/master_ip_failover

#shutdown_script=/etc/mha/app1/power_manager

#report_script=/etc/mha/app1/send_report

#master_ip_online_change_script=/etc/mha/app1/master_ip_online_change

[server1]

hostname=192.168.123.23

port=3306

#master_binlog_dir=/data/mysql/data

candidate_master=1

check_repl_delay=0

[server2]

hostname=192.168.123.63

port=3306

#master_binlog_dir=/data/mysql/data

candidate_master=1 #设置为候选master,如果设置该参数以后,发生主从切换以后将会将此从库提升为主库,即使这个主库不是集群中事件最新的slave

check_repl_delay=0 #默认情况下如果一个slave落后master 100M的relay logs的话,MHA将不会选择该slave作为一个新的master,因为对于这个slave的恢复需要花费很长时间,通过设置check_repl_delay=0,MHA触发切换在选择一个新的master的时候将会忽略复制延时,这个参数对于设置了candidate_master=1的主机非常有用,因为这个候选主在切换的过程中一定是新的master

[server3]

hostname=192.168.123.64

port=3306

no_master=1

注释:

#master_ip_failover_script=/etc/mha/app1/master_ip_failover #自动切换时vip管理的脚本,不是必须,如果我们使用keepalived的,我们可以自己编写脚本完成对vip的管理,比如监控mysql,如果mysql异常,我们停止keepalived就行,这样vip就会自动漂移

#shutdown_script=/etc/mha/app1/power_manager #故障发生后关闭主机的脚本,不是必须

#report_script=/etc/mha/app1/send_report #因故障切换后发送报警的脚本,不是必须,可自行编写简单的shell完成。

#master_ip_online_change_script=/etc/mha/app1/master_ip_online_change #在线切换时vip的管理,不是必须,同样可以可以自行编写简单的shell完成

4.4 验证SSH互认是否成功

masterha_check_ssh --conf=/etc/mha/app1.cnf

Sun Aug 25 18:03:44 2019 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sun Aug 25 18:03:44 2019 - [info] Reading application default configuration from /etc/mha/app1.cnf..

Sun Aug 25 18:03:44 2019 - [info] Reading server configuration from /etc/mha/app1.cnf..

Sun Aug 25 18:03:44 2019 - [info] Starting SSH connection tests..

Sun Aug 25 18:03:45 2019 - [debug]

Sun Aug 25 18:03:44 2019 - [debug] Connecting via SSH from root@192.168.123.23(192.168.123.23:22) to root@192.168.123.63(192.168.123.63:22)..

Sun Aug 25 18:03:45 2019 - [debug] ok.

Sun Aug 25 18:03:45 2019 - [debug] Connecting via SSH from root@192.168.123.23(192.168.123.23:22) to root@192.168.123.64(192.168.123.64:22)..

Sun Aug 25 18:03:45 2019 - [debug] ok.

Sun Aug 25 18:03:46 2019 - [debug]

Sun Aug 25 18:03:45 2019 - [debug] Connecting via SSH from root@192.168.123.63(192.168.123.63:22) to root@192.168.123.23(192.168.123.23:22)..

Sun Aug 25 18:03:45 2019 - [debug] ok.

Sun Aug 25 18:03:45 2019 - [debug] Connecting via SSH from root@192.168.123.63(192.168.123.63:22) to root@192.168.123.64(192.168.123.64:22)..

Sun Aug 25 18:03:45 2019 - [debug] ok.

Sun Aug 25 18:03:47 2019 - [debug]

Sun Aug 25 18:03:45 2019 - [debug] Connecting via SSH from root@192.168.123.64(192.168.123.64:22) to root@192.168.123.23(192.168.123.23:22)..

Sun Aug 25 18:03:46 2019 - [debug] ok.

Sun Aug 25 18:03:46 2019 - [debug] Connecting via SSH from root@192.168.123.64(192.168.123.64:22) to root@192.168.123.63(192.168.123.63:22)..

Sun Aug 25 18:03:46 2019 - [debug] ok.

Sun Aug 25 18:03:47 2019 - [info] All SSH connection tests passed successfully.

4.5 检查mysql replication是否配置成功

masterha_check_repl --conf=/etc/mha/app1.cnf

Sun Aug 25 17:59:34 2019 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sun Aug 25 17:59:34 2019 - [info] Reading application default configuration from /etc/mha/app1.cnf..

Sun Aug 25 17:59:34 2019 - [info] Reading server configuration from /etc/mha/app1.cnf..

Sun Aug 25 17:59:34 2019 - [info] MHA::MasterMonitor version 0.58.

Sun Aug 25 17:59:35 2019 - [info] GTID failover mode = 0

Sun Aug 25 17:59:35 2019 - [info] Dead Servers:

Sun Aug 25 17:59:35 2019 - [info] Alive Servers:

Sun Aug 25 17:59:35 2019 - [info] 192.168.123.23(192.168.123.23:3306)

Sun Aug 25 17:59:35 2019 - [info] 192.168.123.63(192.168.123.63:3306)

Sun Aug 25 17:59:35 2019 - [info] 192.168.123.64(192.168.123.64:3306)

Sun Aug 25 17:59:35 2019 - [info] Alive Slaves:

Sun Aug 25 17:59:35 2019 - [info] 192.168.123.63(192.168.123.63:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Sun Aug 25 17:59:35 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Sun Aug 25 17:59:35 2019 - [info] Primary candidate for the new Master (candidate_master is set)

Sun Aug 25 17:59:35 2019 - [info] 192.168.123.64(192.168.123.64:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Sun Aug 25 17:59:35 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Sun Aug 25 17:59:35 2019 - [info] Not candidate for the new Master (no_master is set)

Sun Aug 25 17:59:35 2019 - [info] Current Alive Master: 192.168.123.23(192.168.123.23:3306)

Sun Aug 25 17:59:35 2019 - [info] Checking slave configurations..

Sun Aug 25 17:59:35 2019 - [info] Checking replication filtering settings..

Sun Aug 25 17:59:35 2019 - [info] binlog_do_db= , binlog_ignore_db=

Sun Aug 25 17:59:35 2019 - [info] Replication filtering check ok.

Sun Aug 25 17:59:35 2019 - [info] GTID (with auto-pos) is not supported

Sun Aug 25 17:59:35 2019 - [info] Starting SSH connection tests..

Sun Aug 25 17:59:37 2019 - [info] All SSH connection tests passed successfully.

Sun Aug 25 17:59:37 2019 - [info] Checking MHA Node version..

Sun Aug 25 17:59:38 2019 - [info] Version check ok.

Sun Aug 25 17:59:38 2019 - [info] Checking SSH publickey authentication settings on the current master..

Sun Aug 25 17:59:38 2019 - [info] HealthCheck: SSH to 192.168.123.23 is reachable.

Sun Aug 25 17:59:38 2019 - [info] Master MHA Node version is 0.58.

Sun Aug 25 17:59:38 2019 - [info] Checking recovery script configurations on 192.168.123.23(192.168.123.23:3306)..

Sun Aug 25 17:59:38 2019 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/var/lib/mysql --output_file=/var/tmp/save_binary_logs_test --manager_version=0.58 --start_file=mysql-bin.000003

Sun Aug 25 17:59:38 2019 - [info] Connecting to root@192.168.123.23(192.168.123.23:22)..

Creating /var/tmp if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /var/lib/mysql, up to mysql-bin.000003

Sun Aug 25 17:59:38 2019 - [info] Binlog setting check done.

Sun Aug 25 17:59:38 2019 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Sun Aug 25 17:59:38 2019 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.123.63 --slave_ip=192.168.123.63 --slave_port=3306 --workdir=/var/tmp --target_version=5.7.27-log --manager_version=0.58 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Sun Aug 25 17:59:38 2019 - [info] Connecting to root@192.168.123.63(192.168.123.63:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to mysql-relay-bin.000005

Temporary relay log file is /var/lib/mysql/mysql-relay-bin.000005

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Sun Aug 25 17:59:39 2019 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.123.64 --slave_ip=192.168.123.64 --slave_port=3306 --workdir=/var/tmp --target_version=5.7.27-log --manager_version=0.58 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Sun Aug 25 17:59:39 2019 - [info] Connecting to root@192.168.123.64(192.168.123.64:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to mysql-relay-bin.000006

Temporary relay log file is /var/lib/mysql/mysql-relay-bin.000006

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Sun Aug 25 17:59:39 2019 - [info] Slaves settings check done.

Sun Aug 25 17:59:39 2019 - [info]

192.168.123.23(192.168.123.23:3306) (current master)

+--192.168.123.63(192.168.123.63:3306)

+--192.168.123.64(192.168.123.64:3306)

Sun Aug 25 17:59:39 2019 - [info] Checking replication health on 192.168.123.63..

Sun Aug 25 17:59:39 2019 - [info] ok.

Sun Aug 25 17:59:39 2019 - [info] Checking replication health on 192.168.123.64..

Sun Aug 25 17:59:39 2019 - [info] ok.

Sun Aug 25 17:59:39 2019 - [warning] master_ip_failover_script is not defined.

Sun Aug 25 17:59:39 2019 - [warning] shutdown_script is not defined.

Sun Aug 25 17:59:39 2019 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

4.6 启动mha监测集群

nohup masterha_manager --conf=/etc/mha/app1.cnf </dev/null >/var/log/mha/mag.log 2>&1 &

查看:

masterha_check_status --conf=/etc/mha/app1.cnf

app1 (pid:5124) is running(0:PING_OK), master:192.168.123.23

ps -ef |grep masterha |grep -v 'grep'

root 5124 969 0 11:34 pts/0 00:00:00 perl /usr/bin/masterha_manager --conf=/etc/mha/app1.cnf

查看日志:

tail -1000f manager.log

Mon Aug 26 11:34:22 2019 - [info] MHA::MasterMonitor version 0.58.

Mon Aug 26 11:34:23 2019 - [info] GTID failover mode = 0

Mon Aug 26 11:34:23 2019 - [info] Dead Servers:

Mon Aug 26 11:34:23 2019 - [info] Alive Servers:

Mon Aug 26 11:34:23 2019 - [info] 192.168.123.23(192.168.123.23:3306)

Mon Aug 26 11:34:23 2019 - [info] 192.168.123.63(192.168.123.63:3306)

Mon Aug 26 11:34:23 2019 - [info] 192.168.123.64(192.168.123.64:3306)

Mon Aug 26 11:34:23 2019 - [info] Alive Slaves:

Mon Aug 26 11:34:23 2019 - [info] 192.168.123.63(192.168.123.63:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Mon Aug 26 11:34:23 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Mon Aug 26 11:34:23 2019 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Aug 26 11:34:23 2019 - [info] 192.168.123.64(192.168.123.64:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Mon Aug 26 11:34:23 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Mon Aug 26 11:34:23 2019 - [info] Not candidate for the new Master (no_master is set)

Mon Aug 26 11:34:23 2019 - [info] Current Alive Master: 192.168.123.23(192.168.123.23:3306)

Mon Aug 26 11:34:23 2019 - [info] Checking slave configurations..

Mon Aug 26 11:34:23 2019 - [info] Checking replication filtering settings..

Mon Aug 26 11:34:23 2019 - [info] binlog_do_db= , binlog_ignore_db=

Mon Aug 26 11:34:23 2019 - [info] Replication filtering check ok.

Mon Aug 26 11:34:23 2019 - [info] GTID (with auto-pos) is not supported

Mon Aug 26 11:34:23 2019 - [info] Starting SSH connection tests..

Mon Aug 26 11:34:26 2019 - [info] All SSH connection tests passed successfully.

Mon Aug 26 11:34:26 2019 - [info] Checking MHA Node version..

Mon Aug 26 11:34:26 2019 - [info] Version check ok.

Mon Aug 26 11:34:26 2019 - [info] Checking SSH publickey authentication settings on the current master..

Mon Aug 26 11:34:26 2019 - [info] HealthCheck: SSH to 192.168.123.23 is reachable.

Mon Aug 26 11:34:27 2019 - [info] Master MHA Node version is 0.58.

Mon Aug 26 11:34:27 2019 - [info] Checking recovery script configurations on 192.168.123.23(192.168.123.23:3306)..

Mon Aug 26 11:34:27 2019 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/var/lib/mysql --output_file=/var/tmp/save_binary_logs_test --manager_version=0.58 --start_file=mysql-bin.000003

Mon Aug 26 11:34:27 2019 - [info] Connecting to root@192.168.123.23(192.168.123.23:22)..

Creating /var/tmp if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /var/lib/mysql, up to mysql-bin.000003

Mon Aug 26 11:34:27 2019 - [info] Binlog setting check done.

Mon Aug 26 11:34:27 2019 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Mon Aug 26 11:34:27 2019 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.123.63 --slave_ip=192.168.123.63 --slave_port=3306 --workdir=/var/tmp --target_version=5.7.27-log --manager_version=0.58 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Mon Aug 26 11:34:27 2019 - [info] Connecting to root@192.168.123.63(192.168.123.63:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to mysql-relay-bin.000005

Temporary relay log file is /var/lib/mysql/mysql-relay-bin.000005

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Mon Aug 26 11:34:27 2019 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='mha' --slave_host=192.168.123.64 --slave_ip=192.168.123.64 --slave_port=3306 --workdir=/var/tmp --target_version=5.7.27-log --manager_version=0.58 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Mon Aug 26 11:34:27 2019 - [info] Connecting to root@192.168.123.64(192.168.123.64:22)..

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to mysql-relay-bin.000006

Temporary relay log file is /var/lib/mysql/mysql-relay-bin.000006

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Mon Aug 26 11:34:28 2019 - [info] Slaves settings check done.

Mon Aug 26 11:34:28 2019 - [info]

192.168.123.23(192.168.123.23:3306) (current master)

+--192.168.123.63(192.168.123.63:3306)

+--192.168.123.64(192.168.123.64:3306)

Mon Aug 26 11:34:28 2019 - [warning] master_ip_failover_script is not defined.

Mon Aug 26 11:34:28 2019 - [warning] shutdown_script is not defined.

Mon Aug 26 11:34:28 2019 - [info] Set master ping interval 3 seconds.

Mon Aug 26 11:34:28 2019 - [info] Set secondary check script: masterha_secondary_check -s 192.168.123.63 -s 192.168.123.64 -s 192.168.123.23

Mon Aug 26 11:34:28 2019 - [info] Starting ping health check on 192.168.123.23(192.168.123.23:3306)..

Mon Aug 26 11:34:28 2019 - [info] Ping(SELECT) succeeded, waiting until MySQL doesn't respond..

关闭命令

masterha_stop --conf=/data/mha/app1.cnf

5 各种场景mha测试主从切换

5.1 测试无vipip主从同步是否自动切换

准备,先来检查主从是否都均已正常

首先,停止master端的mysqld服务进程,然后查看备库也就是slave01是否已经提升到主库

其次,登录slave02端查看主从是否正常,是否更新到新的master的ip上也就是是否执行slave01的ip地址

最后,启动master端的mysqld服务进程,并将其加入到主从模式中

1.检查主从是否正常

[root@mha-s1 ~]# mysql -uroot -p -e "show slave status \G" |grep "Yes"

Enter password:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G" |grep "Yes"

Enter password:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

结果为正常

2.停止master端的mysqld

[root@mha-m1 ~]# service mysqld stop

Redirecting to /bin/systemctl stop mysqld.service

查看主从是否切换

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G" |grep "Yes"

Enter password:

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Slave_SQL_Running: Yes

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G"

Enter password:

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.123.63

Master_User: repl_user

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000003

已切换至mha-s1上

[root@mha-s3 ~]# masterha_check_status --conf=/etc/mha/app1.cnf

app1 is stopped(2:NOT_RUNNING).

[1]+ 完成 nohup masterha_manager --conf=/etc/mha/app1.cnf < /dev/null > /var/log/mha/mag.log 2>&1

查看/var/log/manager.log 日志如下:

----- Failover Report -----

app1: MySQL Master failover 192.168.123.23(192.168.123.23:3306) to 192.168.123.63(192.168.123.63:3306) succeeded

#mysql主由192.168.123.23切至192.168.123.63

Master 192.168.123.23(192.168.123.23:3306) is down!

Check MHA Manager logs at mha-s3:/var/log/manager.log for details.

Started automated(non-interactive) failover.

The latest slave 192.168.123.63(192.168.123.63:3306) has all relay logs for recovery.

Selected 192.168.123.63(192.168.123.63:3306) as a new master.

192.168.123.63(192.168.123.63:3306): OK: Applying all logs succeeded.

192.168.123.64(192.168.123.64:3306): This host has the latest relay log events.

Generating relay diff files from the latest slave succeeded.

192.168.123.64(192.168.123.64:3306): OK: Applying all logs succeeded. Slave started, replicating from 192.168.123.63(192.168.123.63:3306)

192.168.123.63(192.168.123.63:3306): Resetting slave info succeeded.

Master failover to 192.168.123.63(192.168.123.63:3306) completed successfully.

3.原master加入集群

在manager端的mha日志文件中找到主从同步的sql语句,这条语句只需要修改密码即可使用:

grep 'MASTER_HOST' /var/log/manager.log

Mon Aug 26 14:57:11 2019 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.123.63', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000003', MASTER_LOG_POS=194, MASTER_USER='repl_user', MASTER_PASSWORD='xxx';

在原来的主上执行如下语句:

mysql -uroot -p -e "CHANGE MASTER TO MASTER_HOST='192.168.123.63', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000003', MASTER_LOG_POS=194, MASTER_USER='repl_user', MASTER_PASSWORD='Repl_user123456'; start slave;"

查看原master是否加入新集群

Master_TLS_Version:

[root@mha-m1 ~]# mysql -uroot -p -e "show slave status\G" |egrep "Master_Host|Slave_IO_Running|Slave_SQL_Running"

Enter password:

Master_Host: 192.168.123.63

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

如上可看出已加入至新集群

实验成功。

5.2 测试主从同步切换之后VIP漂移

5.2.1 master_ip_failover_script对应的脚本修改

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

command,ssh_user, orig_master_host,orig_master_ip,

orig_master_port,new_master_host, new_master_ip,new_master_port

);

my vip = '192.168.123.22/24';

mykey = '1';

my ssh_start_vip = "/sbin/ifconfig eth0:key vip";

myssh_stop_vip = "/sbin/ifconfig eth0:key down";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====ssh_stop_vip==ssh_start_vip===\n\n";

if (command eq "stop" || command eq "stopssh" ) {

myexit_code = 1;

eval {

print "Disabling the VIP on old master: orig_master_host \n";

&stop_vip();exit_code = 0;

};

if (@) {

warn "Got Error:@\n";

exit exit_code;

}

exitexit_code;

}

elsif ( command eq "start" ) {

myexit_code = 10;

eval {

print "Enabling the VIP - vip on the new master -new_master_host \n";

&start_vip();

exit_code = 0;

};

if (@) {

warn @;

exitexit_code;

}

exit exit_code;

}

elsif (command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh ssh_user\@new_master_host \" ssh_start_vip \"`;

}

sub stop_vip() {

`sshssh_user\@orig_master_host \"ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

原来Failover两种方式:一种是虚拟IP地址,一种是全局配置文件。MHA并没有限定使用哪一种方式,而是让用户自己选择,虚拟IP地址的方式会牵扯到其它的软件,比如keepalive软件,而且还要修改脚本master_ip_failover。这里先把app1.cnf 里面 master_ip_failover_script= /usr/local/bin/master_ip_failover这个选项屏蔽才可以通过。

5.2.2 先来检查主从是否都均已正常

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.63

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

主从是正常的。

5.2.3 启动mhaManager

nohup masterha_manager --conf=/etc/mha/app1.cnf </dev/null >/var/log/mha/mag.log 2>&1 &

masterha_check_status --conf=/etc/mha/app1.cnf

app1 (pid:12015) is running(0:PING_OK), master:192.168.123.63

5.2.4 failover 测试

停止slave01端的mysqld服务进程,然后查看master是否已经提升到新的主库

[root@mha-s1 ~]# service mysqld stop

Redirecting to /bin/systemctl stop mysqld.service

[root@mha-s1 ~]#

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.23

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

之前master已提升为主

5.2.5 failover 测试结果验证

vip地址已更新至新的主上,完成了主从切换及vip的切换。

[root@mha-m1 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.123.23 netmask 255.255.255.0 broadcast 192.168.123.255

inet6 fe80::ffd5:7e3d:5a19:281e prefixlen 64 scopeid 0x20<link>

ether 52:54:00:6e:a4:9d txqueuelen 1000 (Ethernet)

RX packets 613907 bytes 58242195 (55.5 MiB)

RX errors 0 dropped 18 overruns 0 frame 0

TX packets 116173 bytes 13147091 (12.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.123.22 netmask 255.255.255.0 broadcast 192.168.123.255

ether 52:54:00:6e:a4:9d txqueuelen 1000 (Ethernet)

查看mha日志如下

----- Failover Report -----

app1: MySQL Master failover 192.168.123.63(192.168.123.63:3306) to 192.168.123.23(192.168.123.23:3306) succeeded

Master 192.168.123.63(192.168.123.63:3306) is down!

Check MHA Manager logs at mha-s3:/var/log/manager.log for details.

Started automated(non-interactive) failover.

Invalidated master IP address on 192.168.123.63(192.168.123.63:3306)

The latest slave 192.168.123.23(192.168.123.23:3306) has all relay logs for recovery.

Selected 192.168.123.23(192.168.123.23:3306) as a new master.

192.168.123.23(192.168.123.23:3306): OK: Applying all logs succeeded.

192.168.123.23(192.168.123.23:3306): OK: Activated master IP address.

192.168.123.64(192.168.123.64:3306): This host has the latest relay log events.

Generating relay diff files from the latest slave succeeded.

192.168.123.64(192.168.123.64:3306): OK: Applying all logs succeeded. Slave started, replicating from 192.168.123.23(192.168.123.23:3306)

192.168.123.23(192.168.123.23:3306): Resetting slave info succeeded.

Master failover to 192.168.123.23(192.168.123.23:3306) completed successfully.

Tue Aug 27 14:50:39 2019 - [info] Sending mail..

5.2.6 启动master端的mysqld服务进程,并将其加入到主从模式中

grep 'MASTER_HOST' /var/log/manager.log

Tue Aug 27 14:50:37 2019 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.123.23', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=194, MASTER_USER='repl_user', MASTER_PASSWORD='xxx';

在slave01执行如下命令

[root@mha-s1 ~]# service mysqld start

Redirecting to /bin/systemctl start mysqld.service

mysql -uroot -p -e "CHANGE MASTER TO MASTER_HOST='192.168.123.23', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=194, MASTER_USER='repl_user', MASTER_PASSWORD='Repl_user123456'; start slave;"

[root@mha-s1 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.23

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

至此 slave01已加入至集群。

这里强调下,默认情况下每次主备库切换后,mha服务都会停止。

5.4 手动在线切换测试

在许多情况下, 需要将现有的主服务器迁移到另外一台服务器上。 比如主服务器硬件故障,RAID 控制卡需要重建,将主服务器移到性能更好的服务器上等等。维护主服务器引起性能下降, 导致停机时间至少无法写入数据。 另外, 阻塞或杀掉当前运行的会话会导致主主之间数据不一致的问题发生。 MHA 提供快速切换和优雅的阻塞写入,这个切换过程只需要 0.5-2s 的时间,这段时间内数据是无法写入的。在很多情况下,0.5-2s 的阻塞写入是可以接受的。因此切换主服务器不需要计划分配维护时间窗口。

MHA在线切换的大概过程:

1.检测复制设置和确定当前主服务器

2.确定新的主服务器

3.阻塞写入到当前主服务器

4.等待所有从服务器赶上复制

5.授予写入到新的主服务器

6.重新设置从服务器

注意,在线切换的时候应用架构需要考虑以下两个问题:

1.自动识别master和slave的问题(master的机器可能会切换),如果采用了vip的方式,基本可以解决这个问题。

2.负载均衡的问题(可以定义大概的读写比例,每台机器可承担的负载比例,当有机器离开集群时,需要考虑这个问题)

5.5 在线手动切换步骤

5.5.1.先停止mha监控程序

[root@mha-s3 app1]# masterha_stop --conf=/etc/mha/app1.cnf

MHA Manager is not running on app1(2:NOT_RUNNING).

5.5.2 检测主从状态等

[root@mha-s1 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.23

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

[root@mha-s3 app1]# masterha_check_repl --conf=/etc/mha/app1.cnf

.......

MySQL Replication Health is OK.

[root@mha-m1 ~]# ifconfig | grep -A 2 eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.123.22 netmask 255.255.255.0 broadcast 192.168.123.255

ether 52:54:00:6e:a4:9d txqueuelen 1000 (Ethernet)

5.5.2.修改master_ip_online_change脚本

cat /etc/mha/app1/master_ip_online_change

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

use MHA::DBHelper;

use MHA::NodeUtil;

use Time::HiRes qw( sleep gettimeofday tv_interval );

use Data::Dumper;

my _tstart;

my_running_interval = 0.1;

my (

command,orig_master_host, orig_master_ip,orig_master_port, orig_master_user,new_master_host, new_master_ip,new_master_port,

new_master_user,

);

myvip = '192.168.123.22/24'; # Virtual IP

my key = "1";

myssh_start_vip = "/sbin/ifconfig eth0:keyvip";

my ssh_stop_vip = "/sbin/ifconfig eth0:key down";

my ssh_user = "root";

mynew_master_password='Repl_user123456';

my orig_master_password='Repl_user123456';

GetOptions(

'command=s' => \$command,

#'ssh_user=s' => \$ssh_user, 'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'orig_master_user=s' => \$orig_master_user,

#'orig_master_password=s' => \$orig_master_password,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

'new_master_user=s' => \$new_master_user,

#'new_master_password=s' => \$new_master_password,

);

exit &main();

sub current_time_us {

my (sec, microsec ) = gettimeofday();

mycurdate = localtime(sec);

returncurdate . " " . sprintf( "%06d", microsec );

}

sub sleep_until {

myelapsed = tv_interval(_tstart);

if (_running_interval > elapsed ) {

sleep(_running_interval - elapsed );

}

}

sub get_threads_util {

mydbh = shift;

my my_connection_id = shift;

myrunning_time_threshold = shift;

my type = shift;running_time_threshold = 0 unless (running_time_threshold);type = 0 unless (type);

my @threads;

mysth = dbh->prepare("SHOW PROCESSLIST");sth->execute();

while ( my ref =sth->fetchrow_hashref() ) {

my id =ref->{Id};

my user =ref->{User};

my host =ref->{Host};

my command =ref->{Command};

my state =ref->{State};

my query_time =ref->{Time};

my info =ref->{Info};

info =~ s/^\s*(.*?)\s*/1/ if defined(info);

next if ( my_connection_id ==id );

next if ( defined(query_time) &&query_time < running_time_threshold );

next if ( defined(command) && command eq "Binlog Dump" );

next if ( defined(user) && user eq "system user" );

next

if ( defined(command)

&& command eq "Sleep"

&& defined(query_time)

&& query_time >= 1 );

if (type >= 1 ) {

next if ( defined(command) &&command eq "Sleep" );

next if ( defined(command) &&command eq "Connect" );

}

if ( type >= 2 ) {

next if ( defined(info) && info =~ m/^select/i );

next if ( defined(info) && info =~ m/^show/i );

}

push @threads,ref;

}

return @threads;

}

sub main {

if ( command eq "stop" ) {

## Gracefully killing connections on the current master

# 1. Set read_only= 1 on the new master

# 2. DROP USER so that no app user can establish new connections

# 3. Set read_only= 1 on the current master

# 4. Kill current queries

# * Any database access failure will result in script die.

myexit_code = 1;

eval {

## Setting read_only=1 on the new master (to avoid accident)

my new_master_handler = new MHA::DBHelper();

# args: hostname, port, user, password, raise_error(die_on_error)_or_notnew_master_handler->connect( new_master_ip,new_master_port,

new_master_user,new_master_password, 1 );

print current_time_us() . " Set read_only on the new master.. ";

new_master_handler->enable_read_only();

if (new_master_handler->is_read_only() ) {

print "ok.\n";

}

else {

die "Failed!\n";

}

new_master_handler->disconnect();

# Connecting to the orig master, die if any database error happens

myorig_master_handler = new MHA::DBHelper();

orig_master_handler->connect(orig_master_ip, orig_master_port,orig_master_user, orig_master_password, 1 );

## Drop application user so that nobody can connect. Disabling per-session binlog beforehand

#orig_master_handler->disable_log_bin_local();

#print current_time_us() . " Drpping app user on the orig master..\n";

#FIXME_xxx_drop_app_user(orig_master_handler);

## Waiting for N * 100 milliseconds so that current connections can exit

mytime_until_read_only = 15;

_tstart = [gettimeofday];

my @threads = get_threads_util(orig_master_handler->{dbh},

orig_master_handler->{connection_id} );

while (time_until_read_only > 0 && #threads >= 0 ) {

if (time_until_read_only % 5 == 0 ) {

printf

"%s Waiting all running %d threads are disconnected.. (max %d milliseconds)\n",

current_time_us(), #threads + 1,time_until_read_only * 100;

if ( #threads_] )->Indent(0)->Terse(1)->Dump . "\n"

foreach (@threads);

}

}

sleep_until();

_tstart = [gettimeofday];time_until_read_only--;

@threads = get_threads_util( orig_master_handler->{dbh},orig_master_handler->{connection_id} );

}

## Setting read_only=1 on the current master so that nobody(except SUPER) can write

print current_time_us() . " Set read_only=1 on the orig master.. ";

orig_master_handler->enable_read_only();

if (orig_master_handler->is_read_only() ) {

print "ok.\n";

}

else {

die "Failed!\n";

}

## Waiting for M * 100 milliseconds so that current update queries can complete

my time_until_kill_threads = 5;

@threads = get_threads_util(orig_master_handler->{dbh},

orig_master_handler->{connection_id} );

while (time_until_kill_threads > 0 && #threads >= 0 ) {

if (time_until_kill_threads % 5 == 0 ) {

printf

"%s Waiting all running %d queries are disconnected.. (max %d milliseconds)\n",

current_time_us(), #threads + 1,time_until_kill_threads * 100;

if ( #threads_] )->Indent(0)->Terse(1)->Dump . "\n"

foreach (@threads);

}

}

sleep_until();

_tstart = [gettimeofday];time_until_kill_threads--;

@threads = get_threads_util( orig_master_handler->{dbh},orig_master_handler->{connection_id} );

}

print "Disabling the VIP on old master: orig_master_host \n";

&stop_vip();

## Terminating all threads

print current_time_us() . " Killing all application threads..\n";orig_master_handler->kill_threads(@threads) if ( #threads >= 0 );

print current_time_us() . " done.\n";

#orig_master_handler->enable_log_bin_local();

orig_master_handler->disconnect();

## After finishing the script, MHA executes FLUSH TABLES WITH READ LOCKexit_code = 0;

};

if (@) {

warn "Got Error:@\n";

exit exit_code;

}

exitexit_code;

}

elsif ( command eq "start" ) {

## Activating master ip on the new master

# 1. Create app user with write privileges

# 2. Moving backup script if needed

# 3. Register new master's ip to the catalog database

# We don't return error even though activating updatable accounts/ip failed so that we don't interrupt slaves' recovery.

# If exit code is 0 or 10, MHA does not abort

myexit_code = 10;

eval {

my new_master_handler = new MHA::DBHelper();

# args: hostname, port, user, password, raise_error_or_notnew_master_handler->connect( new_master_ip,new_master_port,

new_master_user,new_master_password, 1 );

## Set read_only=0 on the new master

#new_master_handler->disable_log_bin_local();

print current_time_us() . " Set read_only=0 on the new master.\n";new_master_handler->disable_read_only();

## Creating an app user on the new master

#print current_time_us() . " Creating app user on the new master..\n";

#FIXME_xxx_create_app_user(new_master_handler);

#new_master_handler->enable_log_bin_local();

new_master_handler->disconnect();

## Update master ip on the catalog database, etc

print "Enabling the VIP -vip on the new master - new_master_host \n";

&start_vip();exit_code = 0;

};

if (@) {

warn "Got Error:@\n";

exit exit_code;

}

exitexit_code;

}

elsif ( command eq "status" ) {

# do nothing

exit 0;

}

else {

&usage();

exit 1;

}

}

# A simple system call that enable the VIP on the new master

sub start_vip() {

`sshssh_user\@new_master_host \"ssh_start_vip \"`;

}

# A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh ssh_user\@orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_online_change --command=start|stop|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

die;

}

5.5.3 执行在线手动切换

masterha_master_switch --conf=/etc/mha/app1.cnf --master_state=alive --new_master_host=192.168.123.63 --new_master_port=3306 --orig_master_is_new_slave --running_updates_limit=10000 --interactive=0

参数解释:

new_master_host:指定哪台成为新的主库

new_master_port:指定对应的数据库端口

orig_master_is_new_slave:将原来的主库变为从库

running_updates_limit:指定复制延迟在10000s内的都可切换

interactive:表示不需要人工干预,自动执行

执行结果如下:

Tue Aug 27 20:50:09 2019 - [info] MHA::MasterRotate version 0.58.

Tue Aug 27 20:50:09 2019 - [info] Starting online master switch..

Tue Aug 27 20:50:09 2019 - [info]

Tue Aug 27 20:50:09 2019 - [info] * Phase 1: Configuration Check Phase..

Tue Aug 27 20:50:09 2019 - [info]

Tue Aug 27 20:50:09 2019 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Tue Aug 27 20:50:09 2019 - [info] Reading application default configuration from /etc/mha/app1.cnf..

Tue Aug 27 20:50:10 2019 - [info] Reading server configuration from /etc/mha/app1.cnf..

Tue Aug 27 20:50:11 2019 - [info] GTID failover mode = 0

Tue Aug 27 20:50:11 2019 - [info] Current Alive Master: 192.168.123.23(192.168.123.23:3306)

Tue Aug 27 20:50:11 2019 - [info] Alive Slaves:

Tue Aug 27 20:50:11 2019 - [info] 192.168.123.63(192.168.123.63:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Tue Aug 27 20:50:11 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Tue Aug 27 20:50:11 2019 - [info] Primary candidate for the new Master (candidate_master is set)

Tue Aug 27 20:50:11 2019 - [info] 192.168.123.64(192.168.123.64:3306) Version=5.7.27-log (oldest major version between slaves) log-bin:enabled

Tue Aug 27 20:50:11 2019 - [info] Replicating from 192.168.123.23(192.168.123.23:3306)

Tue Aug 27 20:50:11 2019 - [info] Not candidate for the new Master (no_master is set)

Tue Aug 27 20:50:11 2019 - [info] Executing FLUSH NO_WRITE_TO_BINLOG TABLES. This may take long time..

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] Checking MHA is not monitoring or doing failover..

Tue Aug 27 20:50:11 2019 - [info] Checking replication health on 192.168.123.63..

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] Checking replication health on 192.168.123.64..

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] 192.168.123.63 can be new master.

Tue Aug 27 20:50:11 2019 - [info]

From:

192.168.123.23(192.168.123.23:3306) (current master)

+--192.168.123.63(192.168.123.63:3306)

+--192.168.123.64(192.168.123.64:3306)

To:

192.168.123.63(192.168.123.63:3306) (new master)

+--192.168.123.64(192.168.123.64:3306)

+--192.168.123.23(192.168.123.23:3306)

Tue Aug 27 20:50:11 2019 - [info] Checking whether 192.168.123.63(192.168.123.63:3306) is ok for the new master..

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] 192.168.123.23(192.168.123.23:3306): SHOW SLAVE STATUS returned empty result. To check replication filtering rules, temporarily executing CHANGE MASTER to a dummy host.

Tue Aug 27 20:50:11 2019 - [info] 192.168.123.23(192.168.123.23:3306): Resetting slave pointing to the dummy host.

Tue Aug 27 20:50:11 2019 - [info] ** Phase 1: Configuration Check Phase completed.

Tue Aug 27 20:50:11 2019 - [info]

Tue Aug 27 20:50:11 2019 - [info] * Phase 2: Rejecting updates Phase..

Tue Aug 27 20:50:11 2019 - [info]

Tue Aug 27 20:50:11 2019 - [info] Executing master ip online change script to disable write on the current master:

Tue Aug 27 20:50:11 2019 - [info] /etc/mha/app1/master_ip_online_change --command=stop --orig_master_host=192.168.123.23 --orig_master_ip=192.168.123.23 --orig_master_port=3306 --orig_master_user='mha' --new_master_host=192.168.123.63 --new_master_ip=192.168.123.63 --new_master_port=3306 --new_master_user='mha' --orig_master_ssh_user=root --new_master_ssh_user=root --orig_master_is_new_slave --orig_master_password=xxx --new_master_password=xxx

Unknown option: orig_master_ssh_user

Unknown option: new_master_ssh_user

Unknown option: orig_master_is_new_slave

Unknown option: orig_master_password

Unknown option: new_master_password

Tue Aug 27 20:50:11 2019 220111 Set read_only on the new master.. ok.

Tue Aug 27 20:50:11 2019 223259 Set read_only=1 on the orig master.. ok.

Disabling the VIP on old master: 192.168.123.23

Tue Aug 27 20:50:11 2019 372793 Killing all application threads..

Tue Aug 27 20:50:11 2019 372908 done.

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] Locking all tables on the orig master to reject updates from everybody (including root):

Tue Aug 27 20:50:11 2019 - [info] Executing FLUSH TABLES WITH READ LOCK..

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info] Orig master binlog:pos is mysql-bin.000004:194.

Tue Aug 27 20:50:11 2019 - [info] Waiting to execute all relay logs on 192.168.123.63(192.168.123.63:3306)..

Tue Aug 27 20:50:11 2019 - [info] master_pos_wait(mysql-bin.000004:194) completed on 192.168.123.63(192.168.123.63:3306). Executed 0 events.

Tue Aug 27 20:50:11 2019 - [info] done.

Tue Aug 27 20:50:11 2019 - [info] Getting new master's binlog name and position..

Tue Aug 27 20:50:11 2019 - [info] mysql-bin.000004:194

Tue Aug 27 20:50:11 2019 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.123.63', MASTER_PORT=3306, MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=194, MASTER_USER='repl_user', MASTER_PASSWORD='xxx';

Tue Aug 27 20:50:11 2019 - [info] Executing master ip online change script to allow write on the new master:

Tue Aug 27 20:50:11 2019 - [info] /etc/mha/app1/master_ip_online_change --command=start --orig_master_host=192.168.123.23 --orig_master_ip=192.168.123.23 --orig_master_port=3306 --orig_master_user='mha' --new_master_host=192.168.123.63 --new_master_ip=192.168.123.63 --new_master_port=3306 --new_master_user='mha' --orig_master_ssh_user=root --new_master_ssh_user=root --orig_master_is_new_slave --orig_master_password=xxx --new_master_password=xxx

Unknown option: orig_master_ssh_user

Unknown option: new_master_ssh_user

Unknown option: orig_master_is_new_slave

Unknown option: orig_master_password

Unknown option: new_master_password

Tue Aug 27 20:50:11 2019 472102 Set read_only=0 on the new master.

Enabling the VIP - 192.168.123.22/24 on the new master - 192.168.123.63

Tue Aug 27 20:50:11 2019 - [info] ok.

Tue Aug 27 20:50:11 2019 - [info]

Tue Aug 27 20:50:11 2019 - [info] * Switching slaves in parallel..

Tue Aug 27 20:50:11 2019 - [info]

Tue Aug 27 20:50:11 2019 - [info] -- Slave switch on host 192.168.123.64(192.168.123.64:3306) started, pid: 13833

Tue Aug 27 20:50:11 2019 - [info]

Tue Aug 27 20:50:12 2019 - [info] Log messages from 192.168.123.64 ...

Tue Aug 27 20:50:12 2019 - [info]

Tue Aug 27 20:50:11 2019 - [info] Waiting to execute all relay logs on 192.168.123.64(192.168.123.64:3306)..

Tue Aug 27 20:50:11 2019 - [info] master_pos_wait(mysql-bin.000004:194) completed on 192.168.123.64(192.168.123.64:3306). Executed 0 events.

Tue Aug 27 20:50:11 2019 - [info] done.

Tue Aug 27 20:50:11 2019 - [info] Resetting slave 192.168.123.64(192.168.123.64:3306) and starting replication from the new master 192.168.123.63(192.168.123.63:3306)..

Tue Aug 27 20:50:11 2019 - [info] Executed CHANGE MASTER.

Tue Aug 27 20:50:11 2019 - [info] Slave started.

Tue Aug 27 20:50:12 2019 - [info] End of log messages from 192.168.123.64 ...

Tue Aug 27 20:50:12 2019 - [info]

Tue Aug 27 20:50:12 2019 - [info] -- Slave switch on host 192.168.123.64(192.168.123.64:3306) succeeded.

Tue Aug 27 20:50:12 2019 - [info] Unlocking all tables on the orig master:

Tue Aug 27 20:50:12 2019 - [info] Executing UNLOCK TABLES..

Tue Aug 27 20:50:12 2019 - [info] ok.

Tue Aug 27 20:50:12 2019 - [info] Starting orig master as a new slave..

Tue Aug 27 20:50:12 2019 - [info] Resetting slave 192.168.123.23(192.168.123.23:3306) and starting replication from the new master 192.168.123.63(192.168.123.63:3306)..

Tue Aug 27 20:50:12 2019 - [info] Executed CHANGE MASTER.

Tue Aug 27 20:50:12 2019 - [info] Slave started.

Tue Aug 27 20:50:12 2019 - [info] All new slave servers switched successfully.

Tue Aug 27 20:50:12 2019 - [info]

Tue Aug 27 20:50:12 2019 - [info] * Phase 5: New master cleanup phase..

Tue Aug 27 20:50:12 2019 - [info]

Tue Aug 27 20:50:12 2019 - [info] 192.168.123.63: Resetting slave info succeeded.

Tue Aug 27 20:50:12 2019 - [info] Switching master to 192.168.123.63(192.168.123.63:3306) completed successfully.

在线切换成功

5.5.4 验证切换结果

[root@mha-m1 ~]# ifconfig |grep eth0:1

[root@mha-m1 ~]#

[root@mha-s1 ~]# ifconfig |grep eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

[root@mha-s1 ~]#

[root@mha-s2 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.63

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

[root@mha-m1 ~]# mysql -uroot -p -e "show slave status \G" |egrep "Yes|Master_Host"

Enter password:

Master_Host: 192.168.123.63

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

参考

https://github.com/yoshinorim/mha4mysql-manager/wiki/masterha_manager

- 我的微信

- 这是我的微信扫一扫

-

- 我的微信公众号

- 我的微信公众号扫一扫

-