1 Basic environment setup

1.1 Server plan

| IP | Hostname | Role | OS version |
|---|---|---|---|
| 192.168.88.230 | controller | Controller node | Ubuntu 18.04.2 |
| 192.168.88.231 | compute01 | Compute node | Ubuntu 18.04.2 |
| 192.168.88.232 | compute02 | Compute node | Ubuntu 18.04.2 |

1.2 OS version and kernel

upsmart@controller:~$ cat /etc/issue && uname -a

Ubuntu 18.04.2 LTS \n \l

Linux controller 4.4.0-131-generic #157-Ubuntu SMP Thu Jul 12 15:51:36 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

root@controller:~# uname -r

4.15.0-54-generic

root@controller:~# tail -3 /etc/hosts

192.168.88.230 controller

192.168.88.231 compute01

192.168.88.232 compute02

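1.3 General workflow for installing an OpenStack service

Every OpenStack service other than keystone is installed with the same sequence of steps:

1) Create the service database and grant privileges on it;

2) Create the service user in keystone and assign it a role;

3) Create the service entity in keystone and register its API endpoints;

4) Install the packages;

5) Edit the configuration files (database connection and related settings);

6) Sync the database;

7) Start the service.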
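Step 1 is repeated for every service, with only the database name, user, and password changing. A minimal sketch of that pattern follows; the helper name `gen_db_grants` is hypothetical (it is not part of OpenStack or MariaDB), and it only prints SQL rather than touching the database.

```shell
#!/bin/sh
# gen_db_grants DB USER PASSWORD  (hypothetical helper)
# Prints the CREATE DATABASE / GRANT statements used throughout this guide
# for a single service. It prints SQL only; nothing is executed.
gen_db_grants() {
    db=$1; user=$2; pass=$3
    printf 'CREATE DATABASE %s;\n' "$db"
    # One grant for local connections, one for connections from any host.
    for host in localhost '%'; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}

# Example: print the statements for the glance database.
gen_db_grants glance glance 123456
```

The output could then be piped into the database client, for example `gen_db_grants glance glance 123456 | sudo mysql`, assuming the local root account can log in that way on your installation.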

1.4 OpenStack service deployment order

Minimal deployment for Rocky

At a minimum, you need to install the following services. Install the services in the order specified below:

Identity service – keystone installation for Rocky

Image service – glance installation for Rocky

Compute service – nova installation for Rocky

Networking service – neutron installation for Rocky

Dashboard – horizon installation for Rocky

Block Storage service – cinder installation for Rocky

1.5 Time synchronization (chrony)

controller:

sudo apt-get update

sudo apt-get install chrony

sudo vim /etc/chrony/chrony.conf

allow 0/0

sudo service chrony restart

Other nodes:

sudo apt-get install chrony -y

sudo vim /etc/chrony/chrony.conf

server controller iburst

sudo service chrony restart

Verify:

sudo chronyc sources
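In the `chronyc sources` output, the line for the currently selected time source begins with `^*`. As a small sketch of an automated check (the function name `has_synced_source` is hypothetical), the output can be scanned for that marker:

```shell
#!/bin/sh
# has_synced_source: reads `chronyc sources` output on stdin and succeeds
# if at least one line starts with "^*", the marker for the selected source.
has_synced_source() {
    grep -q '^\^\*'
}

# Run the check only where chronyc is installed.
if command -v chronyc >/dev/null 2>&1; then
    if chronyc sources | has_synced_source; then
        echo "chrony has selected a time source"
    else
        echo "chrony has not selected a time source yet"
    fi
fi
```

On a compute node the selected source is expected to be `controller`; a source can take a short while to be selected after chrony restarts.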

1.6 Installing the OpenStack base packages

Add the apt repository:

sudo apt install software-properties-common

sudo add-apt-repository cloud-archive:rocky

sudo apt update && sudo apt dist-upgrade

Install the OpenStack client:

sudo apt install python-openstackclient -y

1.7 Network interface configuration

On the controller and compute nodes (required):

cat /etc/network/interfaces

auto enp3s0

iface enp3s0 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

service networking restart

1.8 Base service: MySQL (MariaDB)

sudo apt install mariadb-server python-pymysql

Create the configuration file:

cat /etc/mysql/mariadb.conf.d/99-openstack.cnf

[mysqld]

bind-address = 192.168.88.230

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

Start MariaDB:

sudo service mysql start

Run the MariaDB secure installation

To secure the database service, run the ``mysql_secure_installation`` script. In particular, choose a suitable password for the database root account.

sudo mysql_secure_installation

1.9 Base service: RabbitMQ

Install the RabbitMQ message queue:

apt install rabbitmq-server

Add the openstack user:

sudo rabbitmqctl add_user openstack 123456

Grant the openstack user configure, write, and read permissions:

sudo rabbitmqctl set_permissions openstack ".*" ".*" ".*"

1.10 Base service: Memcached

Install the Memcached cache:

sudo apt install memcached python-memcache

Edit /etc/memcached.conf and change the listen address:

-l 192.168.88.230

Restart memcached:

sudo service memcached restart

1.11 Base service: Etcd

Install etcd:

sudo apt install etcd

Configure etcd:

vim /etc/default/etcd

ETCD_NAME="controller"

ETCD_DATA_DIR="/var/lib/etcd"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER="controller=http://192.168.88.230:2380"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.88.230:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.88.230:2379"

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.88.230:2379"

Enable and start etcd:

# systemctl enable etcd

# systemctl start etcd

2 Keystone installation and configuration

2.1 Create the database

Create the keystone database and grant privileges on it:

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost'

IDENTIFIED BY '123456';

2.2 Install keystone

sudo apt install keystone apache2 libapache2-mod-wsgi

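The packages installed are the keystone service, the Apache HTTP server, and mod_wsgi, the middleware that connects Python applications to the web server.

2.3 Edit the configuration file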

cp /etc/keystone/keystone.conf{,.bak}

vim /etc/keystone/keystone.conf

[database]

# ...

connection = mysql+pymysql://keystone:123456@controller/keystone

[token]

# ...

provider = fernet

2.4 Populate the Identity service database

su -s /bin/sh -c "keystone-manage db_sync" keystone

2.5 Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

2.6 Bootstrap the Identity service and its API endpoints

keystone-manage bootstrap --bootstrap-password 123456 \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

2.7 Configure the Apache HTTP server

sudo vim /etc/apache2/apache2.conf

ServerName controller

service apache2 restart

2.8 Configure the admin account through environment variables (current shell only)

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

2.9 Create a domain, projects, users, and roles

Create a domain:

openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | ccaa9a58a7fb4f99b973fcde355cd755 |

| name | example |

| tags | [] |

+-------------+----------------------------------+

Create the service project:

openstack project create --domain default \
  --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | fe907c88d26041b59ce7c1d720f23ff0 |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

Create the myproject project:

openstack project create --domain default \
  --description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 54fa86df40fd4902a0f21d981a77a6fa |

| is_domain | False |

| name | myproject |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

Create the myuser user:

openstack user create --domain default --password-prompt myuser

User Password:123456

Repeat User Password:123456

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | f4748f17e51c4c99b984f66d8cc7f8e6 |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Create the myrole role:

openstack role create myrole

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 3c2ca1f56b0c491da2ac8409dd4a74e7 |

| name | myrole |

+-----------+----------------------------------+

Add the myrole role to the myproject project and myuser user:

openstack role add --project myproject --user myuser myrole

Verify operation

unset OS_AUTH_URL OS_PASSWORD

As the admin user, request an authentication token:

openstack --os-auth-url http://controller:5000/v3 \
  --os-project-domain-name Default --os-user-domain-name Default \
  --os-project-name admin --os-username admin token issue

Password: 123456

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2019-07-11T15:52:12+0000 |

| id | gAAAAABdJ00c5y2RmphW6XgT8Q2ZpAz15HKw-pNyI29wJ6HGY2iub3zBDdu4x0iA3EV-mGfFWGZstim-kkfPuetpGTHiucoJzmyVHj7sSmAfplVoYOrGNJBWHOQdMgzPaWgVjUUsTmtpjzRQeKbhlfz1bCxOZyveVfwyWjTudxfGrheX9UEXg8g |

| project_id | 211b8ac07f9c4e59bc7222c9a690fc52 |

| user_id | 45f2e5357fad4b28968a83ac7643e114 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

As the myuser user, request an authentication token:

openstack --os-auth-url http://controller:5000/v3 \
  --os-project-domain-name Default --os-user-domain-name Default \
  --os-project-name myproject --os-username myuser token issue

Password:

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2019-07-11T15:52:59+0000 |

| id | gAAAAABdJ01LsNdRMZVAb8RQyBDd77G0A2Rw3muyk17xAnsgR4um6OhFyJMCcuWLYIf-zIzc2imeJ8J4QUbrdQ0ob_eZdzL_t7liYaJ03dyXcOizkguTuxK42gIcmjT0Adec4UT8HJz1nI3NuApSD8ay6DUFsBEmB1hvTkHhIGSNtsnkqU7mNM8 |

| project_id | 54fa86df40fd4902a0f21d981a77a6fa |

| user_id | f4748f17e51c4c99b984f66d8cc7f8e6 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

2.10 Create the OpenStack client environment scripts

cat admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

cat demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
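The two files above differ only in project, user, and password. A minimal sketch of generating them follows; the helper name `write_openrc` is hypothetical, and the sketch assumes the password contains no characters that need shell quoting.

```shell
#!/bin/sh
# write_openrc FILE PROJECT USERNAME PASSWORD  (hypothetical helper)
# Writes a client environment script with the same variables as the
# admin-openrc and demo-openrc files shown in this section.
# Assumption: PASSWORD contains no characters that need shell quoting.
write_openrc() {
    cat > "$1" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$2
export OS_USERNAME=$3
export OS_PASSWORD=$4
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    # The file holds a plaintext password, so restrict it to the owner.
    chmod 600 "$1"
}

# Usage:
#   write_openrc admin-openrc admin admin 123456
#   write_openrc demo-openrc myproject myuser 123456
#   . admin-openrc
```

Sourcing one of the files (`. admin-openrc`) loads the credentials into the current shell, so later `openstack` commands need no `--os-*` options.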

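3 Image service (glance) deployment

Glance provides the images from which virtual machines are created. We use OpenStack to build a basic IaaS platform that offers virtual machines, and each virtual machine needs an operating system chosen at creation time; glance supplies the operating system images for that choice.

3.1 Create the glance database and grant privileges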

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%'

IDENTIFIED BY '123456';

3.2 Create the glance service credentials

3.2.1 Create the glance user

openstack user create --domain default --password-prompt glance

User Password:123456

Repeat User Password:123456

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | d352002ebf0f4e46b5a6da033cf9cbd9 |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

3.2.2 Add the admin role to the glance user in the service project

openstack role add --project service --user glance admin

3.2.3 Create the glance service entity

openstack service create --name glance \
  --description "OpenStack Image" image

3.2.4 Create the Image service API endpoints

openstack endpoint create --region RegionOne \
  image public http://controller:9292

openstack endpoint create --region RegionOne \
  image internal http://controller:9292

openstack endpoint create --region RegionOne \
  image admin http://controller:9292
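The three endpoint commands differ only in the interface name (public, internal, admin), and the same pattern recurs for nova, placement, and neutron. As a sketch, a small hypothetical helper `endpoint_cmds` can print the three commands for any service type and URL:

```shell
#!/bin/sh
# endpoint_cmds SERVICE_TYPE URL  (hypothetical helper)
# Prints the three "openstack endpoint create" commands used in this guide,
# one per interface. It prints commands only; nothing is executed.
endpoint_cmds() {
    for iface in public internal admin; do
        printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
            "$1" "$iface" "$2"
    done
}

# Example: the Image service endpoints from this section.
endpoint_cmds image http://controller:9292
```

After reviewing the printed commands, they can be run by piping them to a shell in which the admin credentials are loaded, for example `endpoint_cmds image http://controller:9292 | sh`.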

3.3 Install the glance packages

sudo apt install glance

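Service notes:

glance-api: handles image upload, download, listing, and deletion

glance-registry: stores and serves image metadata (the properties an image requires)

3.4 Edit the glance configuration files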

sudo cp -r /etc/glance/glance-api.conf{,.bak}

vim /etc/glance/glance-api.conf

[database]

#connection = sqlite:////var/lib/glance/glance.sqlite

#backend = sqlalchemy

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123456

[paste_deploy]

# ...

flavor = keystone

[glance_store]

# ...

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

sudo cp /etc/glance/glance-registry.conf{,.bak}

[database]

# ...

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 123456

[paste_deploy]

# ...

flavor = keystone

3.5 Sync the database

sudo su -s /bin/sh -c "glance-manage db_sync" glance

Check that the database was populated:

[root@controller ~]# mysql glance -e "show tables" |wc -l

21

3.6 Restart the Image services

sudo service glance-registry restart

sudo service glance-api restart

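3.7 Verify the Image service

This completes the glance configuration.

4 Compute service (nova) deployment

Nova is the compute fabric controller of an OpenStack cloud. It handles every activity in the life cycle of instances, which makes it the platform responsible for managing compute resources, networking, authorization, and scalability. Nova provides no virtualization capability of its own, however; it uses the libvirt API to interact with the supported hypervisors. Nova exposes its functionality through a web services API that is compatible with the Amazon Web Services (AWS) EC2 API.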

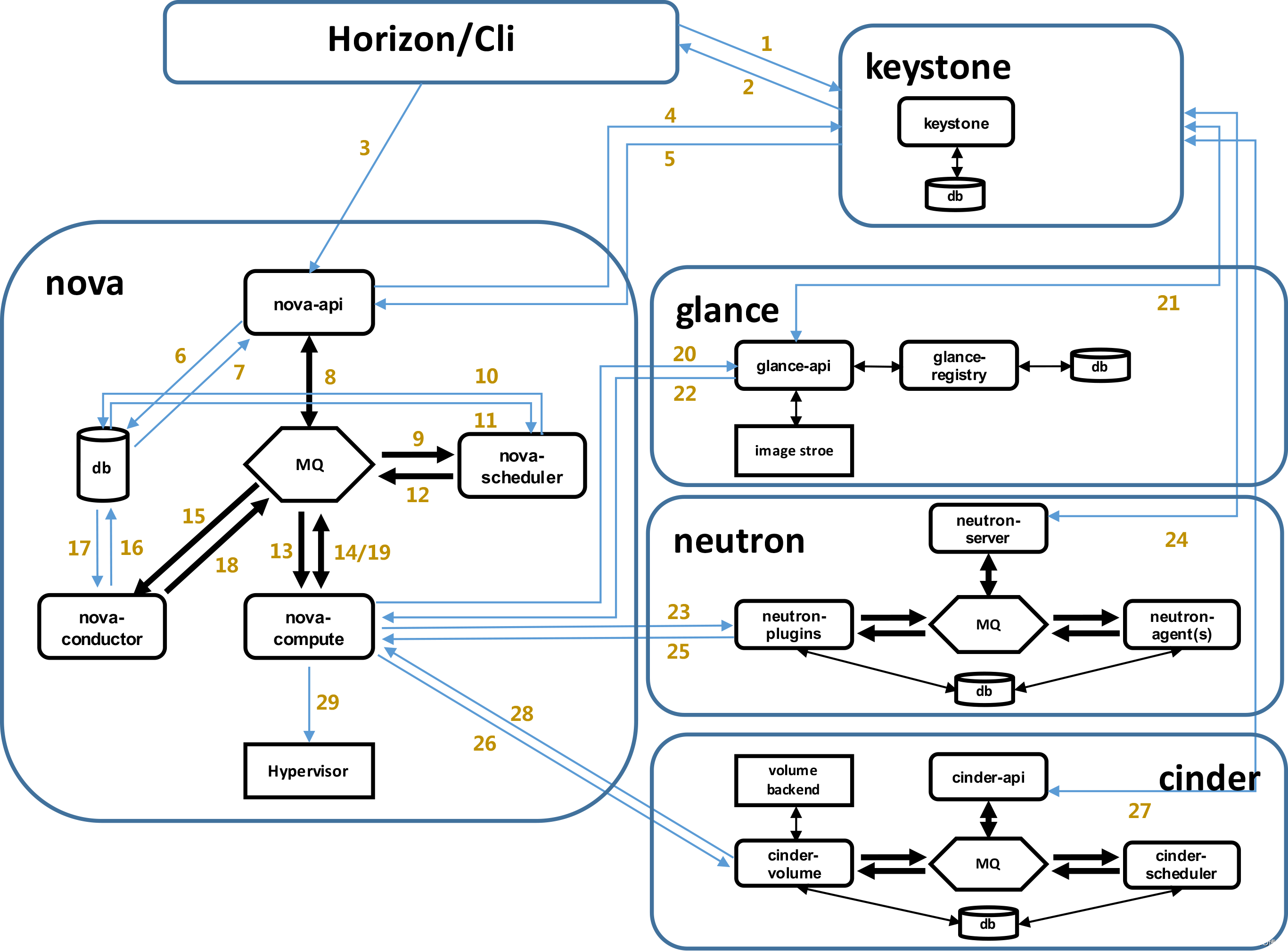

4.1 Nova workflow

4.2 Install and configure the controller node

4.2.1 Create the databases and grant privileges

mysql -uroot -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%'

IDENTIFIED BY '123456';

4.2.2 Create the users in keystone and assign roles

Create the nova user:

openstack user create --domain default --password-prompt nova

User Password:123456

Repeat User Password:123456

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 7bdef8c7b1eb4507bd7345b42eabd2a9 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the nova user:

openstack role add --project service --user nova admin

4.2.3 Create the service entities in keystone and register the API endpoints

Create the nova service entity:

openstack service create --name nova \
  --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 0b89b806d21a49db9792bd8d14c4d374 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

Create the Compute API service endpoints:

openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1

Create the placement user:

openstack user create --domain default --password-prompt placement

User Password:123456

Repeat User Password:123456

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 8ee029fc8065417cbb35693242f22f83 |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the placement user to the service project with the admin role:

openstack role add --project service --user placement admin

Create the placement service entity:

openstack service create --name placement \
  --description "Placement API" placement

Create the Placement API service endpoints:

openstack endpoint create --region RegionOne \
  placement public http://controller:8778

openstack endpoint create --region RegionOne \
  placement internal http://controller:8778

openstack endpoint create --region RegionOne \
  placement admin http://controller:8778

4.2.4 Install the nova packages

sudo apt install nova-api nova-conductor nova-consoleauth \
  nova-novncproxy nova-scheduler nova-placement-api

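Package notes:

nova-api # provides the API endpoints

nova-scheduler # scheduling

nova-conductor # performs database operations on behalf of the compute nodes

nova-consoleauth # authorizes the tokens used by the console proxies

nova-novncproxy # provides browser-based VNC access to instance consoles

nova-compute # drives libvirtd to manage the virtual machine life cycle

4.2.5 Edit the nova configuration file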

sudo cp /etc/nova/nova.conf{,.bak}

vim /etc/nova/nova.conf

[api_database]

# ...

connection = mysql+pymysql://nova:123456@controller/nova_api

[database]

# ...

connection = mysql+pymysql://nova:123456@controller/nova

[placement_database]

# ...

connection = mysql+pymysql://placement:123456@controller/placement

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123456

[DEFAULT]

# ...

my_ip = 192.168.88.230

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = true

# ...

server_listen = $my_ip

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.88.230:6080/vnc_auto.html

[glance]

# ...

api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456

4.2.6 Sync the databases

Populate the nova-api and placement databases:

su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database:

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell:

sudo su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

16da0d9a-3477-4ad7-ba0c-78a1368bf4c2

Populate the nova database:

su -s /bin/sh -c "nova-manage db sync" nova

Verify that nova cell0 and cell1 are registered correctly:

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

4.2.7 Restart the nova services

service nova-api restart

service nova-consoleauth restart

service nova-scheduler restart

service nova-conductor restart

service nova-novncproxy restart

4.3 Install and configure nova on the compute nodes

4.3.1 Install nova-compute

apt install nova-compute -y

4.3.2 Edit the nova-compute configuration

cp /etc/nova/nova.conf{,.bak}

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123456

[DEFAULT]

# ...

my_ip = 192.168.88.231

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = 192.168.88.231

novncproxy_base_url = http://192.168.88.230:6080/vnc_auto.html

[glance]

# ...

api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456

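4.3.3 Start the nova-compute service

Check whether the compute node supports hardware acceleration for virtual machines:

egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns one or greater, the compute node supports hardware acceleration and usually needs no further configuration.

If the command returns zero, the compute node does not support hardware acceleration and libvirt must be configured to use QEMU instead of KVM.

Edit the [libvirt] section of /etc/nova/nova.conf; set virt_type to qemu if the command returned zero, otherwise keep kvm as shown: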

[libvirt]

# ...

virt_type = kvm
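The usual check counts the `vmx` (Intel) and `svm` (AMD) CPU flags in /proc/cpuinfo. A minimal sketch that turns that count into a `virt_type` value follows; the function name `pick_virt_type` is hypothetical, and the optional file argument exists only so the logic can be tried against a sample file.

```shell
#!/bin/sh
# pick_virt_type [CPUINFO_FILE]  (hypothetical helper)
# Prints "kvm" if the CPU flags include vmx or svm, otherwise "qemu".
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints the number of matching lines (0 if there are none).
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" 2>/dev/null)
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Show the value this node would use in the [libvirt] section.
echo "virt_type = $(pick_virt_type)"
```

The printed line is only a suggestion for the `[libvirt]` section; the configuration file still has to be edited by hand, followed by a restart of nova-compute.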

Restart the service:

service nova-compute restart

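4.3.4 Check the compute node status from the controller node

5 Networking service (neutron) deployment

OpenStack Networking (neutron) lets you create interface devices managed by other OpenStack services and attach them to networks. Plug-ins can be implemented to accommodate different networking equipment and software, which gives flexibility to OpenStack architecture and deployment.

OpenStack Networking (neutron) manages all networking facets of the Virtual Networking Infrastructure (VNI) and the access-layer aspects of the Physical Networking Infrastructure (PNI) in your OpenStack environment. It allows projects to create advanced virtual network topologies, which may include services such as firewalls, load balancers, and virtual private networks (VPNs).

5.1 Install and configure the controller node

5.1.1 Create the neutron database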

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost'

IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%'

IDENTIFIED BY '123456';

5.1.2 Create the user in keystone and assign a role

Create the neutron user:

openstack user create --domain default --password-prompt neutron

Add the admin role to the neutron user in the service project:

openstack role add --project service --user neutron admin

5.1.3 Create the service entity in keystone and register the API endpoints

Create the neutron service entity:

openstack service create --name neutron \
  --description "OpenStack Networking" network

Create the Networking service API endpoints:

openstack endpoint create --region RegionOne \
  network public http://controller:9696

openstack endpoint create --region RegionOne \
  network internal http://controller:9696

openstack endpoint create --region RegionOne \
  network admin http://controller:9696

5.1.4 Install the neutron packages

Networking option 2 (self-service networks) is used here:

sudo apt install neutron-server neutron-plugin-ml2 neutron-linuxbridge-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent

5.1.5 Configure neutron

Edit /etc/neutron/neutron.conf:

sudo cp /etc/neutron/neutron.conf{,.bak}

[database]

# ...

connection = mysql+pymysql://neutron:123456@controller/neutron

[DEFAULT]

# ...

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

# ...

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Configure the Modular Layer 2 (ML2) plug-in

sudo cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

[ml2]

# ...

type_drivers = flat,vlan,vxlan

[ml2]

# ...

tenant_network_types = vxlan

[ml2]

# ...

mechanism_drivers = linuxbridge,l2population

[ml2]

# ...

extension_drivers = port_security

[ml2_type_flat]

# ...

flat_networks = provider

[ml2_type_vxlan]

# ...

vni_ranges = 1:1000

[securitygroup]

# ...

enable_ipset = true

Configure the Linux bridge agent

sudo cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

[linux_bridge]

physical_interface_mappings = provider:enp5s0

[vxlan]

enable_vxlan = true

local_ip = 192.168.88.230

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

sudo vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

sudo sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

Configure the layer-3 agent

sudo cp /etc/neutron/l3_agent.ini{,.bak}

[DEFAULT]

# ...

interface_driver = linuxbridge


Configure the metadata agent

sudo cp /etc/neutron/metadata_agent.ini{,.bak}

[DEFAULT]

# ...

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

Configure the Compute service (nova) to use the Networking service

vim /etc/nova/nova.conf

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET
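METADATA_SECRET is a placeholder, and the same value has to appear in both metadata_agent.ini and nova.conf. A sketch for generating a random value follows, assuming /dev/urandom, `head -c`, and `od` are available on the node; the function name `gen_secret` is hypothetical.

```shell
#!/bin/sh
# gen_secret  (hypothetical helper)
# Prints 16 random bytes as 32 lowercase hexadecimal characters.
gen_secret() {
    head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

# Generate one value and reuse it in both configuration files.
secret=$(gen_secret)
echo "metadata_proxy_shared_secret = $secret"
```

Generate the value once and paste the same string into both files; calling the function separately for each file would produce two different secrets.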


Configure the DHCP agent

Edit /etc/neutron/dhcp_agent.ini:

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

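5.1.6 Sync the database

The Networking service initialization scripts expect a symbolic link, /etc/neutron/plugin.ini, that points to the ML2 plug-in configuration file /etc/neutron/plugins/ml2/ml2_conf.ini.

If the symbolic link does not exist, create it with the following command:

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

5.1.7 Start the neutron services

Restart the Compute API service: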

service nova-api restart

Restart the Networking services:

service neutron-server restart

service neutron-linuxbridge-agent restart

service neutron-dhcp-agent restart

service neutron-metadata-agent restart

service neutron-l3-agent restart

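5.2 Install and configure the compute nodes

5.2.1 Install the neutron packages for compute nodes

apt install neutron-linuxbridge-agent

5.2.2 Edit the configuration files

Edit /etc/neutron/neutron.conf and make the following changes: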

cp /etc/neutron/neutron.conf{,.bak}

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ..

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Configure the Linux bridge agent

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

[linux_bridge]

physical_interface_mappings = provider:enp3s0

[vxlan]

enable_vxlan = true

local_ip = 192.168.88.231

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

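Load the br_netfilter module, add the two bridge settings shown below to /etc/sysctl.conf, and apply them:

sudo modprobe br_netfilter

sudo vim /etc/sysctl.conf

root@compute01:~# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

Configure the Compute service on the compute node to use the Networking service

Edit /etc/nova/nova.conf: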

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

5.2.3 Start the neutron services

service nova-compute restart

service neutron-linuxbridge-agent restart

List the agents to verify that they registered with neutron successfully:

openstack network agent list

6 Dashboard (horizon web interface) installation

6.1 Install the horizon packages

apt install openstack-dashboard

6.2 Edit the configuration file

cp /etc/openstack-dashboard/local_settings.py{,.bak}

Configure the dashboard to use the OpenStack services on the controller node:

OPENSTACK_HOST = "192.168.88.230"

Allow all hosts to access the dashboard:

ALLOWED_HOSTS = ['*']

# Configure the memcached session storage service

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

Enable version 3 of the Identity API:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

Enable support for domains:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

Configure the API versions:

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

Set Default as the default domain for users created through the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

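# If you chose networking option 1, disable support for layer-3 networking services (with option 2, as used in this guide, enable_router is normally left True)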

OPENSTACK_NEUTRON_NETWORK = {

...

'enable_router': False,

'enable_quotas': False,

'enable_ipv6': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

Optionally, configure the time zone:

TIME_ZONE = "Asia/Shanghai"

cat /etc/apache2/conf-available/openstack-dashboard.conf |grep "WSGIApplicationGroup"

WSGIApplicationGroup %{GLOBAL}

6.3 Reload the web server

service apache2 reload

6.4 Access the dashboard

http://192.168.88.230/horizon/project/instances/

7 Launch the first instance

How an instance gets created

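1. When you open the Dashboard you are shown a login page. The Dashboard is effectively saying: you want to create an instance with OpenStack? Then hand me your username and password first; I will verify your identity with Keystone before I let you in.

2. Keystone receives the domain, username, and password from the login form, checks them against its database, and once the identity is confirmed returns a token to the user. From then on the user presents the token instead of the username and password.

3. With the token in hand, the user clicks the create-instance button in Horizon and fills in the instance configuration. After "Launch Instance" is clicked, Horizon goes to Nova-API carrying three things: the create-instance request, the instance configuration, and the token issued by Keystone.

4. Nova-API responds: Horizon wants an instance? Hand over your token first; I will verify it with Keystone before I create anything.

5. Keystone looks at the token: isn't this the one I just issued? Software is not that clever, though, so it dutifully queries the database again and then tells Nova-API: this one can be trusted, do as it asks.

6. Nova-API writes the instance configuration supplied by Horizon to the database (Nova-DB).

7. Once the write completes, Nova-DB tells Nova-API: done, the instance configuration is stored.

8. With the configuration stored, Nova-API publishes a create-instance message to the message queue (RabbitMQ), telling its workers: the configuration is in the database, sort it out among yourselves.

9. Nova-Scheduler constantly watches the message queue; when it sees the create-instance message, it gets to work.

10. Before it can decide which Nova-Compute should get the job, Nova-Scheduler needs to know what the instance requires, so it queries the database.

11. The database returns the requested instance configuration to Nova-Scheduler.

12. Nova-Scheduler runs its scheduling algorithm, picks a Nova-Compute, and publishes a message to the queue: Nova-Compute so-and-so, you are up; create an instance, the configuration is in the database.

13. Nova-Compute constantly watches the message queue; when it sees the message addressed to it, it gets to work. Note: it would seem natural for it to read the configuration straight from the database, but compute nodes are full of tenant instances. If one instance were compromised and the attacker gained control of the compute node, direct database access would be dangerous, so Nova-Compute is deliberately not told where the database is.

14. Nova-Compute cannot fetch anything from the database, but that does not stop it from working. It does not know where to look, but Nova-Conductor does, so Nova-Compute publishes a message to the queue: I need the configuration for this instance; Nova-Conductor, please fetch it for me.

15. Nova-Conductor constantly watches the message queue; when it sees the message from Nova-Compute, it gets to work.

16. Nova-Conductor asks the database for the configuration of the instance in question.

17. The database sends the instance configuration to Nova-Conductor.

18. Nova-Conductor publishes the instance configuration to the message queue.

19. Nova-Compute receives the instance configuration.

20. Nova-Compute reads the configuration and starts creating the instance right away. First it asks Glance-API: I need image so-and-so, please hand it over.

21. Glance-API is not having it: who are you? Hand over your token first; I will verify it with Keystone before I give you any image. Keystone checks the token: all good, give it to him.

22. Glance-API returns the image information to Nova-Compute.

23. With the image in hand, Nova-Compute moves on to network resources. It asks Neutron-Server: I need network resources such-and-such, please hand them over.

24. Neutron-Server is not having it: who are you? Hand over your token first; I will verify it with Keystone before I give you any network. Keystone checks the token: all good, give it to him.

25. Neutron-Server returns the network information to Nova-Compute.

26. With the network in hand, Nova-Compute moves on to storage. It asks Cinder-API: I need a volume of such-and-such size, please hand it over.

27. Cinder-API is not having it: who are you? Hand over your token first; I will verify it with Keystone before I give you any storage. Keystone checks the token: all good, give it to him.

28. Cinder-API returns the storage information to Nova-Compute.

29. With all resources in hand (image, network, storage), Nova-Compute still cannot create the instance by itself; it hands the job to libvirt, which creates the instance on the hypervisor (KVM/Xen).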

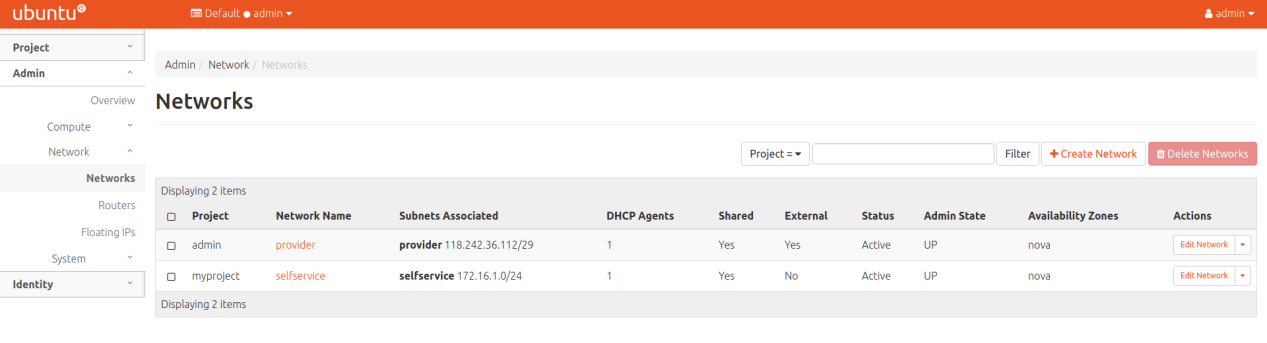

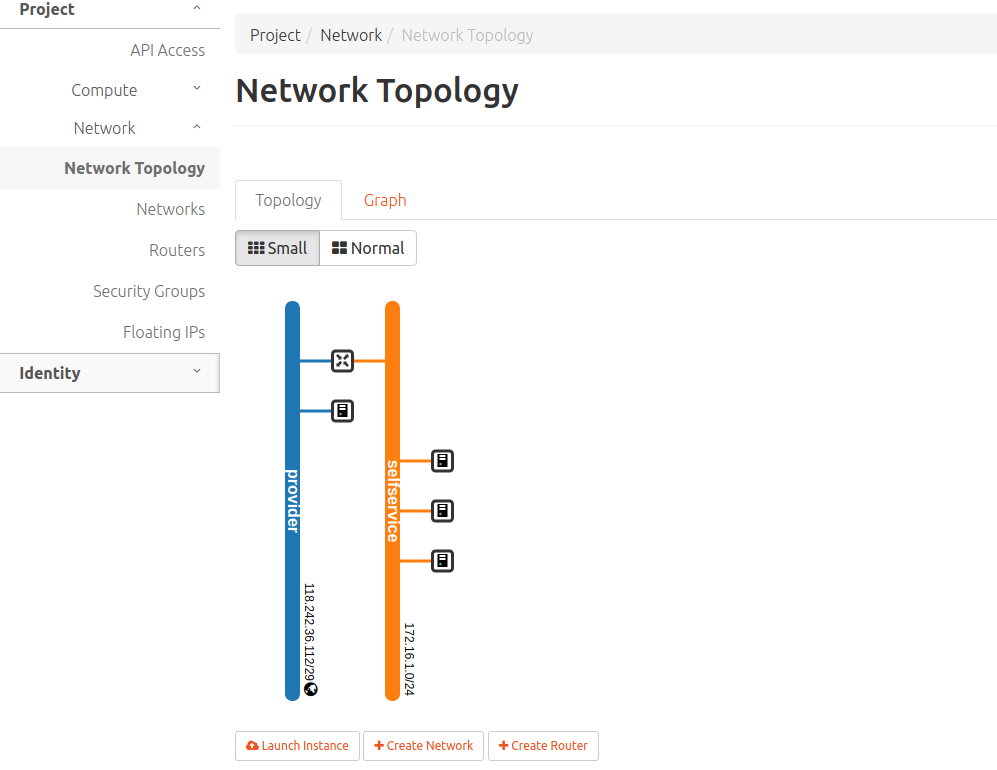

7.1 Create the provider virtual network

On the controller node, source the admin credentials to gain access to admin-only CLI commands:

source admin-openrc

openstack network create --share --external \
  --provider-physical-network provider \
  --provider-network-type flat provider

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2019-07-16T11:45:41Z |

| description | |

| dns_domain | None |

| id | 5d12de79-e29c-49f4-a9f2-4df109c7d79c |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1500 |

| name | provider |

| port_security_enabled | True |

| project_id | 211b8ac07f9c4e59bc7222c9a690fc52 |

| provider:network_type | flat |

| provider:physical_network | provider |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 0 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2019-07-16T11:45:41Z |

+---------------------------+--------------------------------------+

Create a subnet on the network:

openstack subnet create --network provider --allocation-pool start=118.242.36.114,end=118.242.36.118 --dns-nameserver 119.29.29.29 --gateway 118.242.36.113 --subnet-range 118.242.36.112/29 provider

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| allocation_pools | 118.242.36.114-118.242.36.118 |

| cidr | 118.242.36.112/29 |

| created_at | 2019-07-16T12:09:20Z |

| description | |

| dns_nameservers | 119.29.29.29 |

| enable_dhcp | True |

| gateway_ip | 118.242.36.113 |

| host_routes | |

| id | e3cf1d9e-b2c9-48b9-9e5d-fe7d62cc8e99 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | provider |

| network_id | 5d12de79-e29c-49f4-a9f2-4df109c7d79c |

| project_id | 211b8ac07f9c4e59bc7222c9a690fc52 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2019-07-16T12:09:20Z |

+-------------------+--------------------------------------+

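Notes:

# --subnet-range: the subnet that provides the IP addresses, in CIDR notation

# --allocation-pool start/end: the first and last addresses of the range handed out to instances

# --dns-nameserver: the IP address of the DNS resolver

# --gateway: the gateway address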
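A quick way to sanity-check the values passed to openstack subnet create is to confirm that the gateway and both ends of the allocation pool fall inside the --subnet-range block. The following is a minimal sketch in POSIX shell; the helper names ip_to_int and in_cidr are illustrative and are not part of any OpenStack tool.

```shell
#!/bin/sh
# Hypothetical helpers (not part of OpenStack): check IPv4 addresses against a CIDR block.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
)

# Succeed if address $1 lies inside CIDR block $2, e.g. 118.242.36.112/29.
in_cidr() (
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Block size is 2^(32 - prefix); two addresses share a block when
  # integer division by the block size gives the same quotient.
  size=1
  i=$prefix
  while [ "$i" -lt 32 ]; do
    size=$((size * 2))
    i=$((i + 1))
  done
  [ $((addr / size)) -eq $((net / size)) ]
)

# Values taken from the subnet created in this section.
cidr=118.242.36.112/29
for ip in 118.242.36.113 118.242.36.114 118.242.36.118; do
  if in_cidr "$ip" "$cidr"; then
    echo "$ip is inside $cidr"
  else
    echo "$ip is OUTSIDE $cidr"
  fi
done
```

The functions run in subshells, so the temporary IFS change and the working variables do not leak into the calling shell.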

7.2 Create the selfservice network

Source the demo credentials:

. demo-openrc

Create the network:

openstack network create selfservice

Create a subnet on the network:

openstack subnet create --network selfservice \
  --dns-nameserver 119.29.29.29 --gateway 172.16.1.1 \
  --subnet-range 172.16.1.0/24 selfservice

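7.3 Create a router to connect the two networks

Create the router:

openstack router create router

Add the self-service subnet as an interface on the router:

openstack router add subnet router selfservice

Set a gateway on the provider network for the router:

openstack router set router --external-gateway provider

Verify the operation. On the controller node, source the admin credentials and list the network namespaces; a qrouter namespace should be present:

. admin-openrc

ip netns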

qrouter-8b9d33e8-6f5d-4eab-95b7-f37dafb0ee16 (id: 0)

List the ports on the router to determine the gateway IP address on the provider network:

openstack port list --router router

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+

| ID | Name | MAC Address | Fixed IP Addresses | Status |

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+

| bd4fbe70-13fb-4d79-85d3-b7c1a331f9b3 | | fa:16:3e:99:1c:ab | ip_address='118.242.36.115', subnet_id='e3cf1d9e-b2c9-48b9-9e5d-fe7d62cc8e99' | BUILD |

| d24e6914-04de-4825-bf00-dda43e39b786 | | fa:16:3e:af:d2:e1 | ip_address='172.16.1.1', subnet_id='0b76b0b2-e963-4688-8f9d-b398e797316e' | BUILD |

+--------------------------------------+------+-------------------+-------------------------------------------------------------------------------+--------+
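To check connectivity from the router's own point of view, commands can be run inside the qrouter namespace reported by ip netns. The sketch below extracts the namespace name from that output; first_qrouter_ns is a hypothetical helper name, and the sample line is the one shown in this section.

```shell
#!/bin/sh
# Hypothetical helper: print the first qrouter-* namespace name read from stdin.
# Input is expected in the format printed by `ip netns`.
first_qrouter_ns() {
  awk '$1 ~ /^qrouter-/ { print $1; exit }'
}

# Demonstration with the sample output from this guide.
sample='qrouter-8b9d33e8-6f5d-4eab-95b7-f37dafb0ee16 (id: 0)'
ns=$(printf '%s\n' "$sample" | first_qrouter_ns)
echo "router namespace: $ns"
```

On the controller the real output can be piped in instead, and the result used to ping the provider gateway from inside the namespace, for example: ns=$(ip netns | first_qrouter_ns) followed by sudo ip netns exec "$ns" ping -c 4 118.242.36.113.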

7.4 Create a flavor

# Create a flavor named m1.nano with 1 vCPU, 64 MB of RAM, and a 1 GB disk

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

7.5 Configure a key pair

. demo-openrc

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

+-------------+-------------------------------------------------+

| Field | Value |

+-------------+-------------------------------------------------+

| fingerprint | 32:43:54:70:44:bd:7f:eb:6f:46:f0:09:39:22:13:f6 |

| name | mykey |

| user_id | f4748f17e51c4c99b984f66d8cc7f8e6 |

+-------------+-------------------------------------------------+

# List the key pairs

openstack keypair list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | 32:43:54:70:44:bd:7f:eb:6f:46:f0:09:39:22:13:f6 |

+-------+-------------------------------------------------+

7.6 Add security group rules

Permit ICMP (ping) and SSH (TCP port 22) in the default security group:

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

7.7 Launch an instance

Launch an instance on the self-service network

. demo-openrc

openstack flavor list

+----+---------+-----+------+-----------+-------+-----------+

| ID | Name | RAM | Disk | Ephemeral | VCPUs | Is Public |

+----+---------+-----+------+-----------+-------+-----------+

| 0 | m1.nano | 64 | 1 | 0 | 1 | True |

+----+---------+-----+------+-----------+-------+-----------+

openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 7350bbc9-7abc-40e6-8a5c-e85f68343423 | cirros | active |

+--------------------------------------+--------+--------+

openstack network list

+--------------------------------------+-------------+--------------------------------------+

| ID | Name | Subnets |

+--------------------------------------+-------------+--------------------------------------+

| 5d12de79-e29c-49f4-a9f2-4df109c7d79c | provider | e3cf1d9e-b2c9-48b9-9e5d-fe7d62cc8e99 |

| b828ee15-aaf9-4529-b50b-51694ce8bf86 | selfservice | 0b76b0b2-e963-4688-8f9d-b398e797316e |

+--------------------------------------+-------------+--------------------------------------+

openstack security group list

+--------------------------------------+---------+------------------------+----------------------------------+------+

| ID | Name | Description | Project | Tags |

+--------------------------------------+---------+------------------------+----------------------------------+------+

| 04617b8d-fea6-43ee-9efb-53b890ec6474 | default | Default security group | 54fa86df40fd4902a0f21d981a77a6fa | [] |

+--------------------------------------+---------+------------------------+----------------------------------+------+

Launch the instance, passing the ID of the selfservice network from the list above:

openstack server create --flavor m1.nano --image cirros \
  --nic net-id=b828ee15-aaf9-4529-b50b-51694ce8bf86 --security-group default \
  --key-name mykey selfservice-1

Check the status of the instance:

openstack server list

+--------------------------------------+---------------+--------+------------------------+--------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+---------------+--------+------------------------+--------+---------+

| ea7d7de2-b576-44f8-8388-509efc3002b2 | selfservice-1 | ACTIVE | selfservice=172.16.1.9 | cirros | m1.nano |

+--------------------------------------+---------------+--------+------------------------+--------+---------+
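An instance passes through BUILD before it reaches ACTIVE, so the status usually has to be checked more than once. The following sketch polls until a command prints the expected status; wait_for_status and POLL_INTERVAL are hypothetical names introduced here, and the demonstration uses echo in place of the real CLI call so that it runs without an OpenStack deployment.

```shell
#!/bin/sh
# Hypothetical helper: run a command repeatedly until it prints the wanted status.
# Usage: wait_for_status WANT MAX_TRIES COMMAND [ARGS...]
# Returns 0 on success, 2 if the command prints ERROR, 1 when tries run out.
wait_for_status() {
  want=$1
  tries=$2
  shift 2
  i=0
  while [ "$i" -lt "$tries" ]; do
    got=$("$@" 2>/dev/null) || got=""
    if [ "$got" = "$want" ]; then
      return 0
    elif [ "$got" = "ERROR" ]; then
      return 2
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-5}"
  done
  return 1
}

# Stand-in for the real status query, so the sketch runs anywhere.
POLL_INTERVAL=0
if wait_for_status ACTIVE 3 echo ACTIVE; then
  echo "instance is ACTIVE"
fi
```

Against a real deployment the polled command could be, for example, openstack server show selfservice-1 -f value -c status. As an alternative, openstack server create accepts --wait, which blocks until the build finishes.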

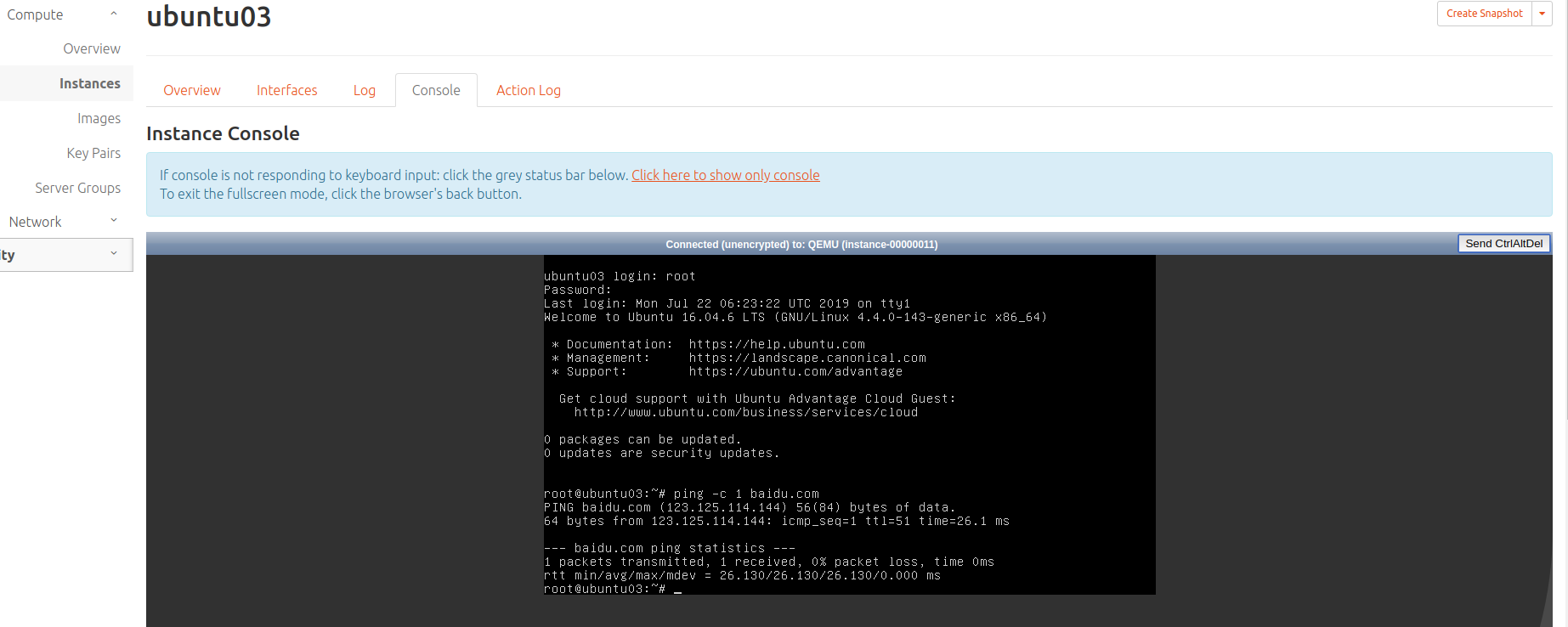

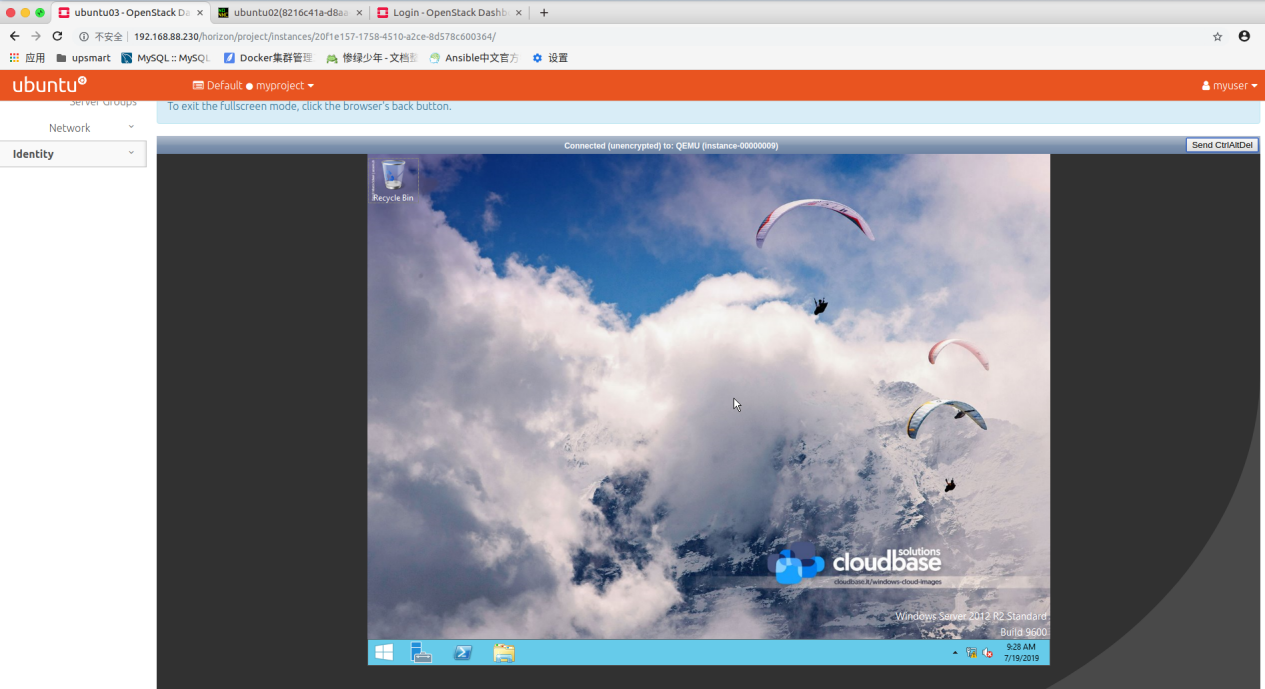

Access the instance through the virtual console. The instance can be given by name (selfservice-1) or by ID:

openstack console url show ea7d7de2-b576-44f8-8388-509efc3002b2

+-------+---------------------------------------------------------------------------------+

| Field | Value |

+-------+---------------------------------------------------------------------------------+

| type | novnc |

| url | http://controller:6080/vnc_auto.html?token=9d0a71b4-e147-4b83-8b23-8bc01c22a80a |

+-------+---------------------------------------------------------------------------------+

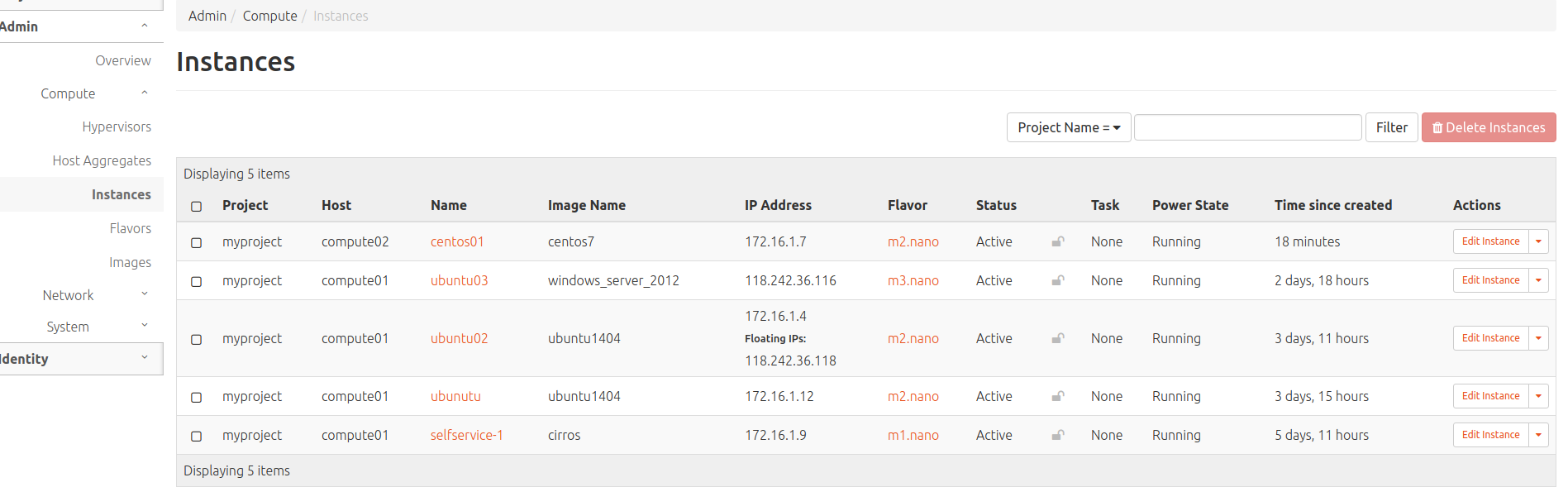

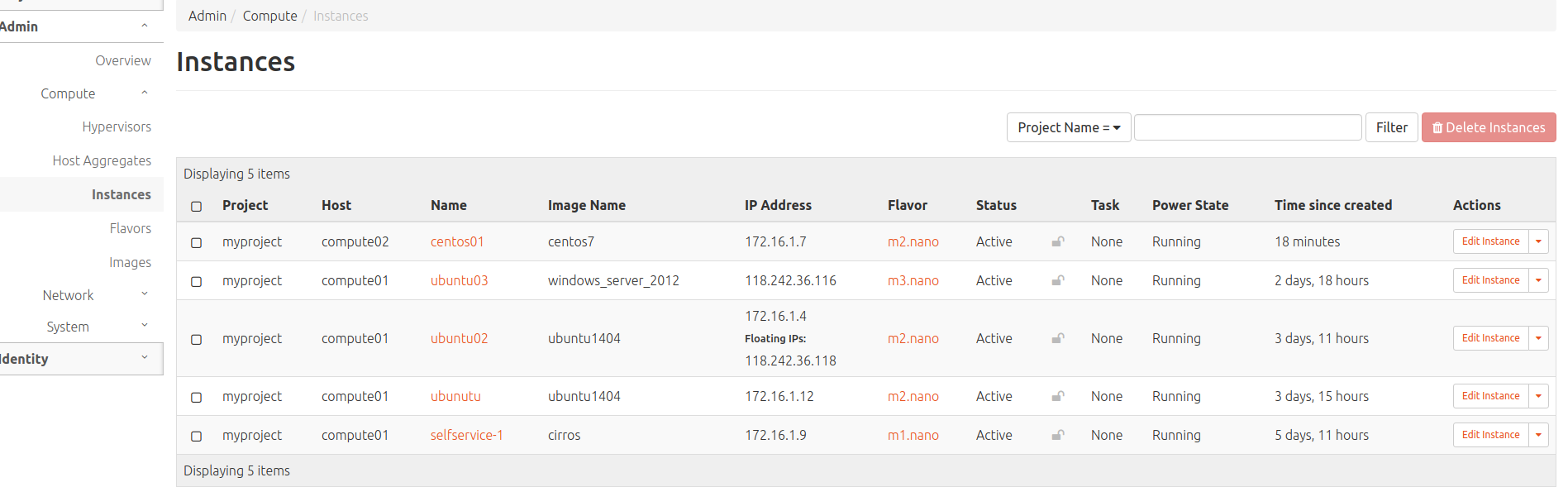

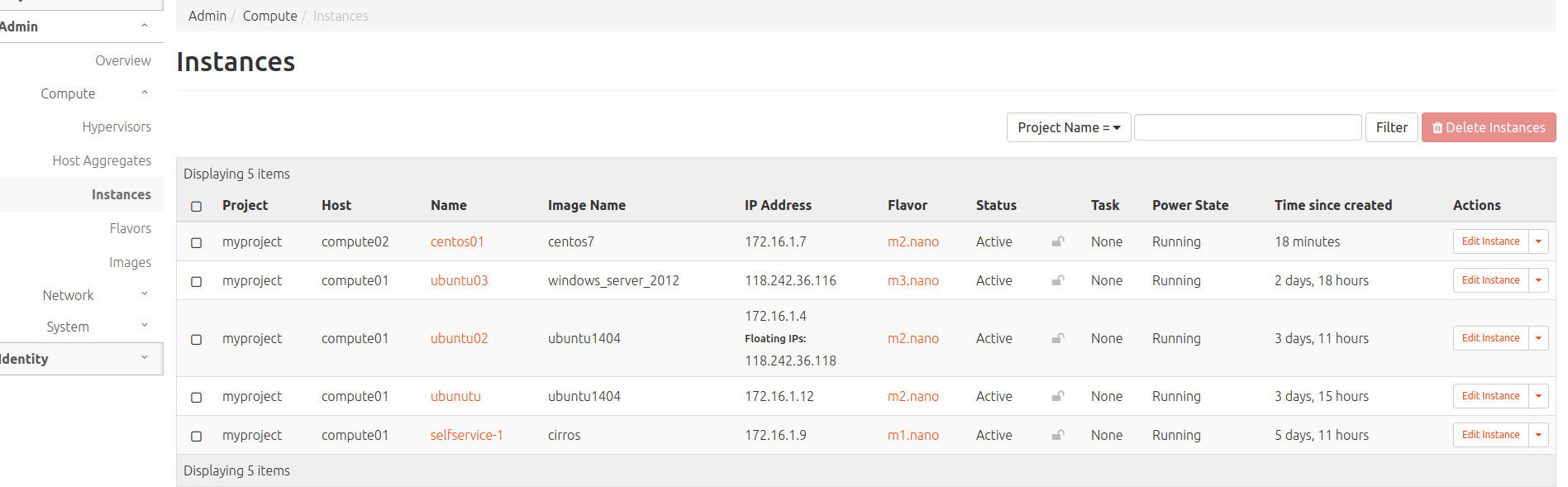

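7.8 Create CentOS and Ubuntu instances

Create a flavor:

openstack flavor create --id 1 --vcpus 2 --ram 4096 --disk 20 m2.nano

Upload the Ubuntu cloud image. The file used here is the Ubuntu 16.04 (Xenial) image, although it is registered under the name ubuntu14.04.5; the name given here must match the --image argument used when creating the server:

openstack image create "ubuntu14.04.5" --file xenial-server-cloudimg-amd64-disk1.img --disk-format qcow2 --container-format bare --public

For other image download locations, see:

https://docs.openstack.org/image-guide/obtain-images.html

Launch an instance from the image:

openstack server create --flavor m2.nano --image ubuntu14.04.5 --nic net-id=b828ee15-aaf9-4529-b50b-51694ce8bf86 --security-group default --key-name mykey ubuntu14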

openstack server list

+--------------------------------------+---------------+--------+------------------------+---------------+---------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+---------------+--------+------------------------+---------------+---------+

| 39e92f2f-5851-4695-9d18-eedf2919aaae | ubuntu14 | ACTIVE | selfservice=172.16.1.2 | ubuntu14.04.5 | m2.nano |

| ea7d7de2-b576-44f8-8388-509efc3002b2 | selfservice-1 | ACTIVE | selfservice=172.16.1.9 | cirros | m1.nano |

+--------------------------------------+---------------+--------+------------------------+---------------+---------+

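Create an instance initialization script:

#!/bin/sh
# Set the root password non-interactively (123456 is a lab placeholder; change it).
passwd root<<EOF
123456
123456
EOF
# Allow password authentication and root login over SSH.
sed -i 's/PasswordAuthentication no/PasswordAuthentication yes/g' /etc/ssh/sshd_config
sed -i 's/PermitRootLogin prohibit-password/PermitRootLogin yes/g' /etc/ssh/sshd_config
# Restart sshd so the new settings take effect.
service ssh restart

Further initialization steps can be added to this script. It is supplied to the instance at boot, for example through the --user-data option of openstack server create.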
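The two sed substitutions in the script only match the exact strings PasswordAuthentication no and PermitRootLogin prohibit-password; if an image ships these directives commented out or with another value, the script leaves them unchanged. A more tolerant variant, sketched here under the assumption that each directive sits on its own line, rewrites any existing setting. The function name enable_root_password_login is hypothetical, and the demonstration runs on a temporary file rather than /etc/ssh/sshd_config.

```shell
#!/bin/sh
# Hypothetical helper: force both directives to "yes" in an sshd_config-style
# file ($1), whether the existing line is commented out or has another value.
enable_root_password_login() {
  sed -e 's/^#\{0,1\}[[:space:]]*PasswordAuthentication[[:space:]].*/PasswordAuthentication yes/' \
      -e 's/^#\{0,1\}[[:space:]]*PermitRootLogin[[:space:]].*/PermitRootLogin yes/' \
      "$1" > "$1.new" && mv "$1.new" "$1"
}

# Demonstration on a throwaway file with one commented and one active directive.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
#PermitRootLogin prohibit-password
PasswordAuthentication no
EOF
enable_root_password_login "$cfg"
cat "$cfg"
rm -f "$cfg"
```

The function only rewrites lines that already exist; it does not append a directive that is missing from the file altogether.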

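7.9 Attach a floating IP

Allocate a floating IP on the provider network for an instance on the private network:

openstack floating ip create provider

Associate the allocated address with the instance (substitute your own instance name and the address returned by the previous command):

openstack server add floating ip ubuntu02 118.242.36.118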

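7.10 Verify that the launched instances work correctly

8 Add a new compute node

8.1 Configure the nova compute service

8.1.1 Install the nova package

sudo apt install nova-compute

8.1.2 Edit the nova configuration file

Back up the original file, then edit /etc/nova/nova.conf as shown:

sudo cp /etc/nova/nova.conf{,.bak}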

[DEFAULT]

lock_path = /var/lock/nova

state_path = /var/lib/nova

transport_url = rabbit://openstack:123456@controller

my_ip = 192.168.88.232

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 123456

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://192.168.88.230:6080/vnc_auto.html

[glance]

# ...

api_servers = http://controller:9292

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = 123456
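After editing, it can help to read back individual options to confirm they landed in the intended section, for example that my_ip carries this node's management address. The sketch below is a small awk-based reader for INI-style files; ini_get is a hypothetical helper, not a nova or oslo.config tool, and it does not expand references such as $my_ip.

```shell
#!/bin/sh
# Hypothetical helper: print the value of KEY in [SECTION] of an INI-style file.
# Usage: ini_get FILE SECTION KEY
# If the key appears more than once in the section, the last value is printed.
ini_get() {
  awk -v section="$2" -v key="$3" '
    /^[ \t]*\[/ {                       # section header such as [DEFAULT]
      current = $0
      gsub(/^[ \t]*\[|\][ \t]*$/, "", current)
      next
    }
    /^[ \t]*[#;]/ { next }              # skip comment lines
    current == section {
      eq = index($0, "=")
      if (eq == 0) next
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { value = v; found = 1 }
    }
    END { if (found) print value }
  ' "$1"
}

# Demonstration on a throwaway file that mirrors part of the configuration above.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[DEFAULT]
my_ip = 192.168.88.232
[vnc]
enabled = true
server_proxyclient_address = $my_ip
EOF
echo "my_ip: $(ini_get "$conf" DEFAULT my_ip)"
echo "vnc enabled: $(ini_get "$conf" vnc enabled)"
rm -f "$conf"
```

On the compute node the same check would be ini_get /etc/nova/nova.conf DEFAULT my_ip, which should print the address of the node being added.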

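8.1.3 Start nova on the compute node

service nova-compute restart

Verify. On the controller node, discover the new compute host and map it to a cell: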

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 16da0d9a-3477-4ad7-ba0c-78a1368bf4c2

Checking host mapping for compute host 'compute02': 1bc43e54-221f-4bcf-a3d1-3da8362879a4

Creating host mapping for compute host 'compute02': 1bc43e54-221f-4bcf-a3d1-3da8362879a4

8.2 Configure neutron networking

8.2.1 Install the neutron components

apt install neutron-linuxbridge-agent

8.2.2 Configure neutron

Back up /etc/neutron/neutron.conf, then edit it as shown:

cp /etc/neutron/neutron.conf{,.bak}

[DEFAULT]

# ...

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

Networking Option 2: Self-service networks:

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = true

local_ip = 192.168.88.232

l2_population = true

[securitygroup]

# ...

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

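Load the br_netfilter kernel module first, because the net.bridge.* keys only exist once it is loaded:

modprobe br_netfilter

Add the following lines to /etc/sysctl.conf:

vim /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Apply the settings:

sysctl -p

In /etc/nova/nova.conf, configure the [neutron] section: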

[neutron]

# ...

url = http://controller:9696

auth_url = http://controller:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

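8.2.3 Start and verify the new compute node

service nova-compute restart

service neutron-linuxbridge-agent restart

Verify the previous steps by listing the network agents (the neutron CLI is deprecated; openstack network agent list reports the same information):

neutron agent-list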

neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.

+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

| id | agent_type | host | availability_zone | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

| 55bef7b0-1d8c-495c-80fa-baeed3739c6a | Linux bridge agent | controller | | :-) | True | neutron-linuxbridge-agent |

| 6facb724-4947-4684-afd1-48d2fe24eac0 | DHCP agent | controller | nova | :-) | True | neutron-dhcp-agent |

| 755c54c8-0e80-4d67-8c27-e209760852bb | Linux bridge agent | compute01 | | :-) | True | neutron-linuxbridge-agent |

| 9504c570-c9b3-4832-9b41-4216da934e2e | Metadata agent | controller | | :-) | True | neutron-metadata-agent |

| aa26e51c-cc4e-46ee-a0de-f14d3df93943 | Linux bridge agent | compute02 | | :-) | True | neutron-linuxbridge-agent |

| dc6320f3-cadc-4f25-add8-4630daf5fe37 | L3 agent | controller | nova | :-) | True | neutron-l3-agent |

+--------------------------------------+--------------------+------------+-------------------+-------+----------------+---------------------------+

Verify the compute services:

openstack compute service list

+----+------------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+------------+----------+---------+-------+----------------------------+

| 4 | nova-consoleauth | controller | internal | enabled | up | 2019-07-21T10:16:23.000000 |

| 5 | nova-scheduler | controller | internal | enabled | up | 2019-07-21T10:16:26.000000 |

| 9 | nova-conductor | controller | internal | enabled | up | 2019-07-21T10:16:26.000000 |

| 12 | nova-compute | compute01 | nova | enabled | up | 2019-07-21T10:16:25.000000 |

| 13 | nova-compute | compute02 | nova | enabled | up | 2019-07-21T10:16:22.000000 |

+----+------------------+------------+----------+---------+-------+----------------------------+

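Create an instance to verify.

9 cinder Block Storage service

The OpenStack Block Storage service (cinder) adds persistent storage to instances. It provides an infrastructure for managing volumes and interacts with the Compute service to provide volumes to instances. The service also enables management of volume snapshots and volume types.

https://docs.openstack.org/install-guide/launch-instance-cinder.html

Because only a limited number of lab servers were available, this part was not tested.

10 Appendix

10.1 Common errors

Problem:

Failed to allocate the network(s), not rescheduling.

Solution:

Add the following two settings to /etc/nova/nova.conf on the compute node. This is a workaround: nova no longer waits for neutron to confirm that the virtual interface was plugged, so an instance may boot without working networking if the underlying neutron problem persists.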

#Fail instance boot if vif plugging fails

vif_plugging_is_fatal = False

#Number of seconds to wait for neutron vif

#plugging events to arrive before continuing or failing

#(see vif_plugging_is_fatal). If this is set to zero and

#vif_plugging_is_fatal is False, events should not be expected to arrive at all.

vif_plugging_timeout = 0

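If an instance cannot be created, check the logs of all services on the controller and compute nodes, and also check iptables, SELinux, time synchronization, and so on: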

grep 'ERROR' /var/log/nova/*

grep 'ERROR' /var/log/neutron/*

grep 'ERROR' /var/log/glance/*

grep 'ERROR' /var/log/keystone/*
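Rather than scanning every log in full, it is often enough to look at the most recent ERROR entries per file. A minimal sketch follows; recent_errors is a hypothetical helper, and it assumes the service logs end in .log under the directories used in the grep commands above.

```shell
#!/bin/sh
# Hypothetical helper: print the last N lines containing ERROR from each *.log
# file in the given directories. Usage: recent_errors N DIR [DIR...]
recent_errors() {
  n=$1
  shift
  for dir in "$@"; do
    [ -d "$dir" ] || continue
    for f in "$dir"/*.log; do
      [ -f "$f" ] || continue
      matches=$(grep 'ERROR' "$f" | tail -n "$n")
      if [ -n "$matches" ]; then
        printf '== %s ==\n%s\n' "$f" "$matches"
      fi
    done
  done
}

# Directories that do not exist on this machine are skipped silently.
recent_errors 5 /var/log/nova /var/log/neutron /var/log/glance /var/log/keystone
```

Running it on both the controller and the affected compute node, shortly after a failed launch, keeps the output limited to entries that are likely related to that failure.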

10.2 Raising the default limit of 10 instances

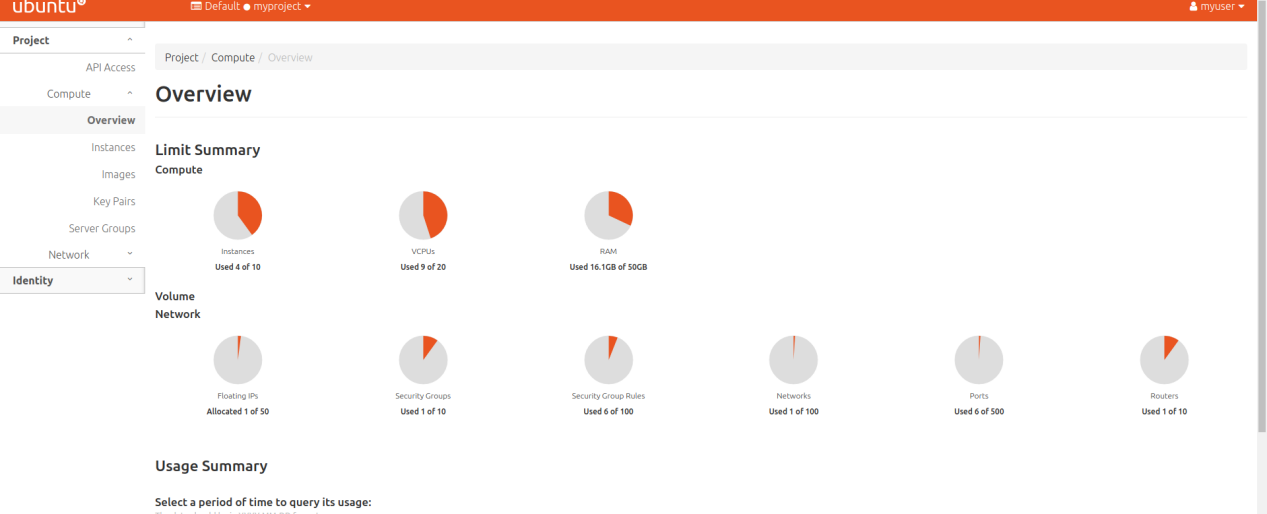

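By default OpenStack allows at most 10 instances to be created, because the default instance quota is 10.

To raise the limit, change the quota options in /etc/nova/nova.conf.

Append the following to the end of the [DEFAULT] section: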

quota_instances=1000000

quota_cores=20000

quota_ram=5120000000

quota_floating_ips=100000

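Adjust the values to suit your needs.

Further topics

How to convert an instance into an image

Live and cold migration of instances

Migrating the Glance image service

High availability for the controller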
